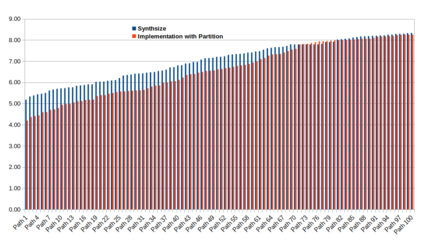

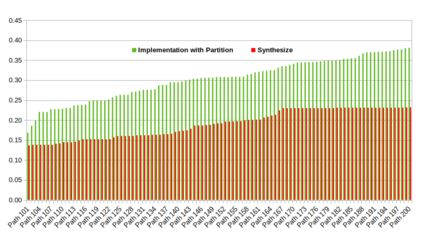

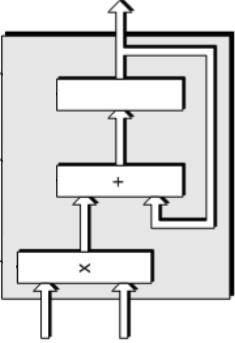

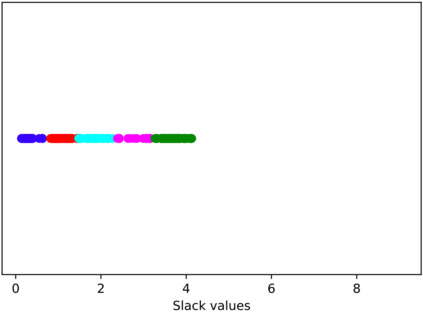

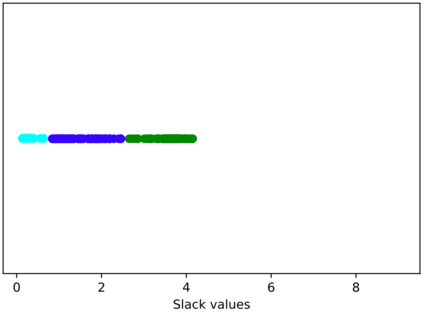

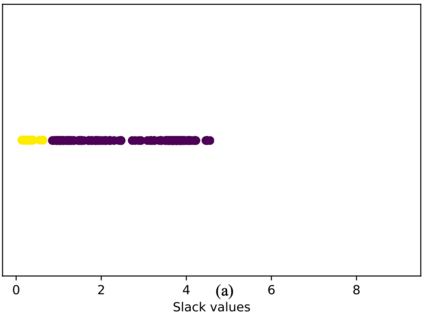

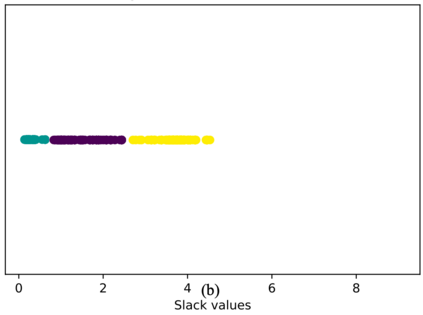

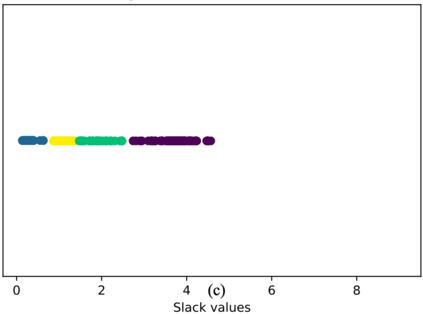

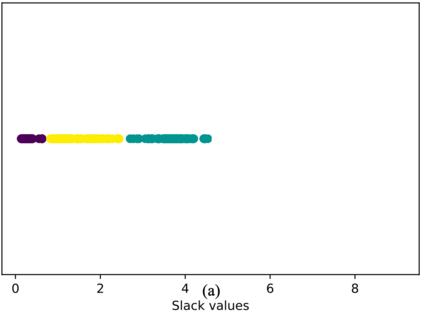

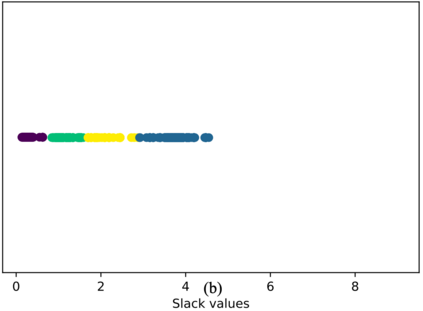

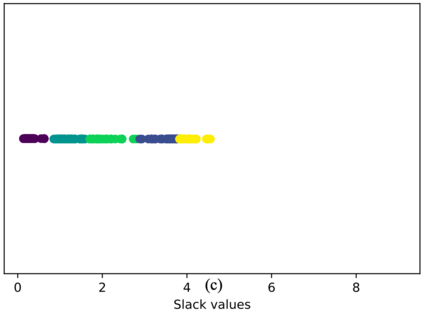

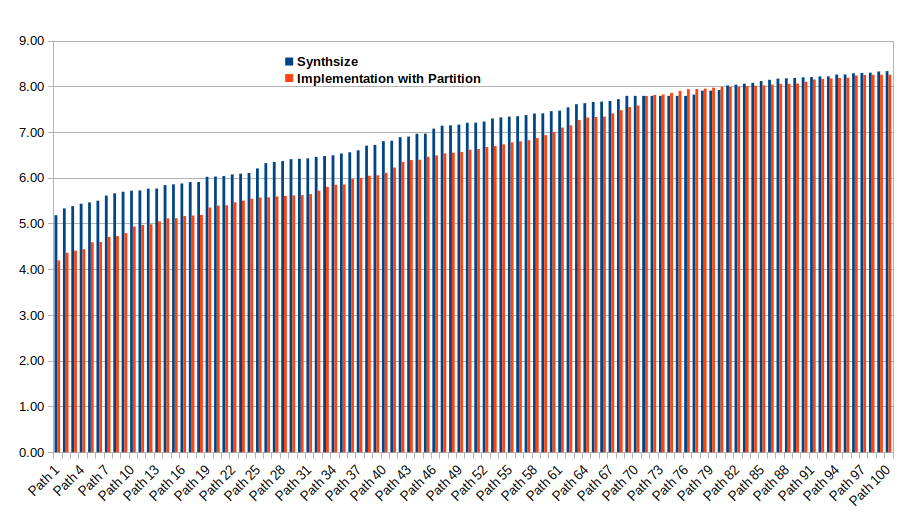

The rapid growth of Field Programmable Gate Arrays (FPGAs) has accelerated research on hardware implementations of Deep Neural Networks (DNNs). Among DNN processors, domain-specific architectures such as Google's Tensor Processing Unit (TPU) have outperformed conventional GPUs. However, implementations of TPUs in reconfigurable hardware should emphasize energy savings to meet green computing requirements. Voltage scaling, a popular approach to saving energy, is delicate in FPGAs because it can cause timing failures if not applied appropriately. In this work, we present an ultra-low-power FPGA implementation of a TPU for edge applications. We divide the systolic array of a TPU into different FPGA partitions, where each partition uses a different near-threshold computing (NTC) biasing voltage to run its FPGA cores. The biasing voltage for each partition is first estimated by the proposed static schemes and then calibrated further by the proposed runtime scheme. Four clustering algorithms, based on the minimum slack of the design paths of the Multiply-Accumulate units (MACs), are used to study the partitioning of the FPGA. To overcome the timing failures caused by NTC, MACs with higher minimum slack are placed in lower-voltage partitions, and MACs with lower minimum slack are placed in higher-voltage partitions. The proposed architecture is simulated on a commercial platform, Vivado with a Xilinx Artix-7 FPGA, and on an academic platform, VTR with 22nm, 45nm, and 130nm FPGAs. The simulation results substantiate the implementation of a voltage-scaled TPU in FPGAs and also justify its power efficiency.
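The slack-based placement rule can be illustrated with a minimal sketch. This is not one of the four clustering algorithms from the paper, which the abstract does not specify; it is a simple rank-and-split stand-in. The function name `assign_voltage_partitions`, the MAC labels, the slack values, and the voltage levels are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List


def assign_voltage_partitions(
    min_slack_ns: Dict[str, float],
    voltages: List[float],
) -> Dict[str, float]:
    """Map each MAC to a supply voltage based on its minimum path slack.

    Hypothetical stand-in for a clustering step: MACs are ranked by slack
    (largest first) and split into near-equal groups, one per voltage
    level. The group with the most slack receives the lowest voltage.
    """
    if not voltages:
        raise ValueError("at least one voltage level is required")
    levels = sorted(voltages)  # lowest voltage first
    # Rank MACs from most timing slack to least.
    ranked = sorted(min_slack_ns, key=min_slack_ns.get, reverse=True)
    group_size = -(-len(ranked) // len(levels))  # ceiling division
    return {mac: levels[i // group_size] for i, mac in enumerate(ranked)}


# Illustrative values only: slack in ns, voltages in volts.
slack = {"mac0": 0.9, "mac1": 0.2, "mac2": 0.6, "mac3": 0.1}
partitions = assign_voltage_partitions(slack, voltages=[0.6, 0.5])
```

In this sketch, `mac0` and `mac2` have the most slack and so tolerate the lower of the two voltages, while `mac1` and `mac3` sit on tighter paths and keep the higher one. A real flow would take the slack values from a timing report and refine the voltages at runtime, as the abstract describes.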
