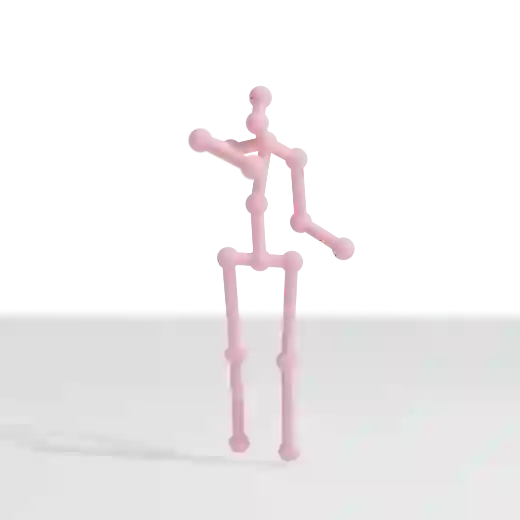

We present a self-supervised learning algorithm for 3D human pose estimation of a single person, based on a multiple-view camera system and 2D body pose estimates for each view. To train our model, represented by a deep neural network, we propose a learning algorithm with four loss functions that does not require any 2D or 3D body pose ground truth. The proposed loss functions make use of multiple-view geometry to reconstruct 3D body pose estimates and impose body pose constraints across the camera views. Our approach utilizes all available camera views during training, while inference is single-view. In our evaluations, we show promising performance on the Human3.6M and HumanEva benchmarks, and we also present a generalization study on the MPI-INF-3DHP dataset, as well as several ablation results. Overall, we outperform all self-supervised learning methods and reach results comparable to supervised and weakly-supervised learning approaches. Our code and models are publicly available.
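
To make the role of multiple-view geometry concrete, the following is a minimal sketch of how one 3D joint could be reconstructed from its 2D estimates in several views and then checked by reprojection. It uses standard linear (DLT) triangulation as a stand-in; the abstract does not describe the four loss functions in detail, so this should not be read as the authors' method. The function names `triangulate_dlt`, `project`, and `reprojection_error` are hypothetical, and the 3x4 camera projection matrices are assumed to be known.

```python
import numpy as np


def triangulate_dlt(projections, points_2d):
    """Triangulate one 3D point from two or more views (linear DLT).

    projections: sequence of 3x4 camera matrices (assumed known).
    points_2d:   sequence of (x, y) image coordinates, one per view.
    """
    rows = []
    for P, (x, y) in zip(projections, points_2d):
        P = np.asarray(P, dtype=float)
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(x * P[2] - P[0])
        rows.append(y * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X_h = vt[-1]
    return X_h[:3] / X_h[3]


def project(P, X):
    """Project a 3D point X into the image of the camera with matrix P."""
    x_h = np.asarray(P, dtype=float) @ np.append(X, 1.0)
    return x_h[:2] / x_h[2]


def reprojection_error(projections, points_2d, X):
    """Mean Euclidean distance between observed and reprojected 2D points."""
    errors = [
        np.linalg.norm(project(P, X) - np.asarray(p, dtype=float))
        for P, p in zip(projections, points_2d)
    ]
    return float(np.mean(errors))


if __name__ == "__main__":
    # Two illustrative cameras: one at the origin, one shifted along the x axis.
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
    joint = np.array([0.2, -0.1, 4.0])
    observations = [project(P1, joint), project(P2, joint)]
    estimate = triangulate_dlt([P1, P2], observations)
    print(estimate, reprojection_error([P1, P2], observations, estimate))
```

A reprojection term of this kind is one common way to obtain a training signal from 2D estimates alone, since it compares the reconstructed 3D joint against each view without needing 3D ground truth.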
