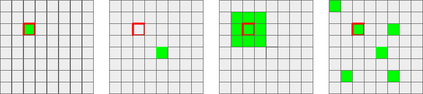

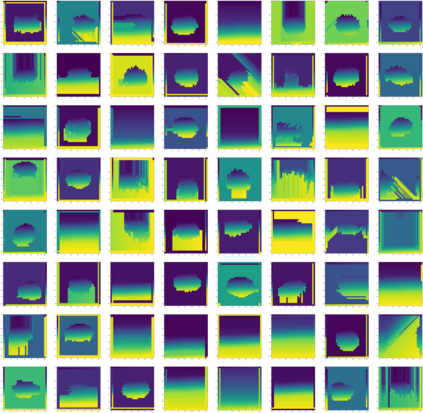

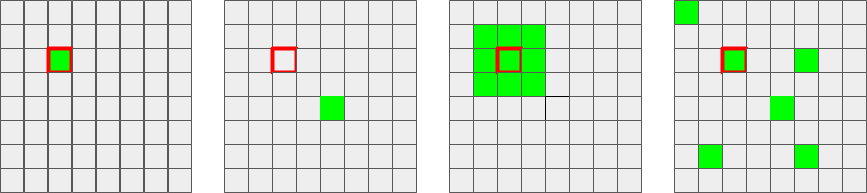

Privacy-Preserving Machine Learning algorithms must balance classification accuracy with data privacy. This can be done by combining cryptographic tools with machine learning models such as Convolutional Neural Networks (CNNs). CNNs typically consist of two types of operations: a convolutional or linear layer, followed by a non-linear function such as ReLU. Each type can be implemented efficiently with a different cryptographic tool, but these tools require different data representations, and switching between them is time-consuming and expensive. Recent research suggests that ReLU accounts for most of the communication bandwidth. ReLU is usually applied at every pixel (or activation) location, which is expensive. We propose to share ReLU operations. Specifically, the ReLU decision of one activation can be reused by others, and we explore different ways to group activations and different ways to determine the ReLU for such a group of activations. Experiments on several datasets reveal that we can cut the number of ReLU operations by up to three orders of magnitude and, as a result, cut the communication bandwidth by more than 50%.
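To make the idea of sharing a ReLU decision concrete, here is a minimal plaintext sketch, assuming one particular grouping (non-overlapping k×k spatial patches) and two illustrative decision rules (the patch mean, or the top-left activation as a representative). These choices, and the names `relu` and `shared_relu`, are hypothetical and not necessarily the grouping or decision rules the paper uses. In an actual private-inference protocol each decision would be a costly secure sign comparison; here the count of decisions only shows how grouping reduces the number of such comparisons.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def relu(x: Matrix) -> Tuple[Matrix, int]:
    """Standard ReLU: one sign decision per activation."""
    out = [[v if v > 0 else 0.0 for v in row] for row in x]
    decisions = sum(len(row) for row in x)
    return out, decisions


def shared_relu(x: Matrix, k: int, rule: str = "mean") -> Tuple[Matrix, int]:
    """Share one ReLU decision across each non-overlapping k x k patch.

    Hypothetical grouping and decision rules, for illustration only:
      rule="mean":  keep the whole patch if its mean activation is positive
      rule="first": keep the whole patch if its top-left activation is positive
    Edge patches are truncated when the size is not divisible by k.
    Returns the output and the number of sign decisions made.
    """
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    decisions = 0
    for i0 in range(0, h, k):
        for j0 in range(0, w, k):
            rows = range(i0, min(i0 + k, h))
            cols = range(j0, min(j0 + k, w))
            patch = [x[i][j] for i in rows for j in cols]
            if rule == "mean":
                score = sum(patch) / len(patch)
            elif rule == "first":
                score = patch[0]
            else:
                raise ValueError(f"unknown rule: {rule}")
            decisions += 1  # one sign evaluation for the whole group
            if score > 0:
                # The group decision is applied to every activation in the patch.
                for i in rows:
                    for j in cols:
                        out[i][j] = x[i][j]
    return out, decisions


if __name__ == "__main__":
    x = [
        [1.0, -2.0, 3.0, -1.0],
        [2.0, 0.5, -4.0, -2.0],
        [-1.0, -1.0, 2.0, 2.0],
        [-3.0, 1.0, 1.0, -0.5],
    ]
    _, n_full = relu(x)
    y, n_shared = shared_relu(x, k=2)
    print(n_full, n_shared)  # 16 4
    for row in y:
        print(row)
```

With k=2 the number of sign decisions drops by a factor of k² (16 to 4 here). The trade-off is approximation error: a negative activation inside a "kept" patch passes through (e.g. -2.0 above), and a positive one inside a dropped patch is zeroed, which is why the choice of grouping and decision rule matters for accuracy.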
