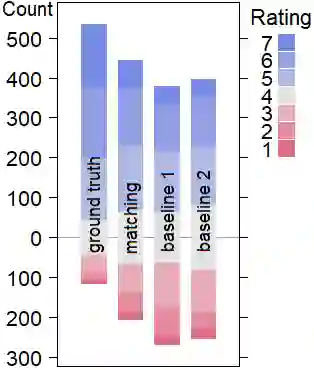

Automatic gesture generation from speech generally relies on implicit modelling of the nondeterministic speech-gesture relationship, which can result in averaged motion that lacks a defined form. Here, we propose a database-driven approach to selecting gestures based on specific motion characteristics that have been shown to be associated with the speech audio. We extend previous work that identified expressive parameters of gesture motion, such as gesture velocity and finger extension, that can be predicted from speech and are perceptually important for a good speech-gesture match. A perceptual study was performed to evaluate the appropriateness of the gestures selected with our method. We compare our method with two baseline selection methods. The first respects timing (the desired onset and duration of a gesture) but does not otherwise match gesture form. The second additionally disregards the original gesture timing when selecting gestures. The gesture sequences from our method were rated as a significantly better match to the speech than gestures selected by either baseline method.
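To make the selection idea concrete, the following is a minimal sketch of database-driven gesture selection. It is not the authors' implementation. It assumes a hypothetical database of clips, each annotated with a duration, a mean velocity and a normalised finger-extension value, and a hypothetical `Target` holding the timing of a speech segment together with the expressive parameters predicted for it. Clips are first filtered by duration (the timing constraint that both the proposed method and the first baseline respect). The remaining clip whose features lie closest to the predicted ones is then chosen. The tolerance and weights are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Gesture:
    """Hypothetical database entry: one motion-captured gesture clip."""
    clip_id: str
    duration: float          # seconds
    velocity: float          # assumed feature: mean wrist speed
    finger_extension: float  # assumed feature: normalised to 0..1


@dataclass
class Target:
    """Hypothetical desired gesture for one speech segment."""
    onset: float             # seconds; used to place the clip, not to select it
    duration: float          # desired gesture duration in seconds
    velocity: float          # value assumed to be predicted from speech
    finger_extension: float  # value assumed to be predicted from speech


def select_gesture(db: Sequence[Gesture], target: Target,
                   duration_tol: float = 0.2,
                   w_vel: float = 1.0, w_ext: float = 1.0) -> Gesture:
    """Pick a clip that fits the timing and best matches the predicted form."""
    # Timing constraint: keep clips whose duration is within a relative tolerance.
    candidates = [g for g in db
                  if abs(g.duration - target.duration) <= duration_tol * target.duration]
    if not candidates:
        # Fallback (an assumption): take the clip with the closest duration.
        return min(db, key=lambda g: abs(g.duration - target.duration))

    # Form constraint: weighted squared distance over the expressive features.
    def cost(g: Gesture) -> float:
        return (w_vel * (g.velocity - target.velocity) ** 2
                + w_ext * (g.finger_extension - target.finger_extension) ** 2)

    return min(candidates, key=cost)


if __name__ == "__main__":
    db = [Gesture("a", 1.0, 0.3, 0.2),
          Gesture("b", 1.1, 0.9, 0.8),
          Gesture("c", 2.5, 0.9, 0.8)]
    target = Target(onset=3.0, duration=1.0, velocity=0.85, finger_extension=0.7)
    print(select_gesture(db, target).clip_id)  # prints "b"
```

In this sketch, the first baseline would correspond to keeping the duration filter but choosing among `candidates` without the `cost` term. The second baseline would also drop the duration filter.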