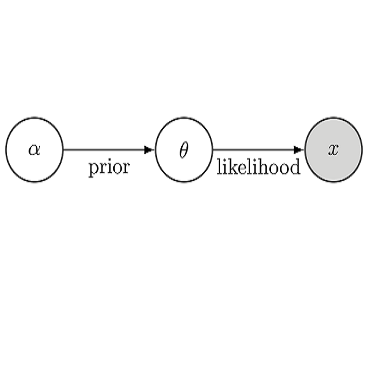

Recent advances in probabilistic deep learning enable amortized Bayesian inference in settings where the likelihood function is implicitly defined by a simulation program. But how faithful is such inference when simulations represent reality somewhat inaccurately? In this paper, we conceptualize the types of model misspecification arising in simulation-based inference and systematically investigate the performance of SNPE-C (APT) and the BayesFlow framework under these misspecifications. We propose an augmented optimization objective that imposes a probabilistic structure on the learned latent data summary space, and we use maximum mean discrepancy (MMD) to detect, during inference, potentially catastrophic misspecifications that undermine the validity of the obtained results. We verify our detection criterion on a number of artificial and realistic misspecifications, ranging from toy conjugate models to complex models of decision making and disease outbreak dynamics applied to real data. Further, we show that posterior inference errors increase as the distance between the latent summary distributions of the true data-generating process and the training simulations grows. Thus, we demonstrate the dual utility of MMD as a method for detecting model misspecification and as a proxy for verifying the faithfulness of amortized simulation-based Bayesian inference.
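To make the detection idea concrete, the following is a minimal sketch of a sample-based MMD comparison between two sets of latent summary vectors. It is not the authors' implementation: the Gaussian kernel, the fixed `bandwidth`, the biased (V-statistic) estimator, the function names, and the standard normal stand-in for the summaries of the training simulations are all assumptions made for illustration.

```python
import numpy as np


def gaussian_kernel(x, y, bandwidth=1.0):
    """Pairwise Gaussian kernel between the rows of x and the rows of y."""
    sq_dists = (
        np.sum(x**2, axis=1)[:, None]
        + np.sum(y**2, axis=1)[None, :]
        - 2.0 * x @ y.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    sq_dists = np.maximum(sq_dists, 0.0)
    return np.exp(-sq_dists / (2.0 * bandwidth**2))


def mmd_squared(x, y, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared MMD between two samples."""
    k_xx = gaussian_kernel(x, x, bandwidth).mean()
    k_yy = gaussian_kernel(y, y, bandwidth).mean()
    k_xy = gaussian_kernel(x, y, bandwidth).mean()
    return k_xx + k_yy - 2.0 * k_xy


rng = np.random.default_rng(0)

# Hypothetical stand-ins for summary-network outputs (4-dimensional summaries).
reference = rng.standard_normal((500, 4))        # summaries of training simulations
well_specified = rng.standard_normal((500, 4))   # observed data matching the simulator
misspecified = rng.standard_normal((500, 4)) + 2.0  # observed data from a shifted process

mmd_ok = mmd_squared(well_specified, reference)
mmd_shifted = mmd_squared(misspecified, reference)

print(f"MMD^2, well-specified: {mmd_ok:.4f}")
print(f"MMD^2, misspecified:   {mmd_shifted:.4f}")
```

In this toy setting the shifted sample yields a clearly larger estimate than the matching one. Turning such a value into a detection decision would additionally require a reference distribution of MMD values under a well-specified model, for example one estimated from held-out simulations; that step is left out of this sketch.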
