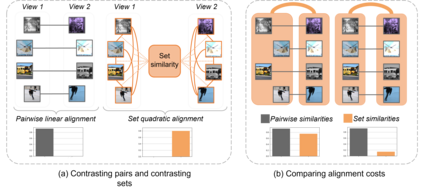

The standard approach to contrastive learning is to maximize the agreement between different views of the data. The views are organized into pairs: positive pairs, which encode different views of the same object, and negative pairs, which correspond to views of different objects. The supervisory signal comes from maximizing the total similarity over positive pairs, while the negative pairs are needed to avoid collapse. In this work, we note that considering individual pairs cannot account for both intra-set and inter-set similarities when the sets are formed from the views of the data. This limits the information content of the supervisory signal available to train representations. We propose to go beyond contrasting individual pairs of objects by contrasting objects as sets. For this, we use combinatorial quadratic assignment theory, which was designed to evaluate set and graph similarities, and derive a set-contrastive objective as a regularizer for contrastive learning methods. We conduct experiments and demonstrate that our method improves learned representations for the tasks of metric learning and self-supervised classification.
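For readers unfamiliar with the pairwise baseline described above, the following is a minimal sketch of an InfoNCE-style loss in NumPy. It illustrates only the standard pairwise objective, not the set-contrastive regularizer proposed in this work, which the abstract does not specify. The function name `info_nce`, the `temperature` value, and the use of cosine similarity are assumptions made for illustration.

```python
import numpy as np

def info_nce(z_a, z_b, temperature=0.1):
    """Pairwise contrastive loss over two batches of embeddings.

    Assumption: z_a[i] and z_b[i] are two views of the same object
    (a positive pair); every other combination is a negative pair.
    """
    # Normalize rows so the dot product is cosine similarity.
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)

    # Similarity of every view in z_a to every view in z_b.
    logits = z_a @ z_b.T / temperature

    # Row-wise log-softmax, shifted by the row max for numerical stability.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # Positive pairs sit on the diagonal; negatives appear only
    # in the softmax normalizer.
    return -np.mean(np.diag(log_prob))
```

Each positive pair contributes its own term to this loss, and the negatives enter only through the normalizer. The loss therefore scores pairs one at a time, which is the limitation the abstract refers to.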
