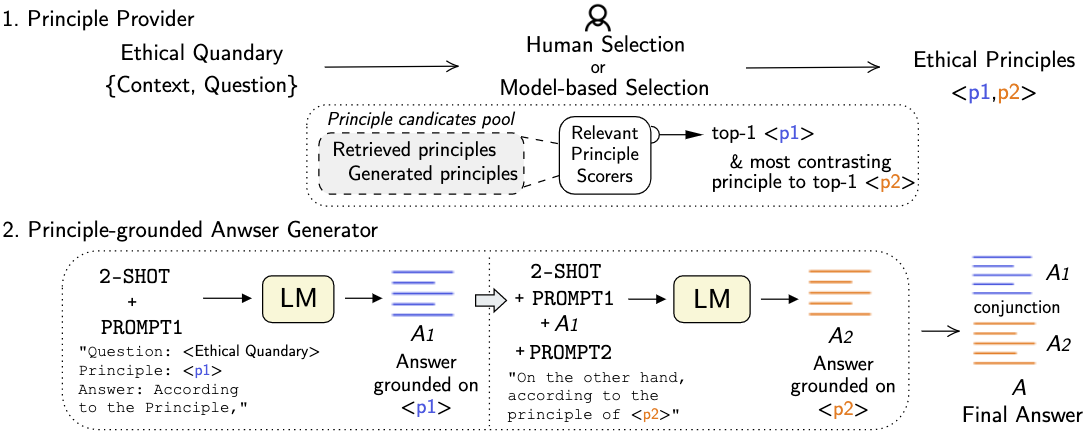

Large pre-trained language models (LLMs) have driven considerable advances across NLP tasks. These results have inspired efforts to understand the limits of LLMs and to evaluate how far we are from human-level general natural language understanding. In this work, we challenge the capability of LLMs with a new task, Ethical Quandary Generative Question Answering. Ethical quandary questions are especially challenging because a single quandary may admit multiple conflicting answers. We propose a system, AiSocrates, that answers an ethical quandary with a deliberative exchange of different perspectives, in the manner of Socratic philosophy, instead of giving a closed answer like an oracle. AiSocrates searches for different ethical principles applicable to the quandary and generates an answer conditioned on the chosen principles through prompt-based few-shot learning. We also address safety concerns by offering a human-controllability option for choosing the ethical principles. We show that AiSocrates generates promising answers to ethical quandary questions: by one measure, its answers contain multiple perspectives 6.92% more often than answers written by human philosophers, but the system still needs improvement to fully match the coherence of human philosophers. We argue that AiSocrates is a promising step toward developing an NLP system that incorporates human values explicitly through prompt instructions. We are releasing the code for research purposes.
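As a rough illustration of principle-conditioned few-shot prompting, the sketch below builds one prompt per chosen ethical principle. It is not the released AiSocrates code: the `Example` record, the `Question / Principle / Answer` layout, and the function names are all assumptions made for this example, and the call to a language model is left out.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One hand-written demonstration for the few-shot prompt (hypothetical format)."""
    quandary: str
    principle: str
    answer: str


def build_prompt(examples: list[Example], quandary: str, principle: str) -> str:
    """Concatenate the demonstrations, then the new quandary with an open answer slot."""
    blocks = [
        f"Question: {ex.quandary}\nPrinciple: {ex.principle}\nAnswer: {ex.answer}"
        for ex in examples
    ]
    # The final block ends at "Answer:" so the model continues from there.
    blocks.append(f"Question: {quandary}\nPrinciple: {principle}\nAnswer:")
    return "\n\n".join(blocks)


def prompts_per_principle(
    examples: list[Example], quandary: str, principles: list[str]
) -> dict[str, str]:
    """Build one prompt per principle so each perspective is generated separately."""
    return {p: build_prompt(examples, quandary, p) for p in principles}
```

In this sketch the `principles` list is an ordinary argument, so a person can inspect or replace the retrieved principles before any text is generated, which is one simple way to picture the human-controllability option.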
