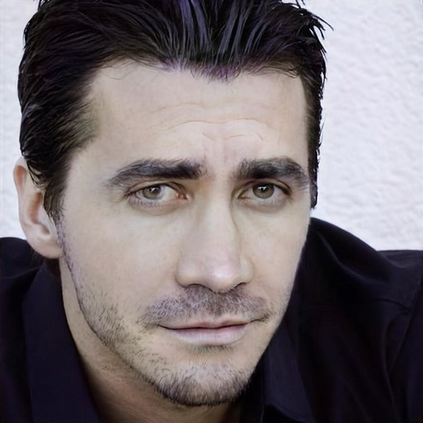

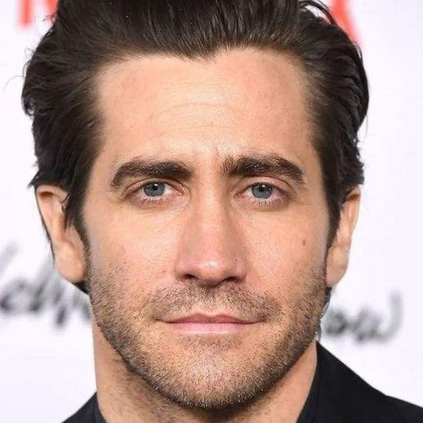

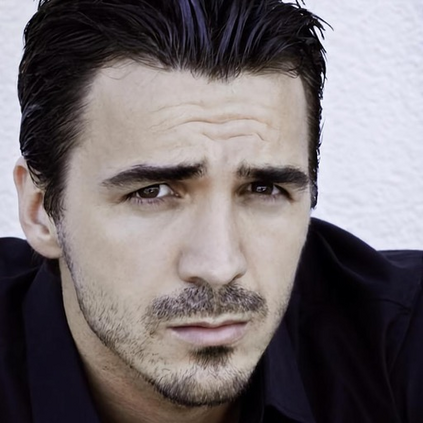

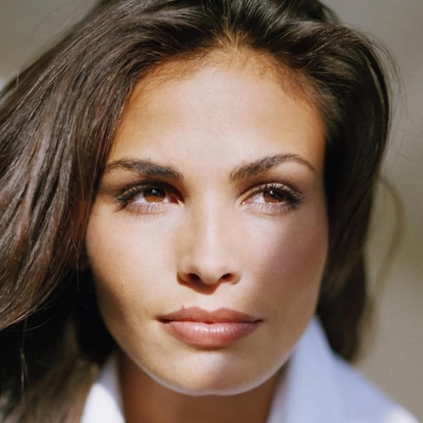

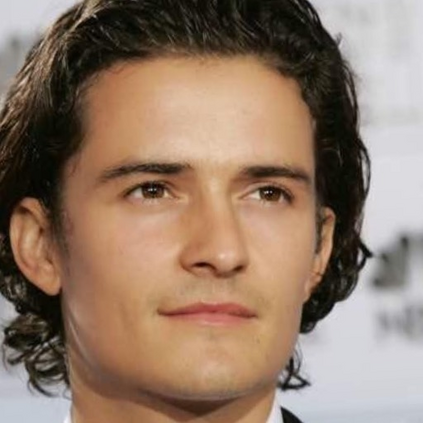

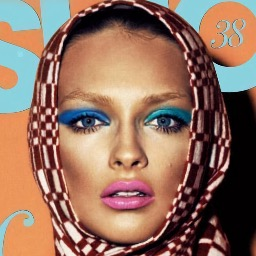

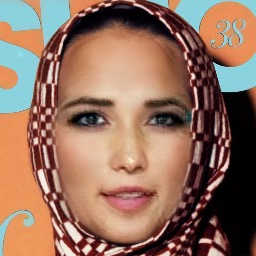

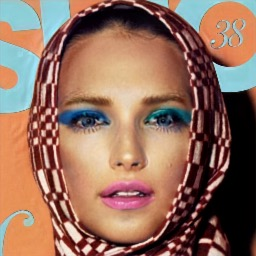

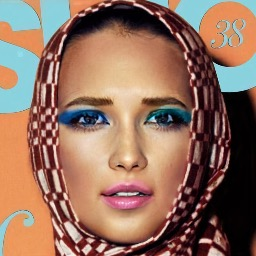

Given its wide range of applications, person-agnostic face swapping has been the subject of numerous attempts. Existing methods mostly rely on tedious network and loss designs, yet they still struggle to balance information between the source and target faces and tend to produce visible artifacts. In this work, we introduce a concise and effective framework named StyleSwap. Our core idea is to leverage a style-based generator for high-fidelity, robust face swapping, so that the generator's advantages can also be exploited to optimize identity similarity. We find that, with only minimal modifications, a StyleGAN2 architecture can successfully handle the desired information from both source and target. In addition, inspired by the ToRGB layers, we devise a Swapping-Driven Mask Branch to improve information blending. The framework can also benefit from StyleGAN inversion: we propose a Swapping-Guided ID Inversion strategy to optimize identity similarity. Extensive experiments validate that our framework generates high-quality face swapping results that outperform state-of-the-art methods both qualitatively and quantitatively.
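
The abstract does not spell out how the mask branch blends images or how identity similarity is measured. As a rough illustration only, the sketch below assumes a soft per-pixel mask that mixes the swapped output with the target frame, and cosine similarity between identity embeddings as the similarity measure. The function names `blend_with_mask` and `identity_similarity` are hypothetical and are not taken from the StyleSwap code.

```python
import numpy as np


def blend_with_mask(swapped, target, mask):
    """Blend two images with a soft mask (assumed formulation, not the paper's).

    swapped, target: float arrays of shape (H, W, 3)
    mask: float array of shape (H, W, 1); 1 keeps the swapped pixel,
          0 keeps the target pixel.
    """
    mask = np.clip(mask, 0.0, 1.0)
    return mask * swapped + (1.0 - mask) * target


def identity_similarity(emb_a, emb_b, eps=1e-8):
    """Cosine similarity between two identity embeddings (1-D arrays)."""
    a = emb_a / (np.linalg.norm(emb_a) + eps)
    b = emb_b / (np.linalg.norm(emb_b) + eps)
    return float(np.dot(a, b))


if __name__ == "__main__":
    swapped = np.ones((4, 4, 3))
    target = np.zeros((4, 4, 3))
    mask = np.zeros((4, 4, 1))
    mask[:, :2] = 1.0  # left half comes from the swapped image
    blended = blend_with_mask(swapped, target, mask)
    print(blended[0, 0], blended[0, 3])

    source_id = np.array([0.6, 0.8, 0.0])
    print(identity_similarity(source_id, source_id))
```

In an inversion-style setup, a quantity such as `1 - identity_similarity(...)` could serve as the loss being minimized, with the embeddings coming from a pretrained face recognition network. That choice is likewise an assumption here, not a detail stated in the abstract.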
