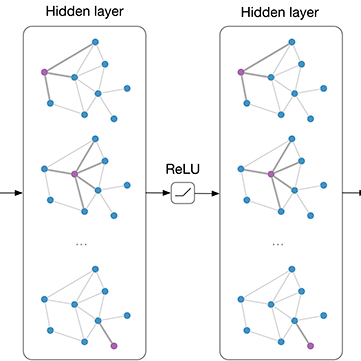

Node classification based on graph convolutional networks (GCNs) is vulnerable to adversarial attacks that maliciously perturb the graph structure, for example by inserting or deleting edges. In this paper, given a general attack model, we reduce the vulnerability of the GCN to a concrete description: the GCN's edge-read permission is what makes it vulnerable. We propose the Anonymous GCN (AN-GCN), which lets nodes participate in classification anonymously and thereby withdraws the GCN's edge-read permission. Extensive evaluations demonstrate that, by keeping node positions anonymous during classification, AN-GCN blocks malicious perturbations from propagating along visible edges while maintaining high accuracy (2.93% higher than the state-of-the-art GCN).
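To make the structural vulnerability concrete, the following is a minimal sketch of how inserted edges change what a node aggregates in a standard GCN-style propagation step. It is not the attack model or the AN-GCN method from the paper. The toy graph, the feature values, and the helper names `normalized_adjacency` and `propagate` are all illustrative assumptions, and the step omits learned weights and nonlinearities.

```python
import math

def normalized_adjacency(n, edges):
    """Return D^{-1/2} (A + I) D^{-1/2} for an undirected graph, as nested lists."""
    # Start from the identity so that every node has a self-loop.
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for u, v in edges:
        a[u][v] = a[v][u] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]

def propagate(a_hat, x):
    """One weight-free propagation step: the matrix product a_hat @ x."""
    return [[sum(a_hat[i][k] * x[k][f] for k in range(len(x)))
             for f in range(len(x[0]))]
            for i in range(len(x))]

# Hypothetical toy graph: nodes 0-1 carry class-A features, nodes 2-4 class-B features.
features = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
clean_edges = [(0, 1), (2, 3), (3, 4)]
# Assumed perturbation: an attacker who can read and edit edges links node 0 to class B.
attacked_edges = clean_edges + [(0, 2), (0, 3), (0, 4)]

clean = propagate(normalized_adjacency(5, clean_edges), features)
attacked = propagate(normalized_adjacency(5, attacked_edges), features)

print("node 0, clean graph:   ", clean[0])
print("node 0, attacked graph:", attacked[0])
```

On the clean graph, node 0 aggregates only class-A features. After the three inserted edges, the class-B component of its aggregated representation exceeds the class-A component, even though node 0's own features are unchanged. This is the kind of edge-borne influence the abstract refers to.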
