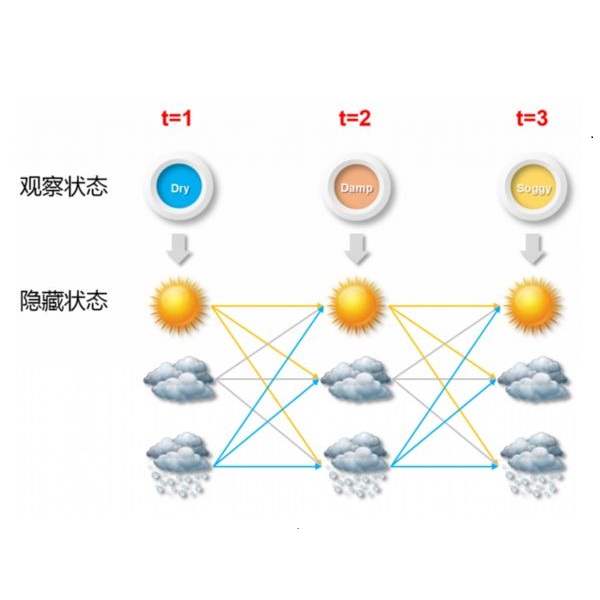

Pufferfish is a Bayesian privacy framework for designing and analyzing privacy mechanisms. It refines differential privacy, the current gold standard in data privacy, by allowing explicit prior knowledge in privacy analysis. Through these privacy frameworks, a number of privacy mechanisms have been developed in literature. In practice, privacy mechanisms often need be modified or adjusted to specific applications. Their privacy risks have to be re-evaluated for different circumstances. Moreover, computing devices only approximate continuous noises through floating-point computation, which is discrete in nature. Privacy proofs can thus be complicated and prone to errors. Such tedious tasks can be burdensome to average data curators. In this paper, we propose an automatic verification technique for Pufferfish privacy. We use hidden Markov models to specify and analyze discretized Pufferfish privacy mechanisms. We show that the Pufferfish verification problem in hidden Markov models is NP-hard. Using Satisfiability Modulo Theories solvers, we propose an algorithm to analyze privacy requirements. We implement our algorithm in a prototypical tool called FAIER, and present several case studies. Surprisingly, our case studies show that na\"ive discretization of well-established privacy mechanisms often fail, witnessed by counterexamples generated by FAIER. In discretized \emph{Above Threshold}, we show that it results in absolutely no privacy. Finally, we compare our approach with testing based approach on several case studies, and show that our verification technique can be combined with testing based approach for the purpose of (i) efficiently certifying counterexamples and (ii) obtaining a better lower bound for the privacy budget $\epsilon$.

翻译:普费鱼是一个用于设计和分析隐私机制的巴耶斯隐私框架。 它通过在隐私分析中允许明确的事先知识, 改善隐私差异, 目前的数据隐私金质标准。 通过这些隐私框架, 在文献中开发了一些隐私机制。 在实践上, 隐私机制往往需要修改或调整以适应特定应用。 隐私机制需要根据不同的情况重新评价。 此外, 计算机设备只能通过浮动点计算来估计连续的噪音, 这在性质上是互不相连的。 隐私证据因此可能复杂, 容易出错。 这种烦琐的任务可能会给平均数据保管者造成负担。 在本文中, 我们提出对普费鱼隐私的自动核查技术。 我们使用隐蔽的马尔科夫鱼的隐私机制来指定和分析离散的隐私机制。 我们的案例研究显示, 隐藏的马尔科夫索鱼的保密机制中的普费鱼的核查问题很难被重新定义。 我们建议一种算法来分析隐私要求。 我们用一种叫做FAIER的快速方法, 并且提出若干个案例研究。 令人惊讶的是, 我们的案例研究显示, 以基于基于保密性测试的保密性测试技术, 展示了一种基于常规的快速测试的结果。