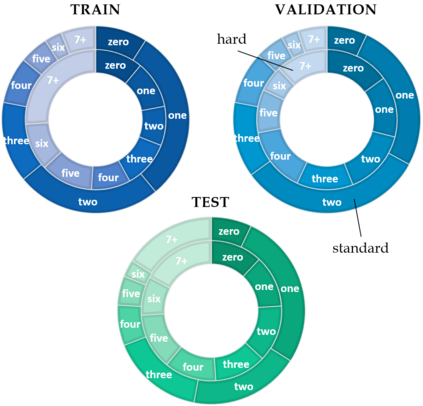

We investigate the reasoning ability of pretrained vision and language (V&L) models on two tasks that require multimodal integration: (1) discriminating a correct image-sentence pair from an incorrect one, and (2) counting entities in an image. We evaluate three pretrained V&L models on these tasks, ViLBERT, ViLBERT 12-in-1 and LXMERT, in zero-shot and finetuned settings. Our results show that the models solve task (1) very well. This is expected, since all of them are pretrained on task (1). However, none of the pretrained V&L models adequately solves task (2), our counting probe, and none generalises to out-of-distribution quantities. We propose several explanations for these findings. On task (1), LXMERT (and to some extent ViLBERT 12-in-1) shows some evidence of catastrophic forgetting. On the counting probe, we find evidence that all models are affected by dataset bias and also fail to individuate entities in the visual input. While a selling point of pretrained V&L models is their ability to solve complex tasks, our findings suggest that understanding their reasoning and grounding capabilities requires more targeted investigations into specific phenomena.
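Task (1) can be scored as a pairwise comparison. The following is a minimal sketch under stated assumptions and is not the authors' evaluation code. It assumes that the model assigns a scalar image-sentence alignment score to each pair, and that every test item pairs one correct sentence with one incorrect sentence for the same image. An item counts as solved only when the correct pair scores strictly higher, so chance level is 0.5. The function name `pairwise_accuracy` and the example scores are hypothetical.

```python
from typing import Sequence, Tuple


def pairwise_accuracy(pairs: Sequence[Tuple[float, float]]) -> float:
    """Fraction of items where the correct pair outscores the incorrect one.

    Each item is (score_correct, score_incorrect): hypothetical alignment
    scores a V&L model assigns to the correct and the incorrect
    image-sentence pair built from the same image. Ties count as errors.
    """
    if not pairs:
        raise ValueError("need at least one item")
    hits = sum(1 for correct, incorrect in pairs if correct > incorrect)
    return hits / len(pairs)


# Hypothetical scores for four test items (not results from the paper).
example = [(0.91, 0.12), (0.75, 0.80), (0.66, 0.30), (0.58, 0.41)]
print(pairwise_accuracy(example))  # 0.75
```

Ties are scored as failures here. This is a deliberate choice for the sketch: a model that assigns both sentences the same score has not discriminated between them.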
