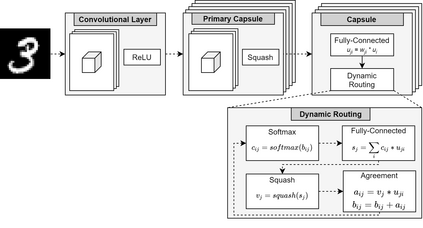

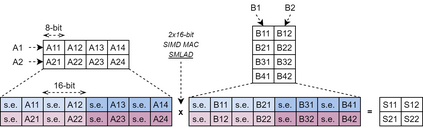

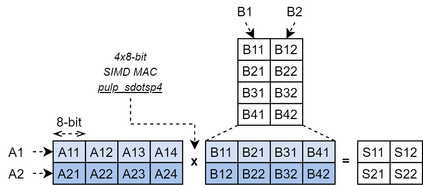

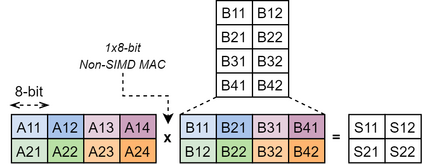

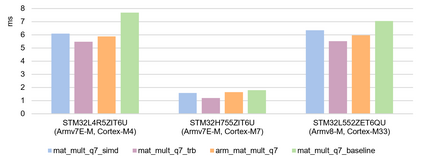

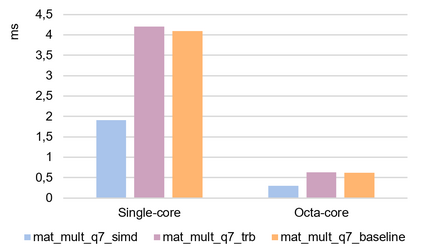

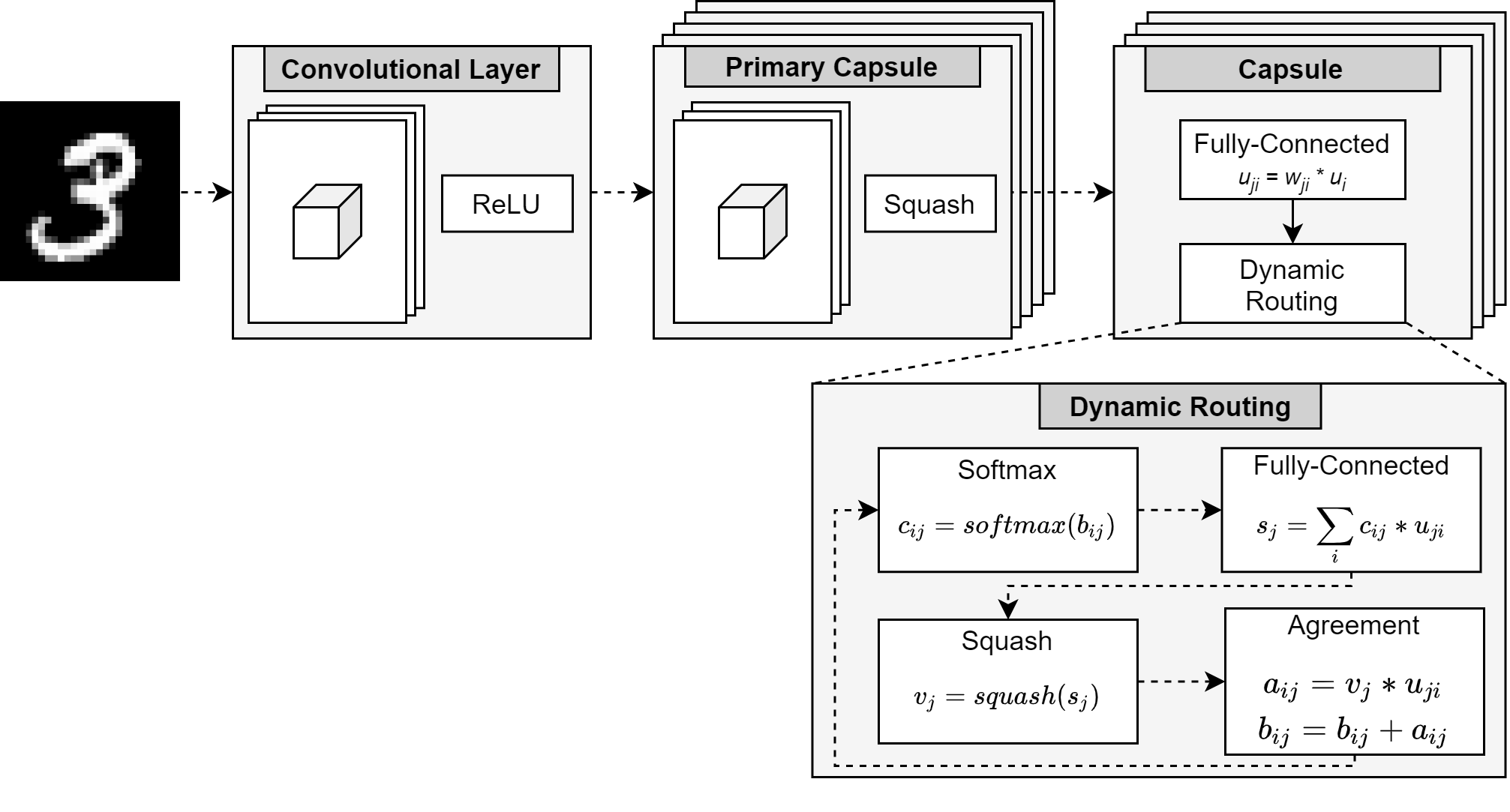

Capsule networks (CapsNets) are an emerging trend in image processing. In contrast to convolutional neural networks, CapsNets are not vulnerable to object deformation, because the relative spatial information of objects is preserved across the network. However, their complexity, which stems mainly from the capsule structure and the dynamic routing mechanism, makes it nearly impractical to deploy a CapsNet, in its original form, on a resource-constrained device powered by a small microcontroller (MCU). In an era where intelligence is rapidly shifting from the cloud to the edge, this high complexity poses serious challenges to the adoption of CapsNets at the very edge. To tackle this issue, we present an API for the execution of quantized CapsNets on Cortex-M and RISC-V MCUs. Our software kernels extend Arm CMSIS-NN and RISC-V PULP-NN to support capsule operations with 8-bit integers as operands. Alongside these kernels, we propose a framework to perform post-training quantization of a CapsNet. Results show a reduction in memory footprint of almost 75%, with a maximum accuracy loss of 1%. In terms of throughput, our software kernels for the Arm Cortex-M are at least 5.70x faster than a pre-quantized CapsNet running on an NVIDIA GTX 980 Ti graphics card. For RISC-V, the throughput gain increases to 26.28x and 56.91x for a single-core and an octa-core configuration, respectively.
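
To make the memory figure concrete, the following is a minimal sketch of post-training quantization of a weight tensor to 8-bit integers. It assumes a symmetric, per-tensor scheme with a single floating-point scale; this scheme, the function names `quantize_int8` and `dequantize`, and the sample weights are illustrative assumptions, not the framework or kernels described in the abstract. The sketch only shows why moving from 32-bit floats to 8-bit integers shrinks the weight payload by a factor of four.

```python
from array import array

FP32_BYTES = 4  # size of one 32-bit float weight
INT8_BYTES = 1  # size of one 8-bit integer weight


def quantize_int8(values):
    """Symmetric per-tensor quantization to int8 (assumed scheme, for illustration)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the signed 8-bit range.
    q = array("b", (max(-128, min(127, round(v / scale))) for v in values))
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the int8 representation."""
    return [x * scale for x in q]


# Hypothetical weights standing in for a trained layer.
weights = [0.5, -1.0, 0.25, 0.0, 0.75]
q_weights, scale = quantize_int8(weights)

# Payload reduction when each weight shrinks from 4 bytes to 1 byte.
reduction = 1.0 - (INT8_BYTES * len(weights)) / (FP32_BYTES * len(weights))
# Worst-case rounding error introduced by quantization for this tensor.
max_error = max(abs(a - b) for a, b in zip(weights, dequantize(q_weights, scale)))

print(f"payload reduction: {reduction:.0%}, max abs error: {max_error:.5f}")
```

The weight payload alone shrinks by exactly 75% in this sketch. Any quantization parameters stored next to the weights, such as the scale used here, would pull the total slightly below that figure, which may be why the reported reduction is "almost 75%".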
