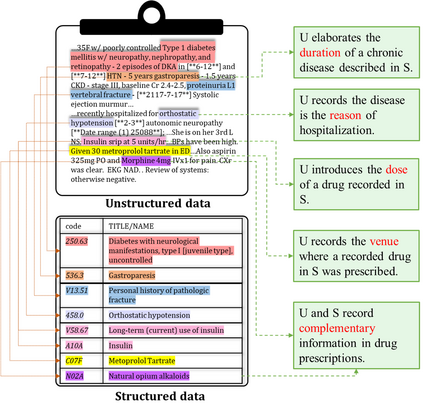

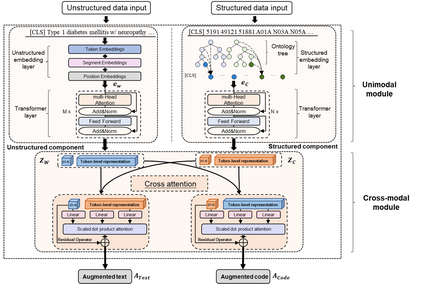

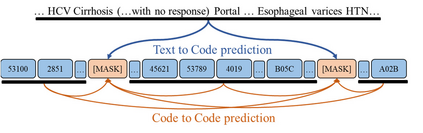

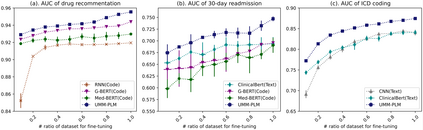

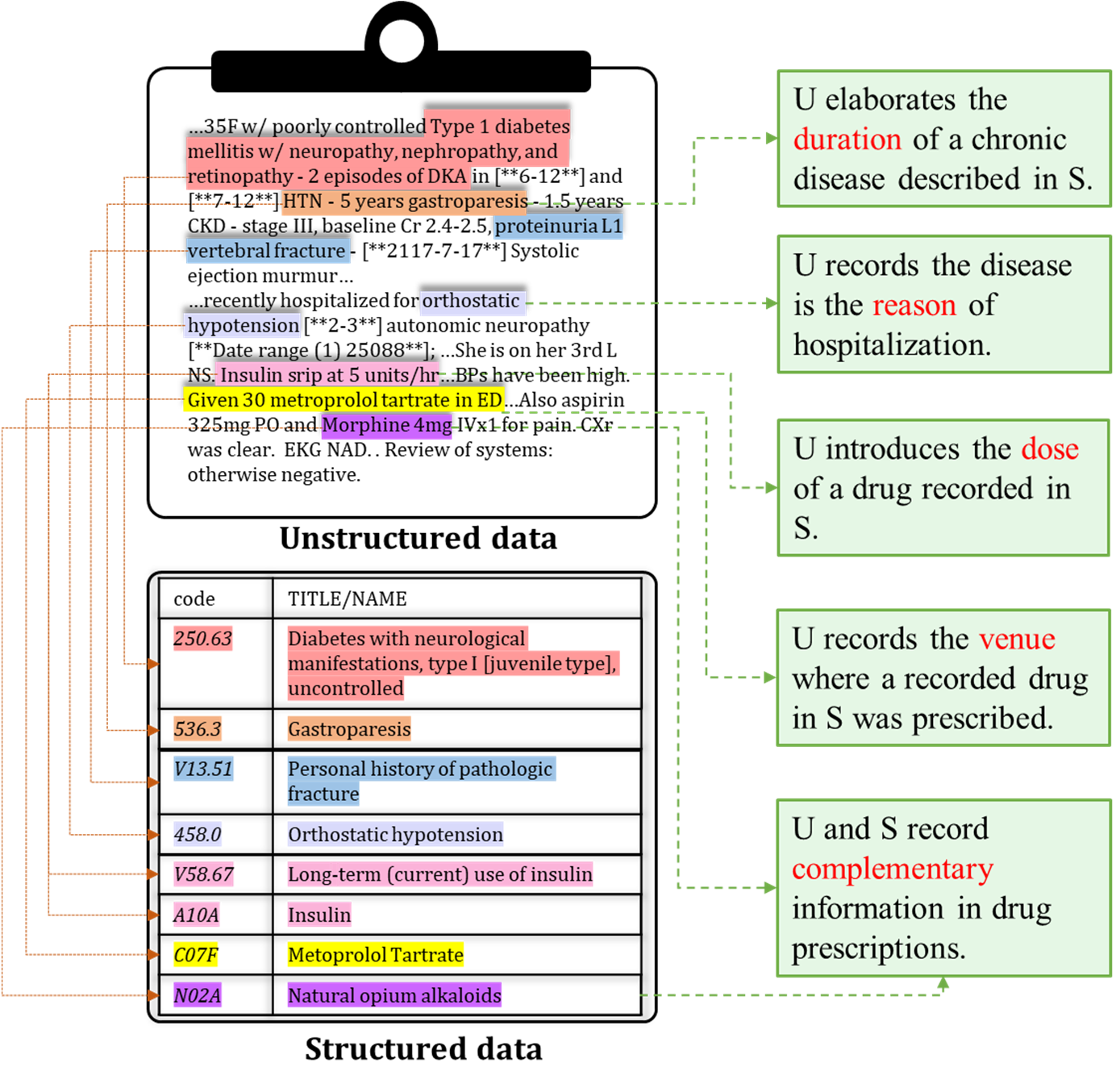

The massive amount of electronic health records (EHRs) has created enormous potential for improving healthcare. Within EHRs, structured (coded) data and unstructured data (clinical narratives) are two important textual modalities. They do not exist in isolation and can complement each other in many real-life clinical scenarios. Most existing studies in medical informatics, however, either focus on a single modality or concatenate data from different modalities in a naive way, which ignores the interactions between them. To address these issues, we propose a Unified Medical Multimodal Pre-trained Language Model, named UMM-PLM, to jointly learn enhanced representations from both structured and unstructured EHRs. In UMM-PLM, a unimodal information extraction module, built from two Transformer-based components, learns representative characteristics from each data modality separately. A cross-modal module is then introduced to model the interactions between the two modalities. We pre-trained the model on a large EHR dataset containing both structured and unstructured data, and verified its effectiveness through extensive experiments on three downstream clinical tasks: medication recommendation, 30-day readmission prediction, and ICD coding. The results demonstrate the effectiveness of UMM-PLM compared with benchmark methods and state-of-the-art baselines. Further analyses show that UMM-PLM can effectively integrate multimodal textual information and potentially provide more comprehensive interpretations for clinical decision-making.
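The abstract does not specify how the cross-modal module is built. As a rough illustration of how the outputs of two unimodal encoders could interact, the sketch below uses plain scaled dot-product cross-attention with a residual connection. This choice, the function names (`cross_attend`, `softmax`, `dot`), and the toy embeddings are all assumptions made for illustration, not the authors' implementation.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross_attend(queries, keys_values):
    """Let each vector of one modality attend over all vectors of the other.

    Hypothetical stand-in for a cross-modal module: scaled dot-product
    attention followed by a residual connection. No learned projections.
    """
    d = len(queries[0])
    fused = []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys_values]
        weights = softmax(scores)
        # Attention-weighted average of the other modality's vectors.
        context = [
            sum(w * v[i] for w, v in zip(weights, keys_values))
            for i in range(d)
        ]
        # Residual connection keeps the original unimodal signal.
        fused.append([qi + ci for qi, ci in zip(q, context)])
    return fused


# Toy embeddings standing in for the outputs of the two unimodal encoders
# (assumed values; real encoders would be Transformer-based).
code_vecs = [[1.0, 0.0], [0.0, 1.0]]              # structured (coded) data
note_vecs = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]  # clinical-note tokens

code_fused = cross_attend(code_vecs, note_vecs)  # codes attend to notes
note_fused = cross_attend(note_vecs, code_vecs)  # notes attend to codes
```

Running attention in both directions gives each modality a representation informed by the other, which simple concatenation of the two inputs would not provide.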
