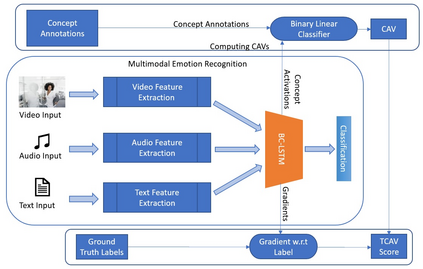

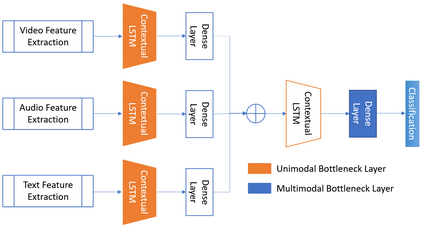

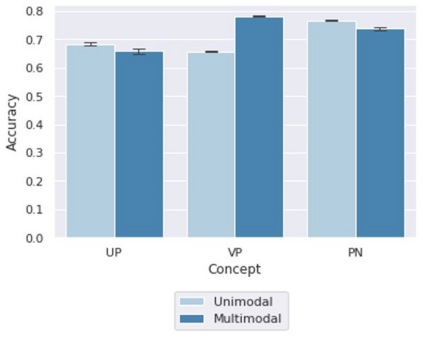

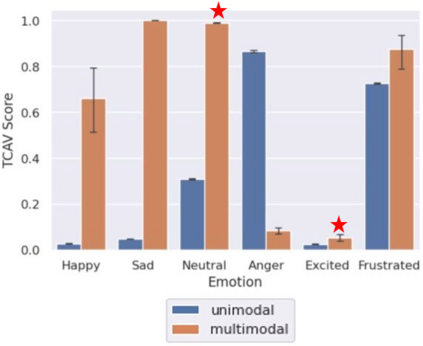

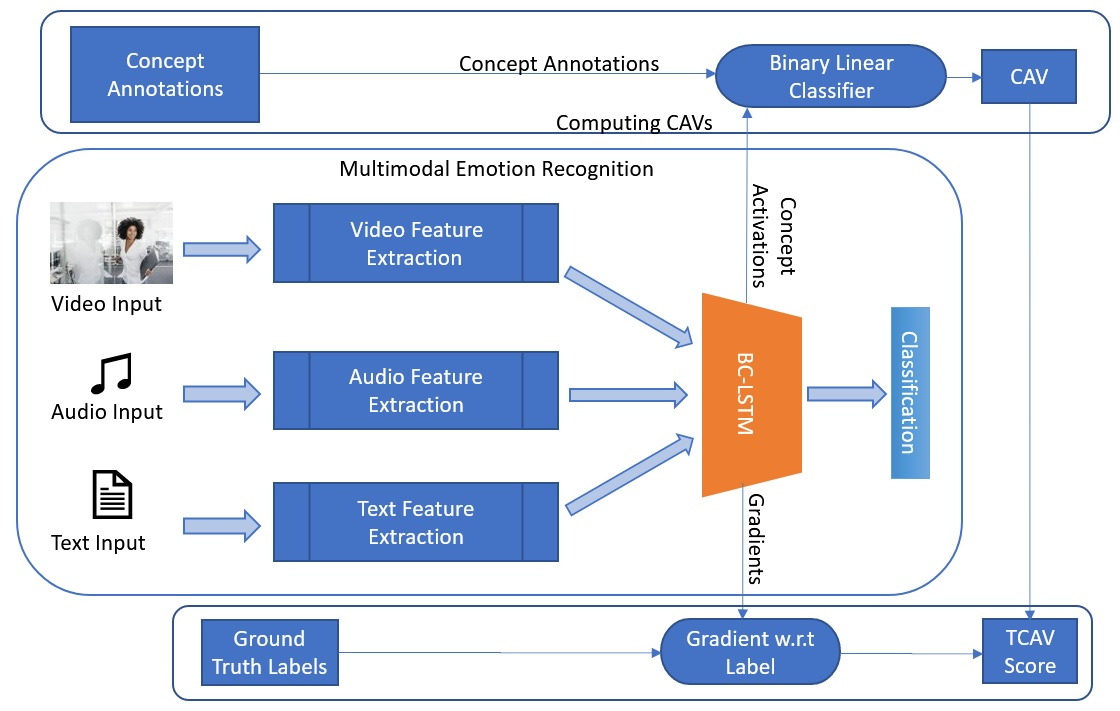

Multimodal Emotion Recognition refers to the classification of input video sequences into emotion labels based on multiple input modalities (usually video, audio and text). In recent years, deep neural networks have shown remarkable performance in recognizing human emotions and perform on par with humans on this task. Despite these advances, emotion recognition systems have yet to be adopted in real-world settings because their reasoning and decision-making processes remain opaque. Most research in this field proposes novel architectures to improve performance on this task, with only a few attempts at explaining these models' decisions. In this paper, we address the interpretability of neural networks for emotion recognition using Concept Activation Vectors (CAVs). To analyse the model's latent space, we define human-understandable concepts specific to Emotion AI and map them to the widely used IEMOCAP multimodal database. We then evaluate the influence of our proposed concepts at multiple layers of the Bi-directional Contextual LSTM (BC-LSTM) network to show that the reasoning process of neural networks for emotion recognition can be represented using human-understandable concepts. Finally, we perform hypothesis testing on our proposed concepts to show that they are significant for the interpretability of this task.
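For readers unfamiliar with CAVs, the sketch below illustrates the generic TCAV recipe (Kim et al., 2018) on synthetic data; it is not the authors' implementation. A linear classifier separates a layer's activations on concept examples from activations on random examples, and its unit-norm weight vector is the CAV. The TCAV score is the fraction of inputs whose class-logit gradient (with respect to that layer) points along the CAV, and significance is checked by comparing scores from several concept CAVs against CAVs trained on random-vs-random sets. Assumptions: NumPy only; activations and gradients are synthetic stand-ins for BC-LSTM layer outputs; logistic regression fit by plain gradient descent replaces whatever linear classifier the paper uses; only the Welch t-statistic is computed (a p-value would come from e.g. `scipy.stats.ttest_ind(..., equal_var=False)`). The helper names `train_cav`, `tcav_score`, `welch_t` and `run_demo` are hypothetical.

```python
import numpy as np


def train_cav(concept_acts, random_acts, lr=0.1, epochs=500, seed=0):
    """Fit logistic regression (concept=1, random=0) by gradient descent and
    return the unit-norm weight vector, i.e. the Concept Activation Vector."""
    rng = np.random.default_rng(seed)
    X = np.vstack([concept_acts, random_acts])
    y = np.concatenate([np.ones(len(concept_acts)), np.zeros(len(random_acts))])
    w = rng.normal(scale=0.01, size=X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(concept)
        err = p - y                              # gradient of log-loss w.r.t. logits
        w -= lr * X.T @ err / len(y)
        b -= lr * err.mean()
    return w / np.linalg.norm(w)


def tcav_score(grads, cav):
    """Fraction of inputs whose logit gradient has a positive component along the CAV."""
    return float(np.mean(grads @ cav > 0))


def welch_t(a, b):
    """Welch's t-statistic for the difference in means of two score samples."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    se = np.sqrt(a.var(ddof=1) / len(a) + b.var(ddof=1) / len(b))
    if se == 0.0:
        raise ValueError("both samples have zero variance")
    return float((a.mean() - b.mean()) / se)


def run_demo(n_runs=10, d=16, seed=42):
    rng = np.random.default_rng(seed)
    # Hypothetical layer activations: concept examples are shifted along axis 0.
    concept_acts = rng.normal(size=(50, d))
    concept_acts[:, 0] += 3.0
    # Hypothetical gradients of one emotion logit w.r.t. the same layer, biased along axis 0.
    grads = rng.normal(size=(100, d))
    grads[:, 0] += 2.0
    concept_scores, random_scores = [], []
    for i in range(n_runs):
        # Concept CAV: concept examples vs. a fresh random set on each run.
        cav = train_cav(concept_acts, rng.normal(size=(50, d)), seed=i)
        concept_scores.append(tcav_score(grads, cav))
        # Baseline CAV: two random sets, used as the null distribution.
        rand_cav = train_cav(rng.normal(size=(50, d)), rng.normal(size=(50, d)), seed=i)
        random_scores.append(tcav_score(grads, rand_cav))
    return concept_scores, random_scores, welch_t(concept_scores, random_scores)


if __name__ == "__main__":
    c, r, t = run_demo()
    print(f"mean concept TCAV={np.mean(c):.2f}, mean random TCAV={np.mean(r):.2f}, t={t:.1f}")
```

With this synthetic setup the concept CAV should score well above the random baseline, giving a large positive t-statistic; a concept whose scores are indistinguishable from the random CAVs would be rejected as not meaningful for the model's decision.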

翻译:多式情感认知是指将输入视频序列分类为基于多种输入模式(通常是视频、音频和文字)的情感标签。近年来,深神经网络在识别人类情感方面表现出了显著的成绩,并且与人类在这项任务方面的表现相当。尽管最近在这一领域取得了进步,但由于其推理和决策过程的模糊性质,情感识别系统尚未被现实世界设置所接受。这个领域的研究大多涉及改进这项任务绩效的新结构,很少尝试为这些模型的决定提供解释。在本文件中,我们讨论了在使用概念动因矢量(CAVs)来识别情感背景下神经网络的可解释性问题。为了分析模型的潜在空间,我们定义了情感AI所特有的人类可理解概念,并将这些概念映射到广泛使用的 IEMOCAP 多式联运数据库中。然后,我们评估了我们提出的概念在双向背景LSTM(BC-LSTM)网络多个层次上的影响,以显示这些模型的逻辑网络在通过概念识别情感认同的神经网络的推理过程中,我们最后可以代表着这种可理解性概念。