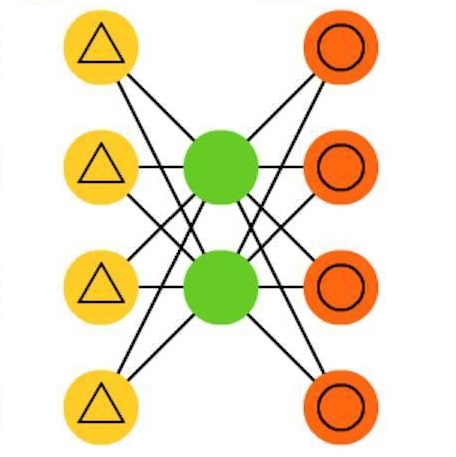

Traditional physics-informed neural networks (PINNs) do not always satisfy physics-based constraints, especially when those constraints include differential operators. Instead, they minimize constraint violations in a soft manner. Strictly satisfying differential-algebraic equations (DAEs), as a way of embedding domain knowledge and first principles in data-driven models, is generally challenging. This is because data-driven models treat the original functions as black boxes whose derivatives can only be obtained after the functions are evaluated. We introduce DAE-HardNet, a physics-constrained (rather than merely physics-informed) neural network that learns both the functions and their derivatives simultaneously while enforcing algebraic as well as differential constraints. It does so by projecting model predictions onto the constraint manifold with a differentiable projection layer. We apply DAE-HardNet to several systems and test problems governed by DAEs, including the dynamic Lotka-Volterra predator-prey system and transient heat conduction. We also demonstrate its ability to estimate unknown parameters on a parameter estimation problem. Compared with multilayer perceptrons (MLPs) and PINNs, DAE-HardNet reduces the physics loss by orders of magnitude while maintaining prediction accuracy. Learning the derivatives has the added benefit of improving the constrained learning of the backbone neural network that precedes the projection layer. For specific problems, this suggests that the projection layer can be bypassed for faster inference. The current implementation and code are available at https://github.com/SOULS-TAMU/DAE-HardNet.
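The abstract does not spell out how the projection works, so what follows is a minimal sketch under stated assumptions, not DAE-HardNet's actual implementation. It assumes the backbone network outputs the states `x`, `y` and their time derivatives as separate quantities at a single time point, so the Lotka-Volterra equations become algebraic constraints `g(z) = 0` on `z = [x, y, dx/dt, dy/dt]`. The parameter values and the functions `residual`, `jacobian`, and `project` are hypothetical. Each iteration maps the raw prediction `z_hat` onto the constraint linearized at the current iterate, `z = z_hat - J^T (J J^T)^{-1} [g(z_k) + J (z_hat - z_k)]`. The sketch uses NumPy for readability. To use it as a trainable layer, the same operations would be written in an autodiff framework such as PyTorch or JAX so that gradients flow through the linear solve.

```python
import numpy as np

# Hypothetical Lotka-Volterra parameters, chosen only for illustration.
ALPHA, BETA, DELTA, GAMMA = 1.0, 0.5, 0.2, 0.8


def residual(z: np.ndarray) -> np.ndarray:
    """Constraint residual g(z) for z = [x, y, dx/dt, dy/dt] at one time point.

    With derivatives treated as extra network outputs (an assumption of
    this sketch), the ODEs dx/dt = alpha*x - beta*x*y and
    dy/dt = delta*x*y - gamma*y become algebraic equations in z.
    """
    x, y, dx, dy = z
    return np.array([
        dx - (ALPHA * x - BETA * x * y),
        dy - (DELTA * x * y - GAMMA * y),
    ])


def jacobian(z: np.ndarray) -> np.ndarray:
    """Analytic Jacobian dg/dz with shape (2, 4)."""
    x, y, _, _ = z
    return np.array([
        [-(ALPHA - BETA * y), BETA * x, 1.0, 0.0],
        [-DELTA * y, -(DELTA * x - GAMMA), 0.0, 1.0],
    ])


def project(z_hat, iters: int = 50, tol: float = 1e-12) -> np.ndarray:
    """Map a raw prediction z_hat to a nearby point with g(z) = 0.

    Each iteration projects z_hat (Euclidean norm) onto the constraint
    linearized at the current iterate z_k:  g(z_k) + J_k (z - z_k) = 0.
    A fixed point satisfies g(z) = 0, and z - z_hat lies in the row space
    of J(z), the first-order condition for the closest feasible point.
    """
    z_hat = np.asarray(z_hat, dtype=float)
    z = z_hat.copy()
    for _ in range(iters):
        J = jacobian(z)
        # Right-hand side of the linearized constraint, evaluated at z_hat.
        r = residual(z) + J @ (z_hat - z)
        # Lagrange multipliers of the minimum-norm correction.
        lam = np.linalg.solve(J @ J.T, r)
        z_new = z_hat - J.T @ lam
        if np.linalg.norm(z_new - z) < tol:
            return z_new
        z = z_new
    return z


if __name__ == "__main__":
    z_hat = np.array([1.0, 2.0, 0.3, -1.0])  # made-up raw backbone output
    z = project(z_hat)
    print("residual before:", residual(z_hat))
    print("residual after: ", residual(z))
    print("projected z:    ", z)
```

Because the derivative outputs enter `J` as an identity block, `J @ J.T` is always positive definite in this example, so the solve is well posed. A general DAE would need to handle rank-deficient constraint Jacobians.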

翻译:传统的物理信息神经网络(PINNs)并不总能满足基于物理的约束,尤其是当约束包含微分算子时。相反,它们以软方式最小化约束违反。在数据驱动模型中严格满足微分代数方程(DAEs)以嵌入领域知识和第一性原理通常具有挑战性。这是因为数据驱动模型将原始函数视为黑盒,其导数只能在函数求值后获得。我们提出了DAE-HardNet,这是一种物理约束(而不仅仅是物理信息)神经网络,它同时学习函数及其导数,并强制实施代数约束和微分约束。这是通过使用可微分投影层将模型预测投影到约束流形上来实现的。我们将DAE-HardNet应用于多个由DAEs控制的系统和测试问题,包括动态Lotka-Volterra捕食者-猎物系统和瞬态热传导。我们还展示了DAE-HardNet通过参数估计问题估计未知参数的能力。与多层感知器(MLPs)和PINNs相比,DAE-HardNet在保持预测精度的同时,将物理损失降低了数个数量级。它还具有学习导数的额外优势,从而改善了投影层之前骨干神经网络的约束学习。对于特定问题,这表明可以绕过投影层以实现更快的推理。当前实现和代码可在https://github.com/SOULS-TAMU/DAE-HardNet获取。