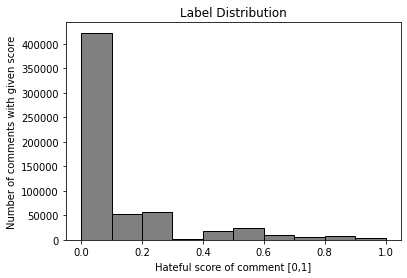

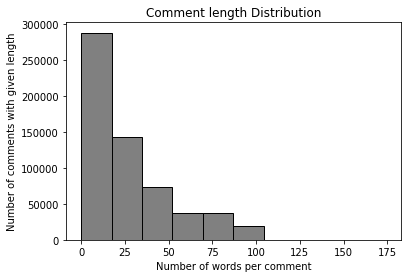

This document summarizes our results for the NLP lecture at ETH in the spring semester of 2021. In this work, a BERT-based neural network model (Devlin et al., 2018) is applied to the JIGSAW data set (Jigsaw/Conversation AI, 2019) in order to create a model that identifies hateful and toxic comments (strictly separated from offensive language) on English-language online social platforms, in this case Twitter. Three other neural network architectures and a GPT-2 model (Radford et al., 2019) are also applied to the provided data set in order to compare these different models. The trained BERT model is then applied to two other data sets to evaluate its generalisation power: another Twitter data set (Tom Davidson, 2017) (Davidson et al., 2017) and the HASOC 2019 data set (Thomas Mandl, 2019) (Mandl et al., 2019), which includes both Twitter and Facebook comments; we focus on the English HASOC 2019 data. In addition, we show that fine-tuning the trained BERT model on these two data sets, using different transfer learning scenarios that retrain some or all layers, improves the predictive scores compared to simply applying the model pre-trained on the JIGSAW data set. With our results, we obtain precisions from 64% to around 90% while still achieving acceptable recall values of at least the low 60s (in percent), showing that BERT is suitable for real use cases on social platforms.
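Since the results are reported as precision and recall for the hateful/toxic class, a minimal sketch of how these two scores are computed may help in reading the numbers. The function name `precision_recall` and the toy labels below are illustrative assumptions; they are not taken from the paper's code or data.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the class labelled `positive`.

    Hypothetical helper for illustration only; not the authors' evaluation code.
    """
    pairs = list(zip(y_true, y_pred))
    # True positives: predicted positive and actually positive.
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    # False positives: predicted positive but actually negative.
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    # False negatives: actually positive but predicted negative.
    fn = sum(1 for t, p in pairs if p != positive and t == positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up labels: 1 = hateful/toxic, 0 = not hateful/toxic.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

Precision is the share of flagged comments that really are hateful or toxic, while recall is the share of hateful or toxic comments that get flagged, which is why the two are reported together.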
