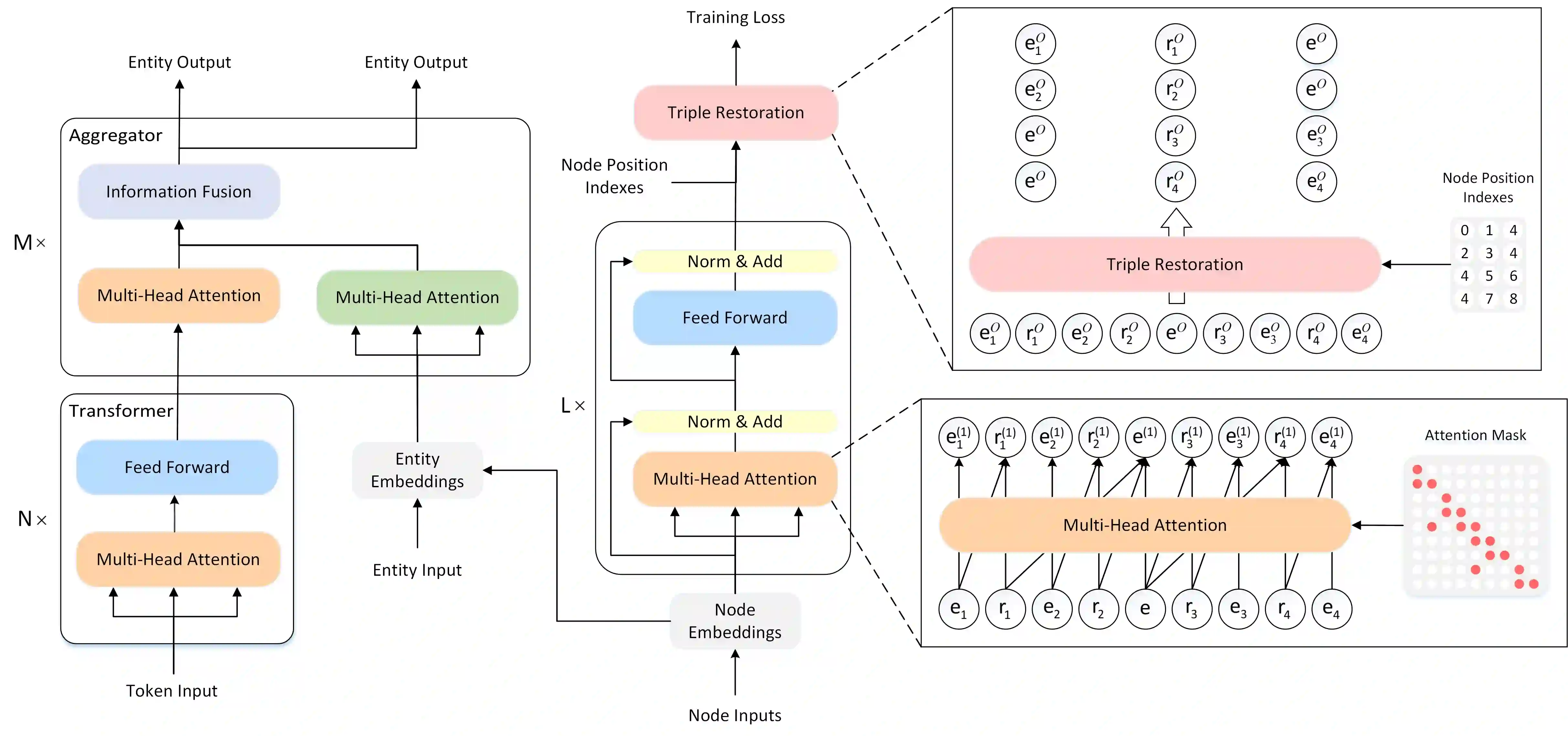

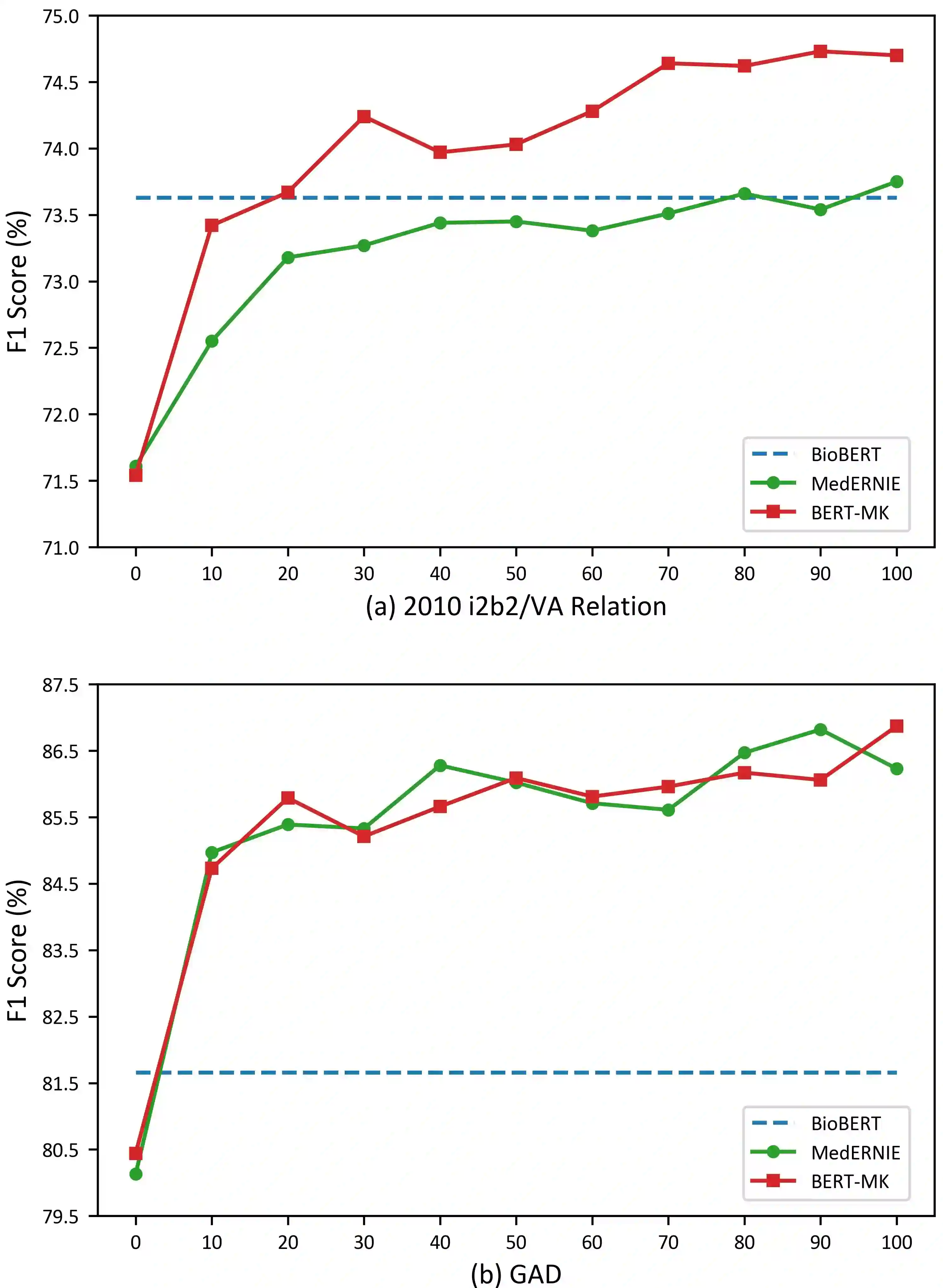

Complex node interactions are common in knowledge graphs (KGs), and these interactions carry rich knowledge. However, traditional methods usually treat a single triple as the training unit during knowledge representation learning (KRL), which neglects the contextual information of nodes in KGs. We generalize the modeling unit to a very general form that, in principle, supports any subgraph extracted from the KG. These subgraphs are fed into a novel transformer-based model to learn knowledge embeddings. To broaden the scenarios in which this knowledge can be used, we build on pre-trained language models to construct a model that incorporates the learned knowledge representations. Experimental results show that our model achieves state-of-the-art performance on several medical NLP tasks, and its improvement over TransE indicates that our KRL method effectively captures graph contextualized information.
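To make the move from triple-level to subgraph-level training units concrete, here is a minimal Python sketch. It extracts the one-hop neighborhood of an entity and serializes it into a node sequence plus an adjacency mask, which a transformer could use to restrict self-attention to graph neighbors. Several details are assumptions rather than details from the abstract: the subgraph is taken to be the one-hop neighborhood, each relation occurrence is turned into its own node, and the function names and medical triples are hypothetical.

```python
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]

# Hypothetical toy medical KG; entity and relation names are illustrative only.
TRIPLES: List[Triple] = [
    ("aspirin", "treats", "headache"),
    ("aspirin", "may_cause", "gastric_bleeding"),
    ("ibuprofen", "treats", "headache"),
]


def one_hop_subgraph(triples: List[Triple], center: str) -> List[Triple]:
    """Keep only the triples in which `center` appears as head or tail."""
    return [t for t in triples if center in (t[0], t[2])]


def serialize_subgraph(triples: List[Triple]) -> Tuple[List[str], List[List[bool]]]:
    """Flatten a subgraph into a node sequence and a symmetric adjacency mask.

    Assumption: entities are deduplicated, while every relation occurrence
    becomes its own node, so a triple (h, r, t) contributes edges h-r and r-t.
    Each node is also connected to itself so no attention row is fully masked.
    """
    nodes: List[str] = []
    entity_index: Dict[str, int] = {}

    def entity_id(name: str) -> int:
        if name not in entity_index:
            entity_index[name] = len(nodes)
            nodes.append(name)
        return entity_index[name]

    edges: List[Tuple[int, int]] = []
    for head, rel, tail in triples:
        h = entity_id(head)
        r = len(nodes)        # position of this relation occurrence
        nodes.append(rel)
        t = entity_id(tail)
        edges += [(h, r), (r, t)]

    n = len(nodes)
    mask = [[i == j for j in range(n)] for i in range(n)]  # self-connections
    for a, b in edges:
        mask[a][b] = mask[b][a] = True
    return nodes, mask


def additive_mask(mask: List[List[bool]]) -> List[List[float]]:
    """Convert a boolean mask to the additive form added to attention logits."""
    return [[0.0 if ok else float("-inf") for ok in row] for row in mask]


if __name__ == "__main__":
    nodes, mask = serialize_subgraph(one_hop_subgraph(TRIPLES, "aspirin"))
    print(nodes)
    for name, row in zip(nodes, mask):
        print(f"{name:>16}", "".join("1" if x else "." for x in row))
```

In this sketch, adding the additive mask to the attention logits before the softmax drives the weights of non-adjacent node pairs to zero. Each node then aggregates information only from its graph neighbors, and stacking layers widens what each node can see across the subgraph. This is one plausible way to feed a subgraph to a transformer, not necessarily the authors' exact design.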
