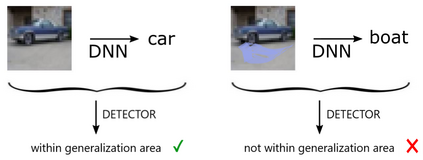

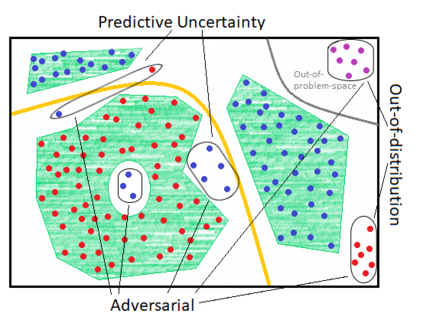

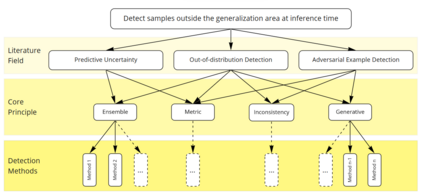

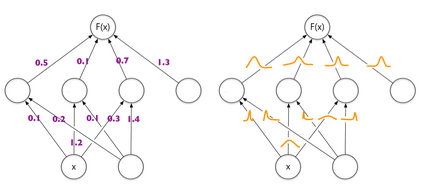

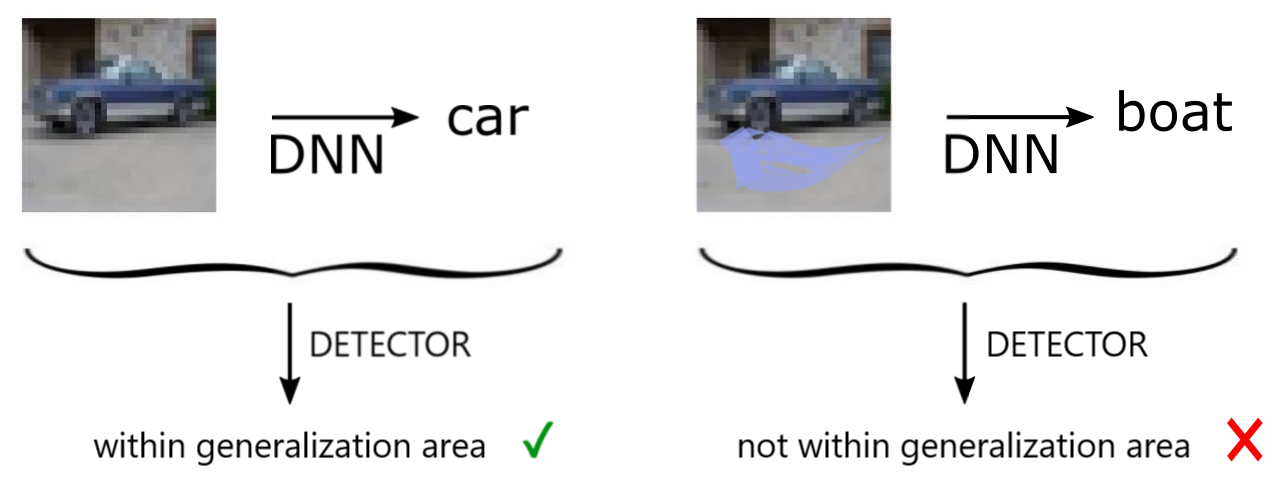

Deep Neural Networks (DNNs) achieve state-of-the-art performance in numerous applications. However, it is difficult to tell beforehand whether a DNN receiving an input will deliver the correct output, since its decision criteria are usually nontransparent. A DNN delivers the correct output if the input lies within the area enclosed by its generalization envelope; in this case, the information contained in the input sample is processed reasonably by the network. Assessing at inference time whether a DNN generalizes correctly is therefore of great practical importance. Currently, approaches to this goal are investigated in different problem set-ups, largely independently of one another, which has led to three main research and literature fields: predictive uncertainty, out-of-distribution detection, and adversarial example detection. This survey connects the three fields within the larger framework of investigating the generalization performance of machine learning methods, and of DNNs in particular. We underline their common ground, point out the most promising approaches, and give a structured overview of methods that provide, at inference time, a means to establish whether the current input is within the generalization envelope of a DNN.
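To make the idea of an inference-time check concrete, the following is a minimal sketch of one widely used baseline from the predictive-uncertainty and out-of-distribution literature: thresholding the maximum softmax probability of a classifier's output. It is not presented as the survey's own method. The function names (`softmax`, `predictive_entropy`, `inside_envelope`), the example logits, and the threshold of 0.7 are hypothetical; in practice the threshold would be tuned on held-out data.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predictive_entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of a categorical predictive distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def inside_envelope(logits: List[float], threshold: float = 0.7) -> Tuple[bool, float, float]:
    """Hypothetical check: accept the prediction only if the maximum softmax
    probability (MSP) reaches `threshold`, an assumed value for illustration.
    Returns (accepted, msp, entropy)."""
    probs = softmax(logits)
    msp = max(probs)
    return msp >= threshold, msp, predictive_entropy(probs)


if __name__ == "__main__":
    # Made-up logits: one clearly peaked output, one nearly uniform output.
    examples = [("confident", [8.0, 0.5, 0.2]), ("ambiguous", [1.0, 0.9, 1.1])]
    for name, logits in examples:
        ok, msp, ent = inside_envelope(logits)
        print(f"{name}: accept={ok} msp={msp:.3f} entropy={ent:.3f}")
```

A peaked output yields a high MSP and low entropy and is accepted, while a nearly uniform output is flagged as possibly outside the generalization envelope. Such confidence-based scores are known to be fooled by adversarial examples, which is one reason the three fields named above are studied together.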

翻译:深神经网络(DNN)在许多应用中取得了最先进的业绩。然而,很难事先判断接受输入的DNN是否会提供正确的产出,因为其决定标准通常不透明。如果输入是在其概括性信封所包的区域内,DNN提供正确的产出。在这种情况下,输入样本中所含的信息由网络合理处理。如果DNN的概观正确的话,评估推论时间就具有很大的实际重要性。目前,实现该目标的方法是在不同的问题设置中调查的,而不是相互独立调查,导致三个主要研究和文献领域:预测不确定性、分配外探测和对抗性实例探测。这项调查将三大领域连接到调查机器学习方法普遍性能的大框架内,特别是DNN。我们强调共同点,指出最有希望的方法,并有条理地概述提供推论时间的手段,以确定目前的输入是否在DNN的概括性能范围内。