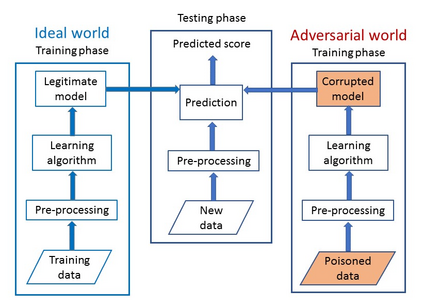

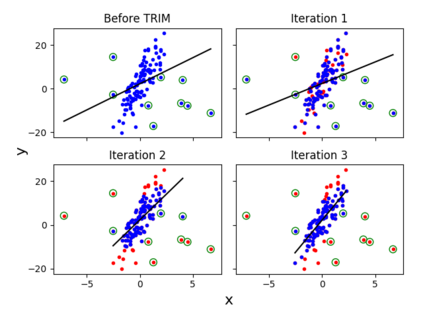

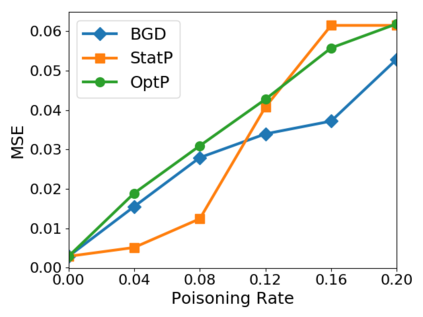

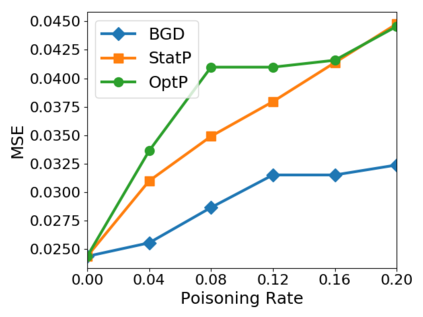

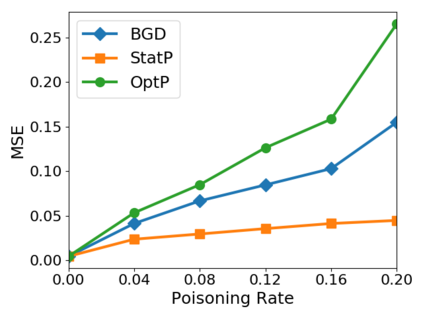

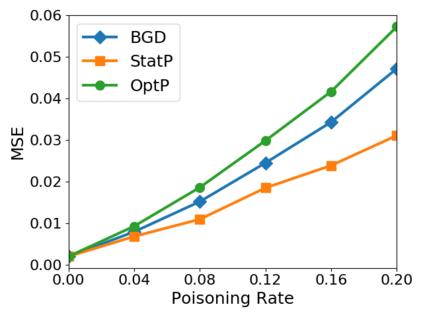

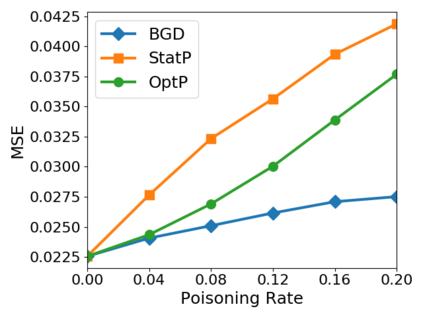

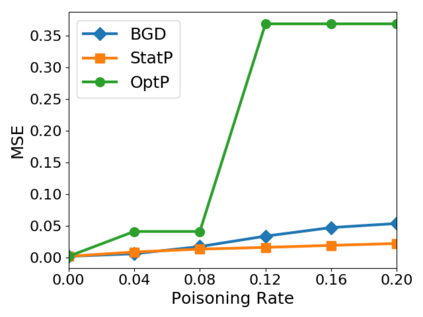

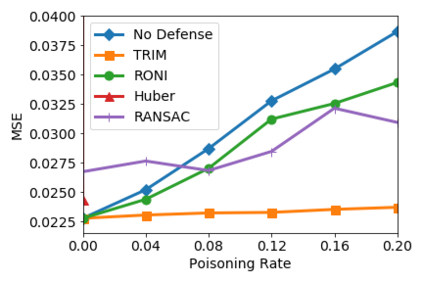

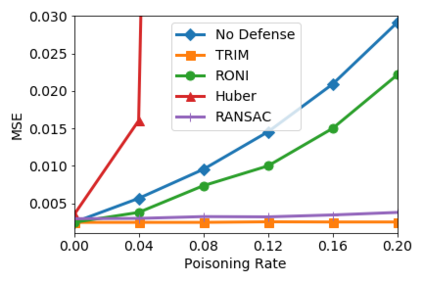

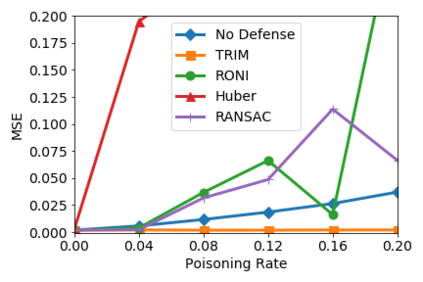

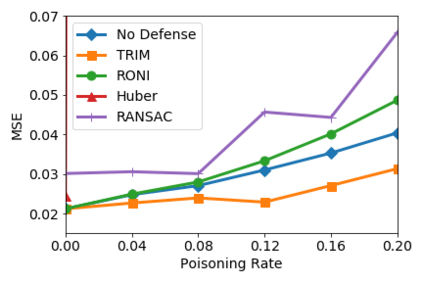

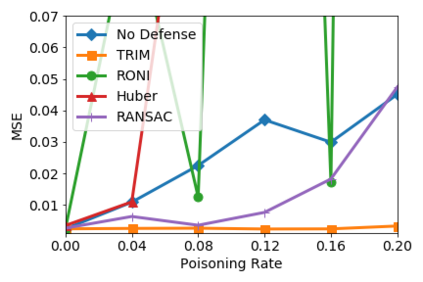

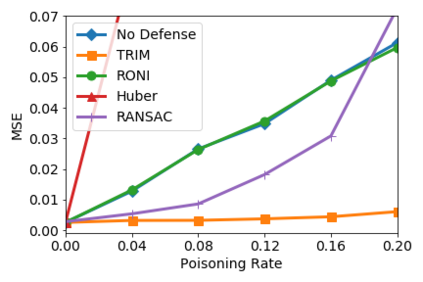

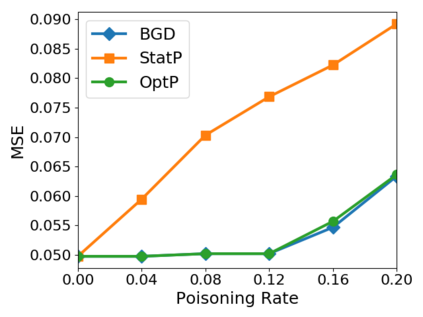

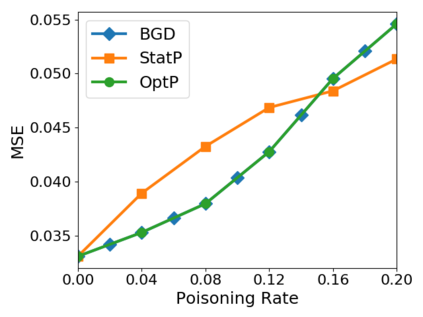

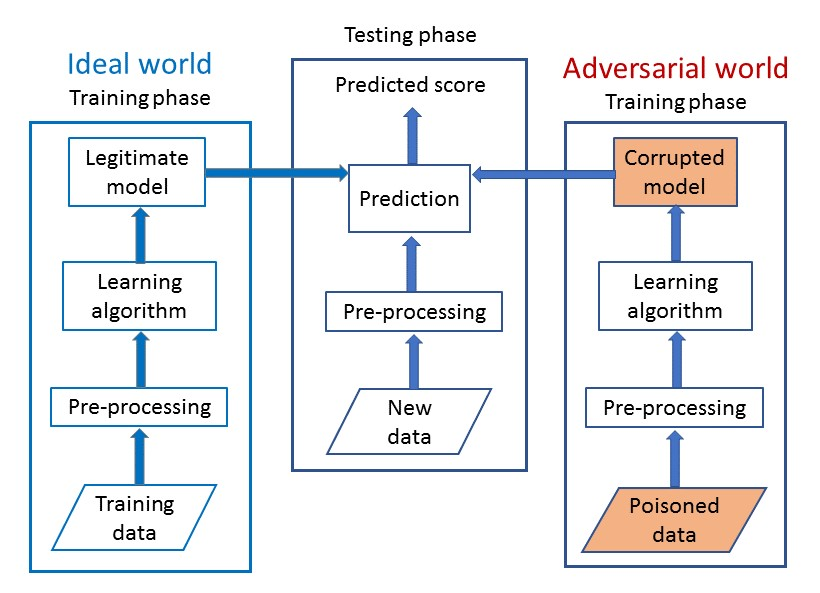

As machine learning becomes widely used for automated decisions, attackers have strong incentives to manipulate the results and models generated by machine learning algorithms. In this paper, we perform the first systematic study of poisoning attacks and their countermeasures for linear regression models. In poisoning attacks, attackers deliberately influence the training data to manipulate the results of a predictive model. We propose a theoretically grounded optimization framework specifically designed for linear regression and demonstrate its effectiveness on a range of datasets and models. We also introduce a fast statistical attack that requires limited knowledge of the training process. Finally, we design a new principled defense method that is highly resilient against all poisoning attacks. We provide formal guarantees about its convergence and an upper bound on the effect of poisoning attacks when the defense is deployed. We extensively evaluate our attacks and defenses on three realistic datasets from the health care, loan assessment, and real estate domains.
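To make the idea of poisoning a linear regression model concrete, here is a minimal sketch in pure Python. It is not the paper's optimization-based attack or its defense. The "attack" simply places a few hypothetical high-leverage points (large x, low y) by hand, and the "defense" is a generic trimmed least-squares loop that alternately fits the model and keeps only the points with the smallest squared residuals. The helper names `fit_ols` and `trimmed_fit` are illustrative, and the sketch assumes the defender knows how many clean points to keep (`n_keep`).

```python
import random


def fit_ols(points):
    """Closed-form ordinary least squares for y = w*x + b over (x, y) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    w = sxy / sxx
    return w, mean_y - w * mean_x


def trimmed_fit(points, n_keep, max_iters=50):
    """Generic trimmed least squares (an assumed defense, not the paper's):
    alternately fit the model and keep the n_keep points with the smallest
    squared residuals under the current fit, until the kept set stops changing."""
    w, b = fit_ols(points)  # start from the (possibly poisoned) full fit
    kept = None
    for _ in range(max_iters):
        order = sorted(
            range(len(points)),
            key=lambda i: (points[i][1] - (w * points[i][0] + b)) ** 2,
        )
        new_kept = frozenset(order[:n_keep])
        if new_kept == kept:  # kept subset unchanged: converged
            break
        kept = new_kept
        w, b = fit_ols([points[i] for i in kept])
    return w, b


# Clean data follow y = 2x + 1 with small uniform noise.
rng = random.Random(0)
clean = [(x, 2 * x + 1 + rng.uniform(-0.5, 0.5)) for x in range(20)]
# Hypothetical poisoning points chosen by hand: high x, low y.
poison = [(19, 0.0)] * 4
data = clean + poison

w_ols, _ = fit_ols(data)
w_trim, _ = trimmed_fit(data, n_keep=len(clean))
print(f"OLS slope on poisoned data: {w_ols:.2f}")
print(f"Trimmed-fit slope:          {w_trim:.2f}")
```

With about 17% of the training set poisoned, plain least squares pulls the slope far below the true value of 2, while the trimmed fit discards the high-residual points and recovers a slope close to 2. Trimming on residuals is only one family of robust defenses, and its success here depends on the assumed knowledge of `n_keep`.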


Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem