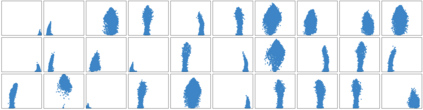

Temporal action detection (TAD) aims to determine the semantic label and the temporal boundaries of every action instance in an untrimmed video. It is a fundamental task in video understanding, and significant progress has been made on it. Previous methods, however, involve multiple stages or networks and rely on hand-designed rules or operations, which limits their efficiency and flexibility. Here, we construct an end-to-end framework for TAD built on the Transformer, termed TadTR, which predicts all action instances in parallel as a set of labels and temporal locations. TadTR adaptively extracts the temporal context needed for making action predictions by selectively attending to a number of snippets in a video. It greatly simplifies the TAD pipeline and runs much faster than previous detectors. Our method achieves state-of-the-art performance on HACS Segments and THUMOS14 and competitive performance on ActivityNet-1.3. Our code will be made available at https://github.com/xlliu7/TadTR.
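To make the idea of predicting "a set of labels and temporal locations" concrete, the following is a minimal sketch of how such a set of outputs could be turned into action instances. It is not taken from the TadTR code. The function name `decode_predictions`, the normalized (center, width) segment encoding, and the score threshold are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

# Hypothetical helper, not part of TadTR: decodes a set of per-query outputs.
def decode_predictions(
    class_probs: Sequence[Sequence[float]],
    segments: Sequence[Tuple[float, float]],
    duration: float,
    labels: Sequence[str],
    score_threshold: float = 0.5,
) -> List[Tuple[str, float, float, float]]:
    """Return (label, score, start_sec, end_sec) tuples, highest score first.

    Assumption: each segment is (center, width), normalized to [0, 1]
    relative to the video duration.
    """
    results = []
    for probs, (center, width) in zip(class_probs, segments):
        # Pick the most likely class for this prediction slot.
        best = max(range(len(probs)), key=probs.__getitem__)
        score = probs[best]
        if score < score_threshold:
            continue  # treat low-confidence slots as "no action"
        # Convert to seconds and clip to the video extent.
        start = max(0.0, (center - width / 2) * duration)
        end = min(duration, (center + width / 2) * duration)
        results.append((labels[best], score, start, end))
    return sorted(results, key=lambda r: r[1], reverse=True)


if __name__ == "__main__":
    detections = decode_predictions(
        class_probs=[[0.9, 0.1], [0.2, 0.3], [0.4, 0.6]],
        segments=[(0.25, 0.5), (0.5, 0.25), (0.875, 0.5)],
        duration=100.0,
        labels=["jump", "run"],
    )
    for label, score, start, end in detections:
        print(f"{label}: {score:.2f} [{start:.1f}s, {end:.1f}s]")
```

Because every slot is decoded independently, the whole set can be produced in one forward pass. This is the property the abstract contrasts with multi-stage pipelines.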
