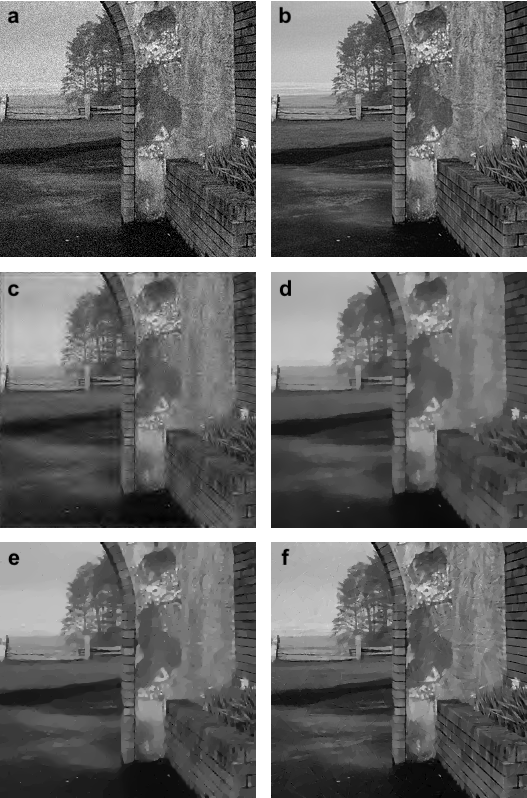

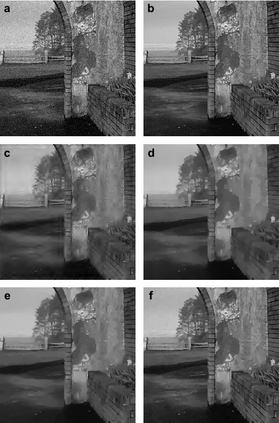

We present a unifying view of various statistical estimation techniques, including penalization, variational, and thresholding methods. These estimators are analyzed in the context of statistical linear inverse problems, with nonparametric regression, change point regression, and high-dimensional linear models as examples. Our approach reveals many seemingly unrelated estimation schemes as special instances of a general class of variational multiscale estimators, named MIND (MultIscale Nemirovskii–Dantzig). These estimators result from minimizing certain regularization functionals under convex constraints that can be interpreted as multiple statistical tests of local hypotheses. For computational purposes, we recast MIND as simpler unconstrained optimization problems via Lagrangian penalization and Fenchel duality. The performance of several MINDs is demonstrated on numerical examples.
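As an illustration, the constrained form described above can be written in the following notation. We introduce this notation here as an assumption; it is not taken from the paper:

$$\hat f \in \operatorname*{argmin}_f \; J(f) \quad \text{subject to} \quad \max_{w \in \mathcal W} \bigl|\langle \phi_w,\, Y - K f\rangle\bigr| \le q,$$

In this notation, $J$ is a regularization functional, $K$ is the forward operator of the linear inverse problem, $\{\phi_w\}_{w\in\mathcal W}$ is a family of test functions indexed by location and scale, and $q$ is a threshold. Each constraint then acts as a test of one local hypothesis.

The Python sketch below takes the Dantzig selector of Candès and Tao as one such instance for the high-dimensional linear model:

$$\min \|\beta\|_1 \quad \text{subject to} \quad \|X^\top(Y - X\beta)\|_\infty \le \lambda.$$

Treating it as an instance is our reading of "Dantzig" in the name, not code from the paper. The sketch recasts the problem as a linear program and solves it with `scipy.optimize.linprog`. The function name `dantzig_selector` is hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def dantzig_selector(X, y, lam):
    """Hypothetical helper: solve min ||beta||_1 s.t. ||X^T (y - X beta)||_inf <= lam.

    The l1 objective is linearized with auxiliary variables u >= |beta|,
    which gives a linear program in z = [beta, u].
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    p = X.shape[1]
    G = X.T @ X            # Gram matrix X^T X
    b = X.T @ y            # correlations X^T y of the data with each column
    I = np.eye(p)
    Z = np.zeros((p, p))

    # Objective: sum of u_j, which equals ||beta||_1 at the optimum.
    c = np.concatenate([np.zeros(p), np.ones(p)])

    # Inequality constraints A_ub @ z <= b_ub.
    A_ub = np.block([
        [I, -I],    #  beta - u <= 0
        [-I, -I],   # -beta - u <= 0
        [-G, Z],    #  X^T (y - X beta) <= lam
        [G, Z],     # -X^T (y - X beta) <= lam
    ])
    b_ub = np.concatenate([np.zeros(p), np.zeros(p), lam - b, lam + b])

    # beta is free; u is nonnegative (linprog's default bound is >= 0 for every variable).
    bounds = [(None, None)] * p + [(0, None)] * p
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p]


if __name__ == "__main__":
    # Orthonormal design X = I: compare against soft thresholding of y at level lam.
    y = np.array([3.0, -0.5, 1.2, -2.0])
    beta = dantzig_selector(np.eye(4), y, lam=1.0)
    soft = np.sign(y) * np.maximum(np.abs(y) - 1.0, 0.0)
    print(beta, soft)
```

With an orthonormal design ($X^\top X = I$), the constraint decouples coordinate-wise. The solution is then soft thresholding of $X^\top Y$ at level $\lambda$, which the usage example checks with $X = I$. This is one concrete case in which a constrained variational estimator and a thresholding rule coincide.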

翻译:我们对各种统计估计技术,包括惩罚、变异和阈值方法提出统一的看法,这些估计数据将结合统计线性反问题,包括非参数和变位点回归,以及高维线性模型作为实例进行分析。我们的方法显示,许多看来无关的估计计划是称为MIND(MIND(Multisale Nemirovskii-Dantzig)的多尺度性估计数据一般类别的特殊例子。这些估计数据是由于在可被视为地方假设的多重统计测试的交织限制下,最大限度地减少某些正规化功能的结果。为了计算目的,我们通过Lagrangeian惩罚和Fenchel双重性,将MIND重新列入较简单的非约束性优化问题,几个MIND的绩效在数字实例上得到了证明。