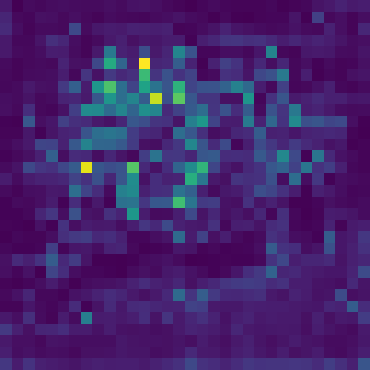

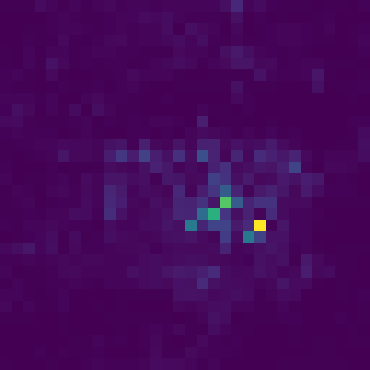

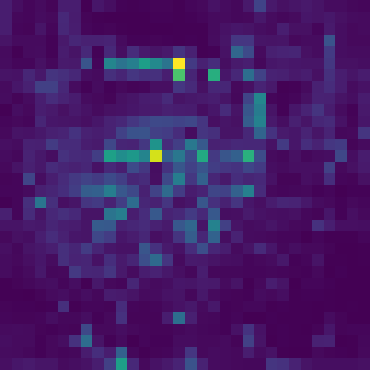

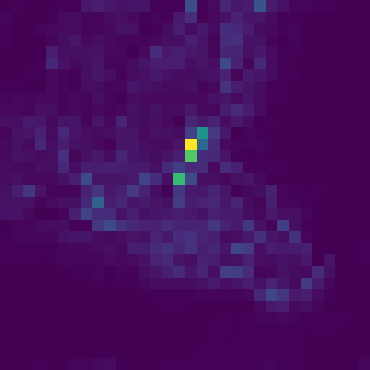

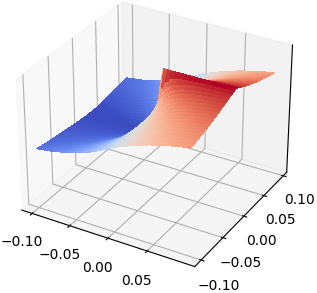

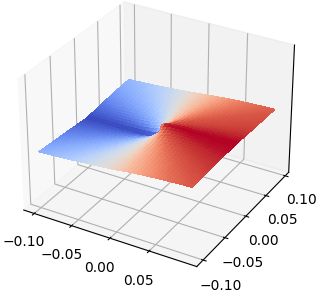

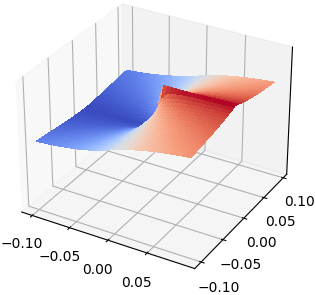

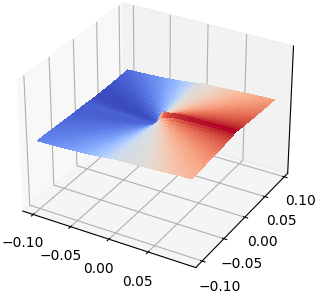

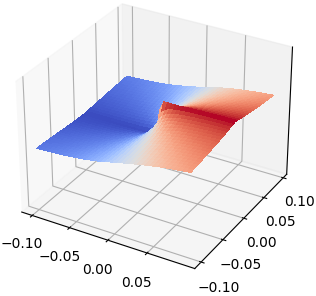

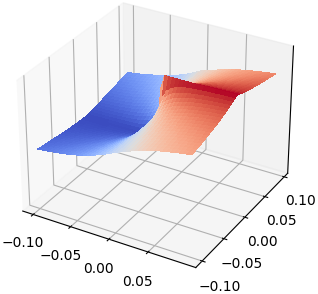

Recent studies demonstrate that deep networks, even when robustified by state-of-the-art adversarial training (AT), still suffer from large robust generalization gaps, in addition to training costs far higher than those of standard training. In this paper, we investigate this intriguing problem from a new perspective, i.e., injecting appropriate forms of sparsity during adversarial training. We introduce two alternatives for sparse adversarial training: (i) static sparsity, which leverages recent results from the lottery ticket hypothesis to identify critical sparse subnetworks arising early in training; (ii) dynamic sparsity, which allows the sparse subnetwork to adaptively adjust its connectivity pattern (while sticking to the same sparsity ratio) throughout training. We find that both static and dynamic sparse methods yield a win-win: they substantially shrink the robust generalization gap and alleviate robust overfitting, while significantly reducing training and inference FLOPs. Extensive experiments validate our proposals with multiple network architectures on diverse datasets, including CIFAR-10/100 and Tiny-ImageNet. For example, our methods reduce the robust generalization gap and robust overfitting by 34.44% and 4.02%, respectively, with comparable robust/standard accuracy boosts and 87.83%/87.82% training/inference FLOPs savings on CIFAR-100 with ResNet-18. Besides, our approaches can be organically combined with existing regularizers, establishing new state-of-the-art results in AT. Code is available at https://github.com/VITA-Group/Sparsity-Win-Robust-Generalization.
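To make the idea of dynamic sparsity concrete, the following is a minimal sketch of one connectivity update that changes which weights are active while keeping the sparsity ratio fixed. It is an illustration only and not the authors' implementation: the function name `prune_and_regrow`, the `update_fraction` parameter, the magnitude-based pruning criterion, and the random regrowth rule are all assumptions chosen for simplicity, and the example operates on a flat Python list rather than on real network layers.

```python
import random


def prune_and_regrow(weights, mask, update_fraction, rng):
    """One hypothetical connectivity update that preserves the sparsity ratio.

    weights: flat list of floats; mask: flat list of 0/1 flags (1 = active).
    Returns new (weights, mask) lists; the inputs are not modified.
    """
    active = [i for i, m in enumerate(mask) if m]
    inactive = [i for i, m in enumerate(mask) if not m]
    # Number of connections to swap, limited by the available inactive slots.
    k = min(int(update_fraction * len(active)), len(inactive))

    # Prune (assumed criterion): drop the k active weights of smallest magnitude.
    pruned = sorted(active, key=lambda i: abs(weights[i]))[:k]
    # Regrow (assumed criterion): activate k previously inactive positions at random.
    grown = rng.sample(inactive, k)

    new_weights = list(weights)
    new_mask = list(mask)
    for i in pruned:
        new_mask[i] = 0
        new_weights[i] = 0.0
    for i in grown:
        new_mask[i] = 1
        new_weights[i] = 0.0  # regrown connections start from zero
    return new_weights, new_mask


def sparsity(mask):
    """Fraction of inactive positions in the mask."""
    return 1.0 - sum(mask) / len(mask)


# Toy usage: 20 weights, 4 of them active.
rng = random.Random(0)
mask = [1] * 4 + [0] * 16
rng.shuffle(mask)
weights = [rng.gauss(0.0, 1.0) * m for m in mask]

new_weights, new_mask = prune_and_regrow(weights, mask, 0.5, rng)
print("sparsity before:", sparsity(mask))
print("sparsity after: ", sparsity(new_mask))
```

In an actual training loop, an update like this would be applied periodically between ordinary (adversarial) gradient steps on the active weights; the static alternative would instead fix the mask once, early in training, and never change it.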
