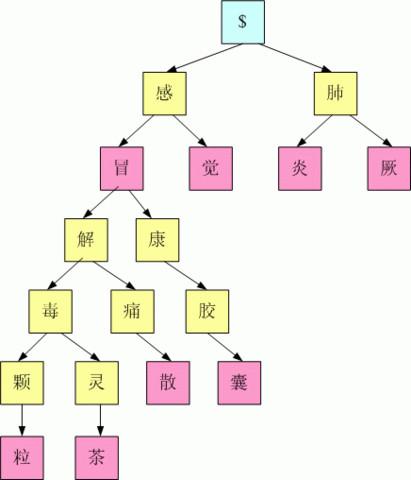

Autoregressive (AR) encoder-decoder neural networks have proved successful in many NLP problems, including semantic parsing, the task of translating natural language into machine-readable parse trees. However, the sequential prediction process of AR models can be slow. To accelerate AR for semantic parsing, we introduce a new technique called TreePiece that tokenizes a parse tree into subtrees and generates one subtree per decoding step. On the TopV2 benchmark, TreePiece decodes 4.6 times faster than standard AR, and it achieves comparable speed but significantly higher accuracy than Non-Autoregressive (NAR) models.
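
To make the idea of "one subtree per decoding step" concrete, here is a minimal toy sketch. It is not the TreePiece algorithm itself, since the abstract does not say how the subtree vocabulary is built or applied. The sketch assumes a hypothetical vocabulary given as a set of (parent label, child label) pairs that are allowed to stay in the same piece. The names `Node`, `split_into_pieces`, `count_nodes`, and the example labels are illustrative only.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Node:
    """A parse-tree node with a label and ordered children."""
    label: str
    children: list[Node] = field(default_factory=list)


def count_nodes(root: Node) -> int:
    """Number of nodes, i.e. decoding steps if one node is emitted per step."""
    return 1 + sum(count_nodes(child) for child in root.children)


def _absorb(node: Node, merges: set, piece: list, queue: deque) -> None:
    """Add `node` to the current piece, then absorb or defer its children."""
    piece.append(node.label)
    for child in node.children:
        if (node.label, child.label) in merges:
            # Assumed rule: this parent-child pair stays in the same piece.
            _absorb(child, merges, piece, queue)
        else:
            # Otherwise the child becomes the root of a later piece.
            queue.append(child)


def split_into_pieces(root: Node, merges: set) -> list:
    """Split a tree into connected pieces (lists of labels), top-down."""
    pieces = []
    queue = deque([root])
    while queue:
        piece = []
        _absorb(queue.popleft(), merges, piece, queue)
        pieces.append(piece)
    return pieces


# Illustrative tree and merge vocabulary (labels chosen for the example).
tree = Node("IN:CREATE_ALARM", [Node("SL:DATE_TIME"), Node("SL:ALARM_NAME")])
merges = {("IN:CREATE_ALARM", "SL:DATE_TIME")}
pieces = split_into_pieces(tree, merges)

print(count_nodes(tree))  # steps when emitting one node per step
print(len(pieces))        # steps when emitting one piece per step
print(pieces)
```

In this toy setting the tree has three nodes but only two pieces, so a decoder that emits one piece per step needs fewer steps than one that emits one node per step. The larger the pieces the vocabulary allows, the fewer steps are needed.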
