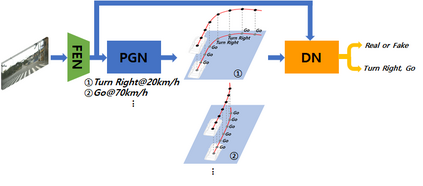

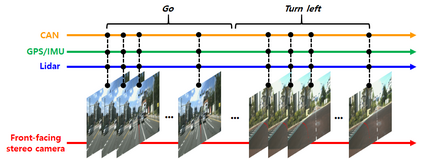

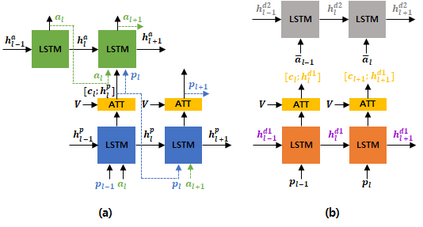

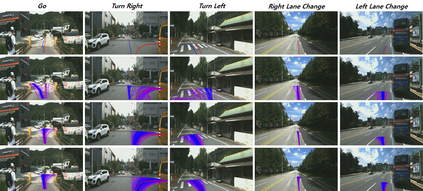

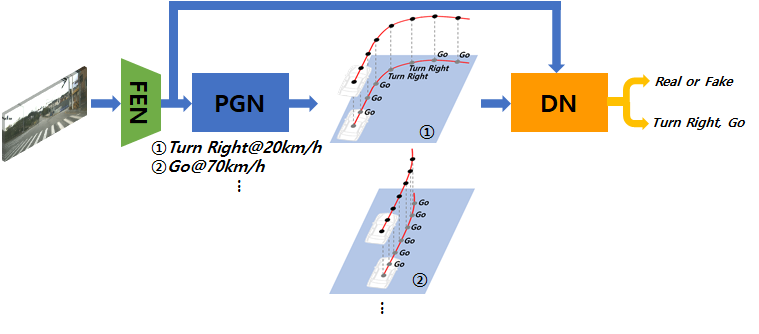

Targeting autonomous driving without high-definition (HD) maps, we present a model that generates multiple plausible paths for an autonomous vehicle from sensory inputs. Our generative model comprises two neural networks: a Feature Extraction Network (FEN) and a Path Generation Network (PGN). FEN extracts meaningful features from input scene images, while PGN generates multiple paths from those features given a driving intention and speed. To make the paths generated by PGN both plausible and consistent with the intention, we introduce a discriminator network and train it jointly with PGN under the generative adversarial network (GAN) framework. To further increase the accuracy and diversity of the generated paths, we encourage PGN to capture the intentions implicit in the positions along each path and let the discriminator evaluate how realistic the resulting sequence of intentions is. Finally, we introduce ETRIDriving, a dataset for autonomous driving in which the recorded sensory data are labeled with discrete high-level driving actions, and demonstrate state-of-the-art performance of the proposed model on ETRIDriving in terms of accuracy and diversity.
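The data flow described in the abstract (scene features, a driving intention, and a speed going in; several candidate paths coming out) can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the intention label set, the function names, the noise-based sampling, and the hand-written kinematics are hypothetical stand-ins, not the authors' FEN/PGN architecture, which is a pair of trained neural networks.

```python
import random
from typing import List, Tuple

Path = List[Tuple[float, float]]  # sequence of (x, y) positions in metres

# Hypothetical intention labels; the actual ETRIDriving label set may differ.
INTENTIONS = ["go_straight", "turn_left", "turn_right"]


def one_hot(intention: str) -> List[float]:
    """Encode a discrete driving intention as a one-hot vector."""
    return [1.0 if intention == name else 0.0 for name in INTENTIONS]


def toy_path_generator(features: List[float], intention: str,
                       speed: float, noise: float, steps: int = 5) -> Path:
    """Toy stand-in for PGN: maps (features, intention, speed, noise) to a path.

    A real generator would be a learned network; this uses fixed arithmetic
    only to show which inputs condition the output.
    """
    _, left, right = one_hot(intention)
    # Lateral drift: direction set by the intention, perturbed by the noise
    # sample and (weakly) by the scene features.
    curvature = 0.3 * (right - left) + 0.05 * noise + 0.01 * sum(features)
    x, y = 0.0, 0.0
    path: Path = []
    for t in range(1, steps + 1):
        y += speed * 0.1      # forward motion over an assumed 0.1 s time step
        x += curvature * t    # lateral offset grows along the path
        path.append((x, y))
    return path


def generate_paths(features: List[float], intention: str, speed: float,
                   k: int = 4, seed: int = 0) -> List[Path]:
    """Draw k noise samples to obtain k different paths for the same inputs."""
    rng = random.Random(seed)
    return [toy_path_generator(features, intention, speed, rng.gauss(0.0, 1.0))
            for _ in range(k)]


if __name__ == "__main__":
    for p in generate_paths([0.2, -0.1], "turn_left", speed=10.0):
        print([(round(x, 2), round(y, 2)) for x, y in p])
```

In this sketch, diversity comes solely from the sampled noise value, while the intention fixes the overall turning direction. In the approach the abstract describes, a discriminator trained adversarially with PGN would additionally judge whether each generated path is plausible and matches the given intention.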
