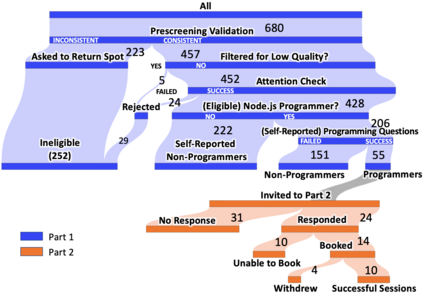

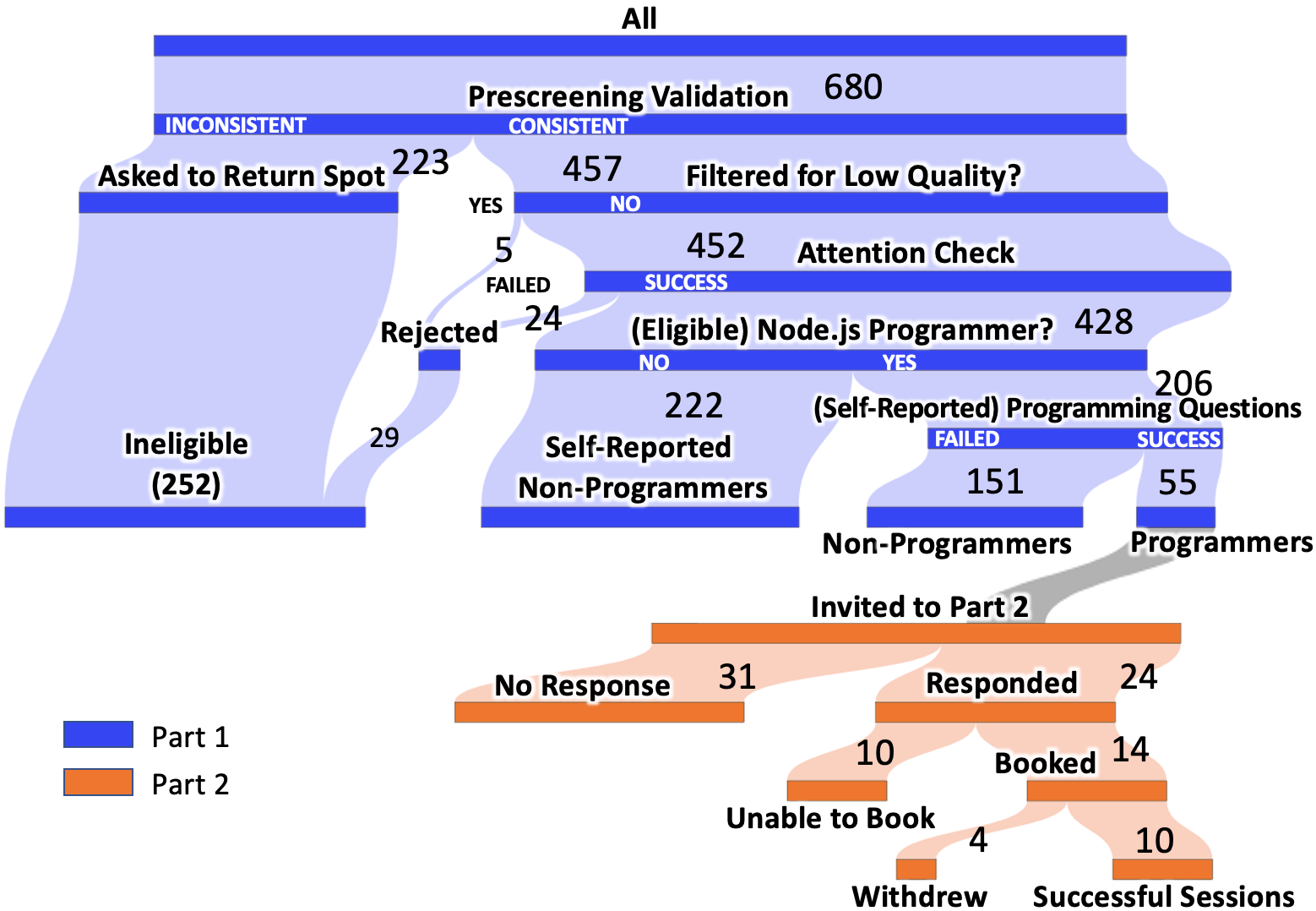

Online participant recruitment platforms such as Prolific have been gaining popularity in research, as they enable researchers to easily access large pools of participants. However, participant quality can be an issue: participants may give incorrect information to gain access to more studies, adding unwanted noise to results. This paper details our experience recruiting participants from Prolific for a user study requiring programming skills in Node.js, with the aim of helping other researchers conduct similar studies. We explore a method of recruiting programmer participants using prescreening validation, attention checks, and a series of programming knowledge questions. We received 680 responses and determined that 55 met the criteria to be invited to our user study. We ultimately conducted user study sessions via video calls with 10 participants. We conclude this paper with a series of recommendations for researchers.
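To make the three screening stages concrete, the following is a minimal Python sketch of how survey responses might be filtered. The abstract does not give the actual questions or thresholds, so the `Response` fields, the re-asked prescreening question, the rule that every attention check must pass, and the `MIN_KNOWLEDGE_SCORE` cutoff of 0.8 are all hypothetical assumptions, not the authors' procedure.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical cutoff: fraction of programming knowledge questions answered correctly.
MIN_KNOWLEDGE_SCORE = 0.8


@dataclass
class Response:
    # All fields are hypothetical; the real survey items are not described here.
    prescreen_answer: str        # answer recorded in the Prolific prescreening profile
    survey_answer: str           # the same question re-asked inside our own survey
    attention_passed: int        # attention checks answered correctly
    attention_total: int         # attention checks presented
    knowledge_correct: int       # programming knowledge questions answered correctly
    knowledge_total: int         # programming knowledge questions presented


def passes_screening(r: Response) -> bool:
    """Apply the three stages in order and reject at the first failure."""
    # Stage 1: prescreening validation. An inconsistent answer suggests the
    # prescreening profile may not be accurate.
    if r.prescreen_answer != r.survey_answer:
        return False
    # Stage 2: attention checks. Here every check must be passed.
    if r.attention_passed < r.attention_total:
        return False
    # Stage 3: programming knowledge questions, scored as a fraction correct.
    if r.knowledge_total == 0:
        return False
    return r.knowledge_correct / r.knowledge_total >= MIN_KNOWLEDGE_SCORE


def shortlist(responses: List[Response]) -> List[Response]:
    """Return the responses eligible for an invitation to the user study."""
    return [r for r in responses if passes_screening(r)]
```

Checking the cheap consistency and attention stages before scoring the knowledge questions keeps the logic easy to extend, for example to record which stage rejected each response. In the study itself, the screening narrowed 680 responses to 55 eligible candidates (about 8%), of whom 10 took part in the video-call sessions.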