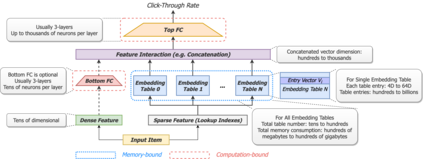

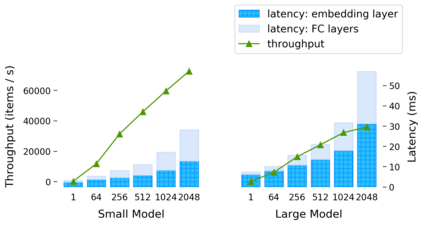

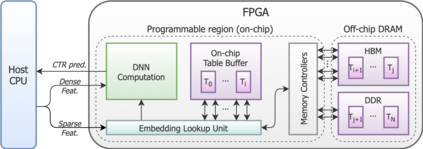

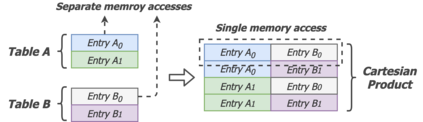

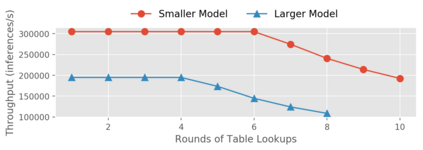

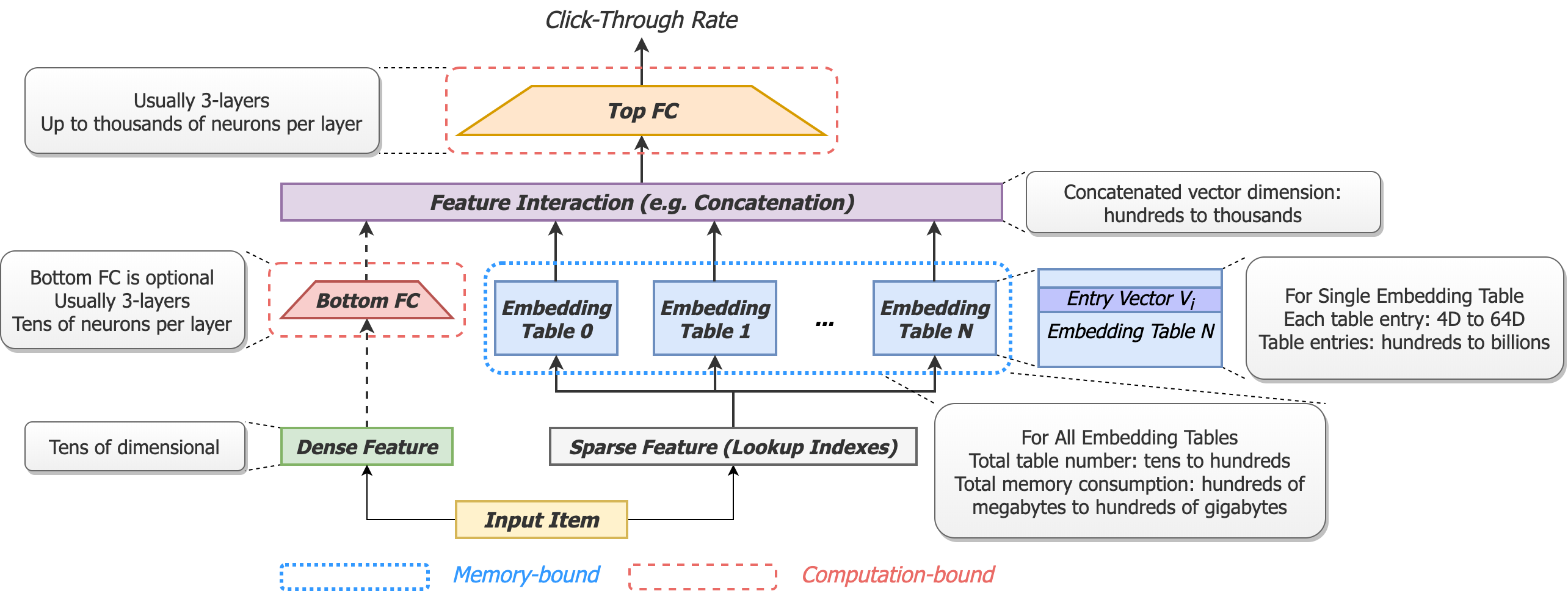

Deep neural networks are widely used in personalized recommendation systems. Unlike regular DNN inference workloads, recommendation inference is memory-bound because of the many random memory accesses needed to look up the embedding tables. Inference is also tightly latency-constrained: a recommendation for a user must be produced within tens of milliseconds. In this paper, we propose MicroRec, a high-performance inference engine for recommendation systems. MicroRec accelerates recommendation inference by (1) redesigning the data structures involved in the embeddings to reduce the number of lookups needed and (2) taking advantage of the High-Bandwidth Memory (HBM) available on FPGA accelerators to reduce latency by performing lookups in parallel. We have implemented the resulting design on an FPGA board, covering both the embedding lookup step and the complete inference process. Compared to an optimized CPU baseline (16 vCPUs, AVX2-enabled), MicroRec achieves a 13.8~14.7x speedup on embedding lookup alone and a 2.5~5.4x speedup for the entire recommendation inference in terms of throughput. As for latency, CPU-based engines need milliseconds to infer a recommendation, while MicroRec takes only microseconds, a significant advantage for real-time recommendation systems.
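The abstract describes the data-structure redesign only at a high level. The sketch below is a minimal illustration built on an assumption the abstract does not state: that the redesign merges pairs of small embedding tables into their Cartesian product, so that a single lookup returns what previously took two. It also includes a deliberately simplified round-count model showing why spreading tables over independent HBM channels shortens the lookup phase. The names `cartesian_merge`, `merged_index`, and `lookup_rounds` are hypothetical, and all table contents and channel counts are made up for illustration.

```python
import math
from typing import List

Vector = List[float]
Table = List[Vector]  # row id -> embedding vector


def cartesian_merge(a: Table, b: Table) -> Table:
    """Combine two embedding tables into their Cartesian product (assumed technique).

    Row (i * len(b) + j) of the result holds a[i] concatenated with b[j],
    so one lookup in the merged table replaces one lookup in each of a and b.
    The merged table has len(a) * len(b) rows, so this only pays off for small tables.
    """
    return [row_a + row_b for row_a in a for row_b in b]


def merged_index(i: int, j: int, rows_b: int) -> int:
    """Map a pair of original row ids (i in a, j in b) to the merged table's row id."""
    return i * rows_b + j


def lookup_rounds(num_tables: int, num_channels: int) -> int:
    """Simplified latency model (hypothetical, not measured MicroRec behaviour):
    each memory channel serves one lookup per round and tables are spread evenly
    across channels, so all lookups finish after ceil(tables / channels) rounds."""
    return math.ceil(num_tables / num_channels)


if __name__ == "__main__":
    # Two tiny, made-up embedding tables.
    color = [[0.1, 0.2], [0.3, 0.4]]      # 2 rows, dimension 2
    size = [[1.0], [2.0], [3.0]]          # 3 rows, dimension 1

    combined = cartesian_merge(color, size)
    print(len(combined))                  # 6 (rows of dimension 3)

    # One lookup in the merged table returns both original embeddings.
    row = combined[merged_index(1, 2, len(size))]
    assert row == color[1] + size[2]
    print(row)                            # [0.3, 0.4, 3.0]

    # Illustrative numbers only: 8 tables on 4 channels vs. 4 merged pairs.
    print(lookup_rounds(8, 4), lookup_rounds(4, 4))  # 2 1
    # Single-channel baseline: every lookup is serialized.
    print(lookup_rounds(8, 1))            # 8
```

The trade-off is memory: merging tables with r_a and r_b rows yields r_a * r_b rows, so the approach is only sensible when the product still fits comfortably in fast memory. The round-count model ignores per-access latency differences, bank conflicts, and unevenly sized tables, so it shows the direction of the effect rather than real timings.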

翻译:在个人化建议系统中广泛使用深心血管网络。与正常的 DNN 推断工作量不同,建议推导值与正常的 DNN 推断值不同,建议推导值具有内存性,因为要查看嵌入表需要许多随机的内存存存权限,因此建议值在延缓度方面也受到很大限制,因为为用户提出建议必须在大约几十毫秒内完成。在本文中,我们提议MicroRec,这是建议系统的一种高性能推导引擎。微Rec加速建议引文,办法是(1)重新设计嵌入中的数据结构,以减少所需的查勘次数,(2)利用FPGA 中高宽线内存(HBM)的可用性,通过平行查勘,处理延缓度问题。我们已经在FPGA 板上实施了相应的设计,包括嵌入式查取步骤以及完整的推导过程。 与优化的CPU基线(16 VCPU, AVX2-C) 相比,微后加参考,微Rec 系统实现了安装全13.8~14.7x(H) 高级嵌入系统,同时查看2.5~5摩车建议。