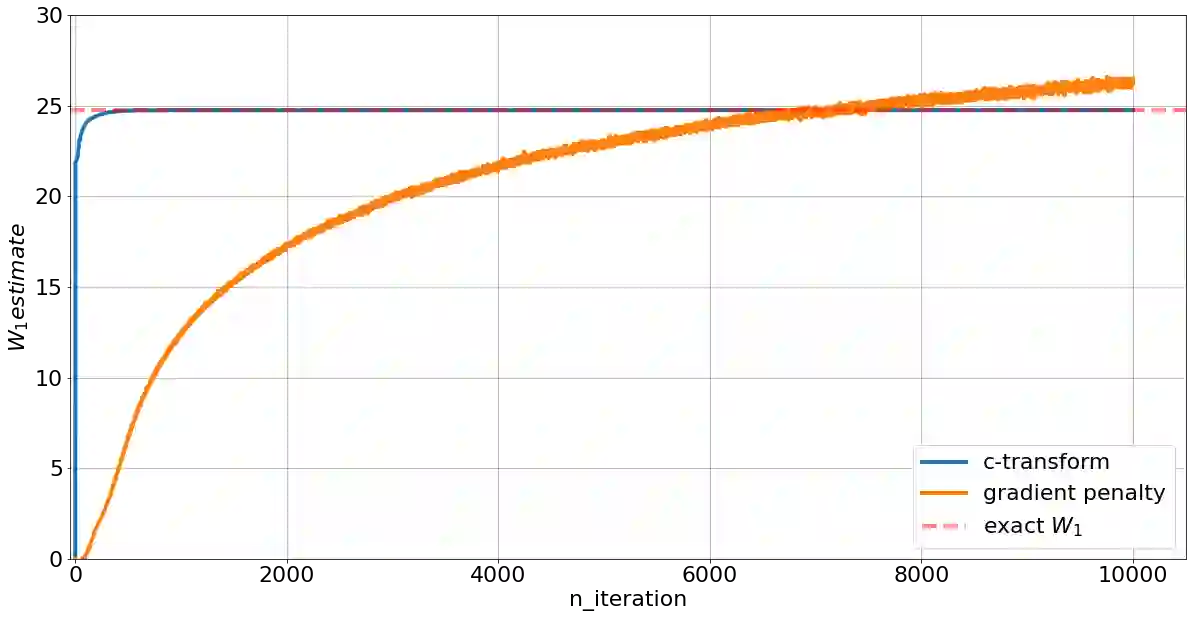

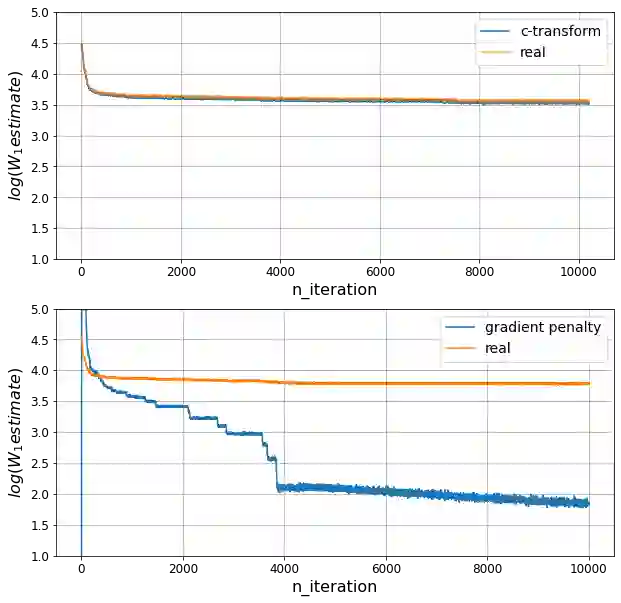

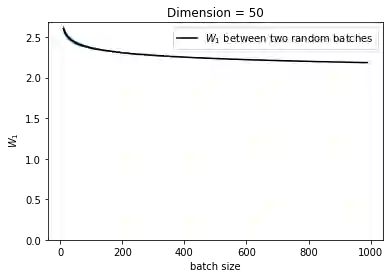

Wasserstein GANs are based on the idea of minimising the Wasserstein distance between the real data distribution and the generated distribution. We provide an in-depth mathematical analysis of the differences between this theoretical setup and the reality of training Wasserstein GANs. In this work, we gather both theoretical and empirical evidence that the WGAN loss is not a meaningful approximation of the Wasserstein distance. Moreover, we argue that the Wasserstein distance is not even a desirable loss function for deep generative models, and conclude that the success of Wasserstein GANs can in fact be attributed to a failure to approximate the Wasserstein distance.
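For context, WGANs work with the Kantorovich-Rubinstein dual form of the 1-Wasserstein distance, $W_1(P_r, P_g) = \sup_{\|f\|_L \le 1} \mathbb{E}_{x \sim P_r}[f(x)] - \mathbb{E}_{x \sim P_g}[f(x)]$. In practice the supremum is approximated by a trained critic network. The sketch below is illustrative only and is not the paper's code or experimental setup. The helper names `empirical_w1_1d` and `critic_estimate` are hypothetical. It relies on the fact that, in one dimension and with equally sized samples, $W_1$ between two empirical distributions has a closed form: sort both samples and average the absolute differences. It also checks that any fixed 1-Lipschitz critic only yields a lower bound on that value.

```python
import random


def empirical_w1_1d(xs, ys):
    """1-Wasserstein distance between two 1-D empirical distributions.

    Assumes both samples are non-empty, have the same size, and carry
    uniform weights (a hypothetical helper for illustration).
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("expected two non-empty samples of equal size")
    # In 1-D, the optimal transport plan matches the sorted samples in order.
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


def critic_estimate(f, xs, ys):
    """Dual-form value E_real[f] - E_gen[f] for one fixed critic f.

    If f is 1-Lipschitz, this value is a lower bound on W1.
    """
    return sum(f(x) for x in xs) / len(xs) - sum(f(y) for y in ys) / len(ys)


if __name__ == "__main__":
    rng = random.Random(0)
    real = [rng.gauss(0.0, 1.0) for _ in range(1000)]
    fake = [rng.gauss(2.0, 1.0) for _ in range(1000)]
    w1 = empirical_w1_1d(real, fake)
    # f(x) = -x is 1-Lipschitz, so its dual value cannot exceed W1.
    est = critic_estimate(lambda x: -x, real, fake)
    print(f"W1 = {w1:.3f}, critic estimate = {est:.3f}")
```

The two samples differ only by a shift of 2, so both printed numbers should come out at roughly 2. A critic-based value matches $W_1$ only when the critic attains the supremum in the dual form. For any other 1-Lipschitz critic it gives only a lower bound.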

翻译:瓦森斯坦GANs基于将实际分布和生成分布之间的瓦森斯坦距离最小化的理念,我们对培训瓦森斯坦GANs的理论设置和现实之间的差异提供了深入的数学分析,在这项工作中,我们收集理论和经验证据,证明WGAN的损失不是瓦森斯坦距离的有意义的近似值,此外,我们争辩说,瓦森斯坦距离甚至不是深层基因模型的可取损失函数,我们的结论是,瓦塞尔斯坦GANs的成功可以归结于瓦森斯坦距离的近似值。