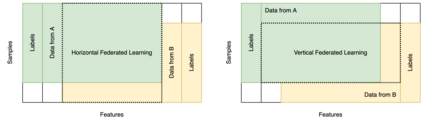

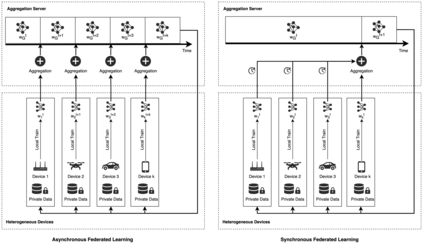

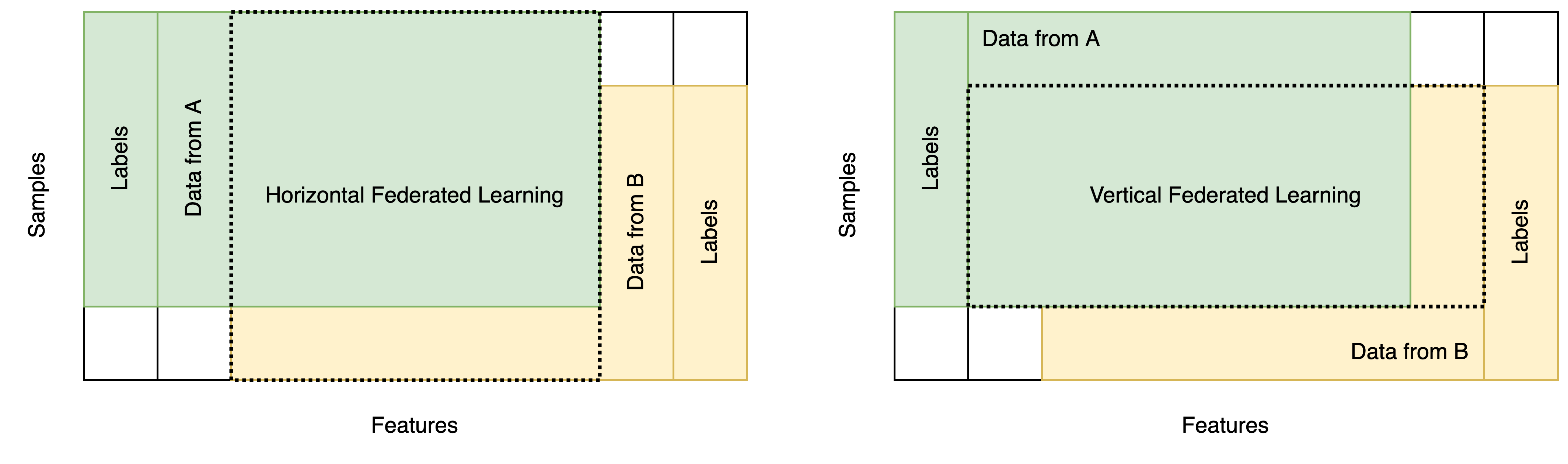

Federated learning (FL) is growing rapidly alongside the wave of distributed machine learning. In the FL paradigm, the global model is aggregated on a centralized aggregation server from the parameters of local models rather than from local training data, mitigating the privacy leakage caused by collecting sensitive information. With the increasing computing and communication capabilities of edge and IoT devices, applying FL on heterogeneous devices to train machine learning models has become a trend. The synchronous aggregation strategy of the classic FL paradigm cannot use limited resources effectively, especially on heterogeneous devices, because in each training round it waits for straggler devices before aggregating. Furthermore, the disparity of data distributed across devices (i.e., data heterogeneity) in real-world scenarios degrades model accuracy. As a result, many asynchronous FL (AFL) paradigms have been proposed for various application scenarios to improve efficiency, performance, privacy, and security. This survey comprehensively analyzes and summarizes existing variants of AFL according to a novel classification mechanism covering device heterogeneity, data heterogeneity, privacy and security on heterogeneous devices, and applications on heterogeneous devices. Finally, this survey identifies emerging challenges and presents promising research directions in this under-investigated field.
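The abstract contrasts synchronous aggregation, which waits for stragglers in every round, with asynchronous aggregation. The following is a minimal sketch of that difference, built on several assumptions:

- Models are plain Python lists of floats.
- The synchronous path is a sample-weighted FedAvg average.
- The asynchronous path uses a FedAsync-style polynomial staleness discount.

The FedAsync-style rule is only one of the many AFL variants such a survey covers. It is not a method proposed by the survey itself. All function names and the parameters `alpha` and `a` are hypothetical and chosen for illustration.

```python
"""Sketch: synchronous FedAvg vs. a FedAsync-style asynchronous update.
Models are plain lists of floats; all names here are hypothetical."""
from typing import List

Model = List[float]


def sync_round_time(device_times: List[float]) -> float:
    """A synchronous round lasts as long as its slowest (straggler) device."""
    return max(device_times)


def fedavg(local_models: List[Model], num_samples: List[int]) -> Model:
    """Synchronous aggregation: sample-weighted average of ALL local models.
    The server can only call this once every device has reported."""
    total = sum(num_samples)
    dim = len(local_models[0])
    return [
        sum(m[i] * n for m, n in zip(local_models, num_samples)) / total
        for i in range(dim)
    ]


def staleness_weight(alpha: float, staleness: int, a: float = 0.5) -> float:
    """Polynomial staleness discount (assumed form): alpha * (1 + s)^(-a).
    Updates computed on an older global model receive a smaller weight."""
    return alpha * (1 + staleness) ** (-a)


def async_update(global_model: Model, local_model: Model,
                 alpha: float, staleness: int) -> Model:
    """Asynchronous aggregation: mix ONE arriving local model into the
    global model immediately, without waiting for any other device."""
    w = staleness_weight(alpha, staleness)
    return [(1 - w) * g + w * l for g, l in zip(global_model, local_model)]


if __name__ == "__main__":
    # Three heterogeneous devices with different local-training times (seconds).
    print(sync_round_time([1.0, 2.0, 10.0]))  # 10.0: fast devices sit idle

    # Two devices holding 1 and 3 samples respectively.
    print(fedavg([[1.0, 0.0], [3.0, 2.0]], [1, 3]))  # [2.5, 1.5]

    g = [0.0, 0.0]
    # A fresh update (staleness 0) is mixed in with weight alpha = 0.5.
    g = async_update(g, [2.0, 4.0], alpha=0.5, staleness=0)
    print(g)  # [1.0, 2.0]
    # A stale update (staleness 3) is discounted to weight 0.5 * 4**-0.5 = 0.25.
    g = async_update(g, [5.0, 6.0], alpha=0.5, staleness=3)
    print(g)  # [2.0, 3.0]
```

The design choice being illustrated is the trade-off between the two paths:

- **Synchronous path.** Round time is bounded by the slowest device.
- **Asynchronous path.** The server makes progress whenever any device reports. In exchange, it must decide how much to trust updates computed on an outdated global model, which the staleness discount handles in this sketch.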

翻译:联邦学习联盟(FL)正在经历随着分布式机器学习浪潮的浪潮而迅速兴盛。在FL范式中,全球模型根据当地模型参数而不是当地培训数据,在中央集成服务器上根据当地模型参数而不是根据当地培训数据进行汇总,以减少敏感信息收集造成的隐私泄漏;随着边缘和IoT装置的计算和通信能力的提高,将FL应用于不同设备以培训机器学习模型成为趋势;传统FL范式中的同步聚合战略无法有效利用有限资源,特别是各种装置,原因是它等待在每个培训回合中汇总之前使用分散式装置。此外,设备(即数据异质性)在现实世界情景中的数据分布差异降低了模型的准确性。结果,许多不均匀的FL(AFL)范式在各种应用情景中被介绍,以提高效率、性能、隐私和安全性。这项调查根据新颖的分类机制,全面分析和总结了亚利联的现有变体,包括设备异性、数据异性、隐私和安全性、数据性能和安全性能等,从而降低了模型的准确性,最终展示了这一多样化设备领域上的变化趋势。