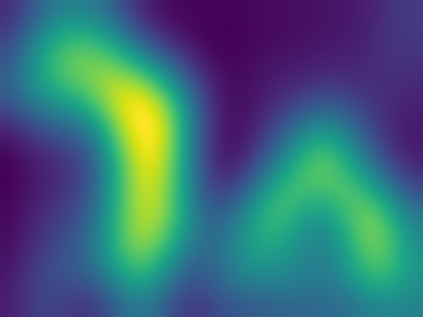

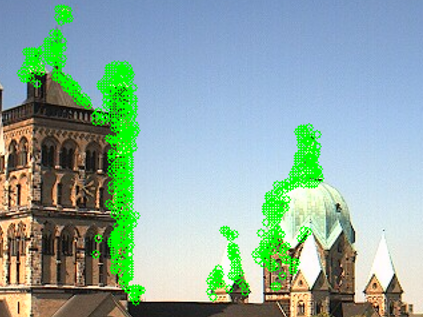

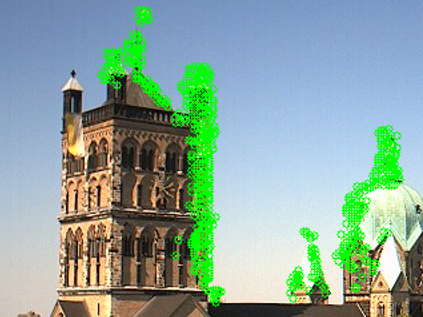

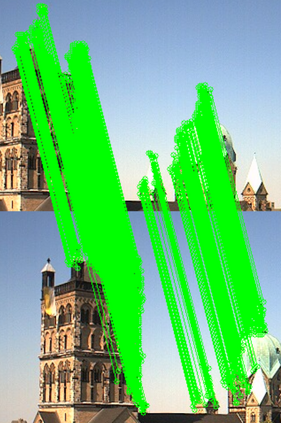

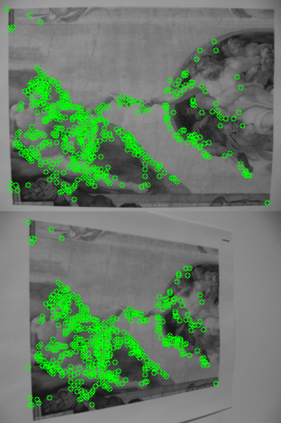

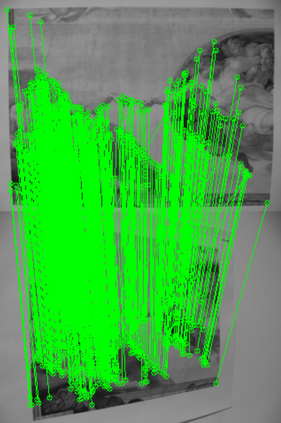

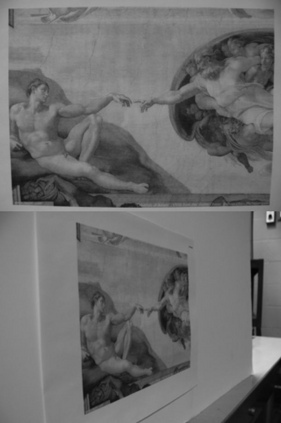

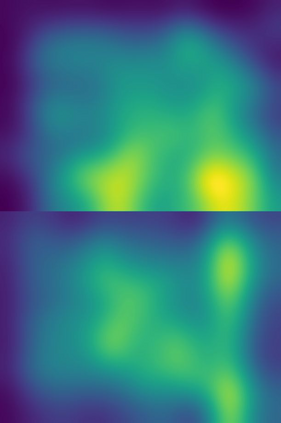

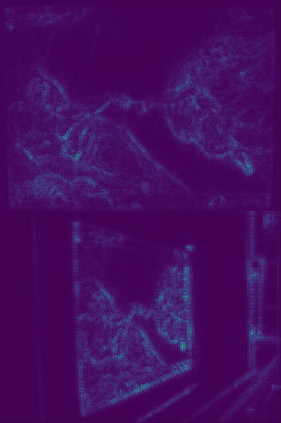

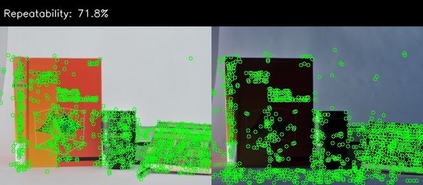

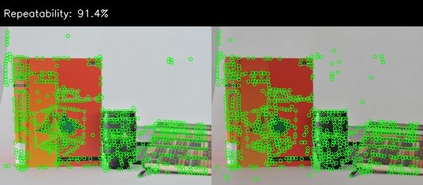

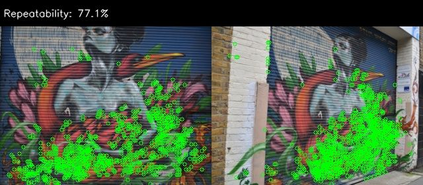

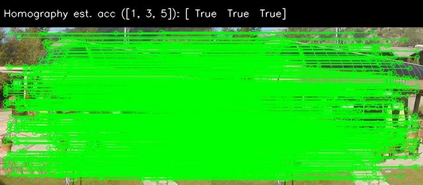

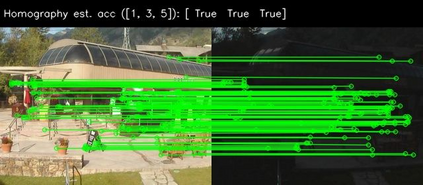

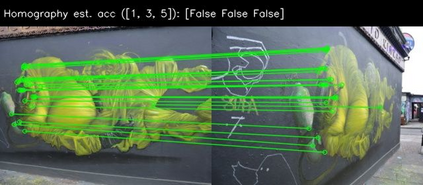

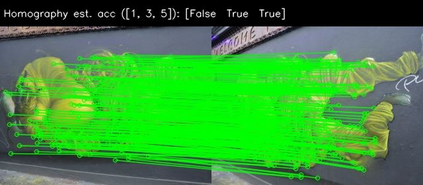

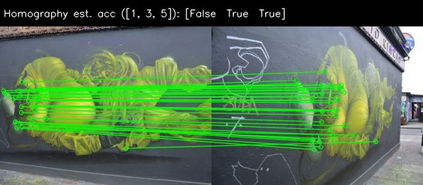

Learnable keypoint detectors and descriptors are beginning to outperform classical hand-crafted feature extraction methods. Recent studies on self-supervised learning of visual representations have driven steady performance gains in learnable models based on deep networks. By leveraging traditional data augmentations and homography transformations, these networks learn to detect corners under adverse conditions such as extreme illumination changes. However, their generalization is limited to corner-like features, either detected a priori by classical methods or present in synthetically generated data. In this paper, we propose the Correspondence Network (CorrNet), which learns to detect repeatable keypoints and to extract discriminative descriptors via unsupervised contrastive learning under spatial constraints. Our experiments show that, through our proposed joint guided backpropagation of the latent space of a pair of input images, CorrNet detects not only low-level features such as corners but also high-level features that represent similar objects present in both images. Our approach obtains competitive results under viewpoint changes and achieves state-of-the-art performance under illumination changes.
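
The abstract does not specify CorrNet's architecture or loss. As a rough illustration of what "contrastive learning under spatial constraints" can mean, the sketch below assumes a known homography between two views to establish ground-truth point correspondences. It then applies a standard InfoNCE loss to descriptors sampled at those points. The helper names (`warp_points`, `sample_descriptors`, `info_nce_loss`), the temperature of 0.1, nearest-neighbour sampling, and the random descriptor maps are hypothetical stand-ins, not the paper's method. A real model would produce the descriptor maps with a network and backpropagate through the loss.

```python
import numpy as np


def warp_points(points, H):
    """Map an (N, 2) array of (x, y) pixel coordinates through a 3x3 homography H."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])  # (N, 3)
    warped = homogeneous @ H.T
    return warped[:, :2] / warped[:, 2:3]  # divide by w to return to (x, y)


def sample_descriptors(desc_map, points):
    """Nearest-neighbour lookup of an (H, W, D) descriptor map at (x, y) points."""
    h, w, _ = desc_map.shape
    xs = np.clip(np.rint(points[:, 0]).astype(int), 0, w - 1)
    ys = np.clip(np.rint(points[:, 1]).astype(int), 0, h - 1)
    return desc_map[ys, xs]


def info_nce_loss(desc_a, desc_b, temperature=0.1):
    """InfoNCE: row i of desc_b is the positive for row i of desc_a; other rows are negatives."""
    a = desc_a / np.linalg.norm(desc_a, axis=1, keepdims=True)
    b = desc_b / np.linalg.norm(desc_b, axis=1, keepdims=True)
    logits = a @ b.T / temperature               # (N, N) scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)  # stabilise the softmax
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))   # cross-entropy on the diagonal


# Toy example (hypothetical data): view B is view A translated by (dx, dy) = (5, 3).
rng = np.random.default_rng(0)
H = np.array([[1.0, 0.0, 5.0],
              [0.0, 1.0, 3.0],
              [0.0, 0.0, 1.0]])
map_a = rng.normal(size=(32, 32, 16))  # stand-in for a network's dense descriptors
map_b = np.zeros_like(map_a)
map_b[3:, 5:] = map_a[:-3, :-5]        # pixel (x, y) in A lands at (x + 5, y + 3) in B

# Distinct keypoints in A whose warped positions stay inside B.
idx = rng.choice(20 * 20, size=8, replace=False)
pts_a = np.stack([idx % 20, idx // 20], axis=1).astype(float)
pts_b = warp_points(pts_a, H)          # spatial constraint: known correspondences

desc_a = sample_descriptors(map_a, pts_a)
desc_b = sample_descriptors(map_b, pts_b)

loss_matched = info_nce_loss(desc_a, desc_b)
loss_shuffled = info_nce_loss(desc_a, np.roll(desc_b, 1, axis=0))
print(loss_matched, loss_shuffled)
```

In this toy setup, descriptors paired through the homography yield a lower loss than the same descriptors paired with shifted partners. Minimising such a loss pushes a network to make corresponding points similar and non-corresponding points dissimilar.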

翻译:可学习的钥匙探测器和描述器正在开始超过传统的手工制作特征提取方法。最近关于自我监督的视觉表现学习的研究推动了基于深层网络的可学习模型的日益提高的性能。通过利用传统数据扩增和同质法转换,这些网络学会在极端照明变化等不利条件下探测角。然而,它们的普及能力仅限于通过古典方法或合成生成的数据先验地探测到的近角特征。在本文件中,我们提议建立通信网络(CorrNet),在空间限制下,通过不受监督的对比学习,发现可重复的关键点并提取歧视性描述。我们的实验显示,CorrNet不仅能够探测角等低层次特征,而且能够通过我们提议的对其潜质空间进行联合引导反剖析,在一对投入图像中体现类似物体的高层次特征。我们的方法在视觉变化中取得了竞争性结果,并在污染变化下实现状态性表现。