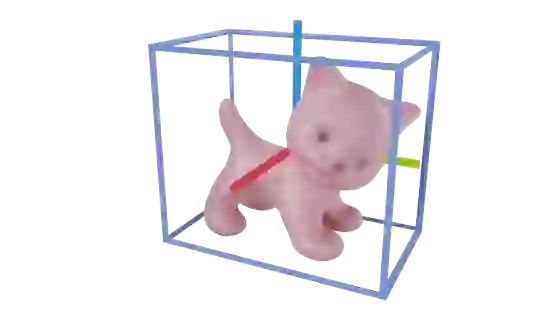

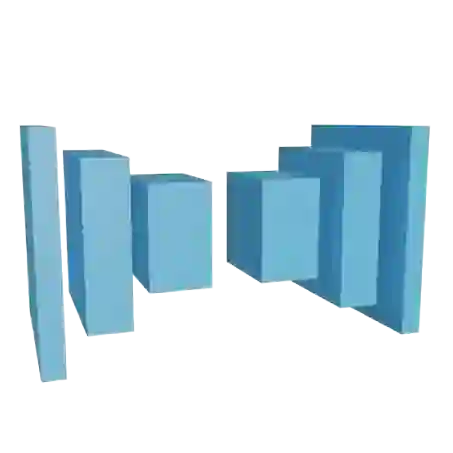

We propose a new method named OnePose for object pose estimation. Unlike existing instance-level or category-level methods, OnePose does not rely on CAD models and can handle objects in arbitrary categories without instance- or category-specific network training. OnePose draws on ideas from visual localization and requires only a simple RGB video scan of the object to build a sparse SfM model of it. This model is then registered to new query images with a generic feature matching network. To mitigate the slow runtime of existing visual localization methods, we propose a new graph attention network that directly matches 2D interest points in the query image with the 3D points in the SfM model, resulting in efficient and robust pose estimation. Combined with a feature-based pose tracker, OnePose can stably detect and track the 6D poses of everyday household objects in real time. We also collected a large-scale dataset consisting of 450 sequences of 150 objects.
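To make the idea of direct 2D-3D matching concrete, the following is a minimal sketch that pairs query keypoint descriptors with descriptors attached to SfM points using mutual nearest neighbours on cosine similarity. This is a simple stand-in and not the graph attention network proposed here; the function names, the `min_score` threshold, and the toy descriptors are all assumptions made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def match_2d_3d(query_desc, model_desc, min_score=0.8):
    """Return (query_idx, model_idx) pairs that are mutual nearest neighbours.

    query_desc: descriptors of 2D interest points in the query image.
    model_desc: descriptors associated with 3D points of the SfM model.
    min_score:  hypothetical similarity threshold for accepting a match.
    """
    if not query_desc or not model_desc:
        return []
    # Dense similarity table: rows are 2D points, columns are 3D points.
    scores = [[cosine(q, m) for m in model_desc] for q in query_desc]
    best_for_query = [
        max(range(len(model_desc)), key=lambda j: row[j]) for row in scores
    ]
    best_for_model = [
        max(range(len(query_desc)), key=lambda i: scores[i][j])
        for j in range(len(model_desc))
    ]
    matches = []
    for i, j in enumerate(best_for_query):
        # Keep a pair only if each side picks the other and the score is high.
        if best_for_model[j] == i and scores[i][j] >= min_score:
            matches.append((i, j))
    return matches


# Toy descriptors (made up for illustration only).
query_desc = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.1], [0.5, 0.5, 0.7]]
model_desc = [[0.0, 0.9, 0.0], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0]]
matches = match_2d_3d(query_desc, model_desc)
print(matches)
```

In a full pipeline, the matched 2D keypoints and 3D points would typically be passed to a PnP solver with RANSAC to recover the 6D pose; that step is omitted from this sketch.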
