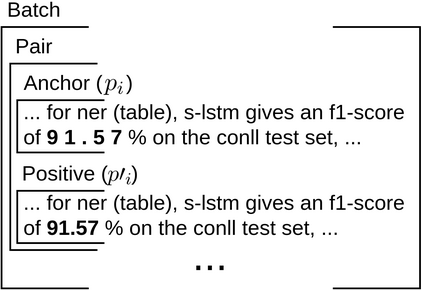

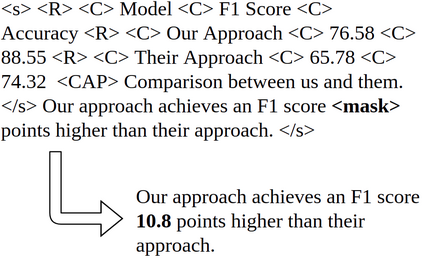

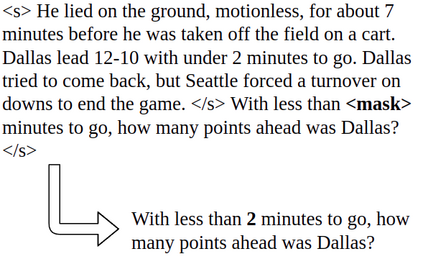

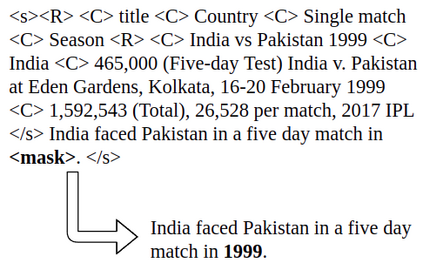

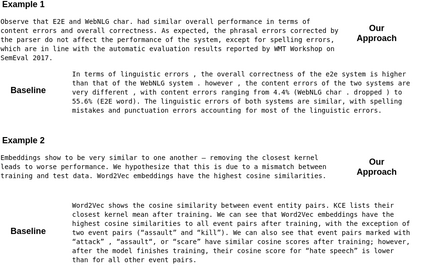

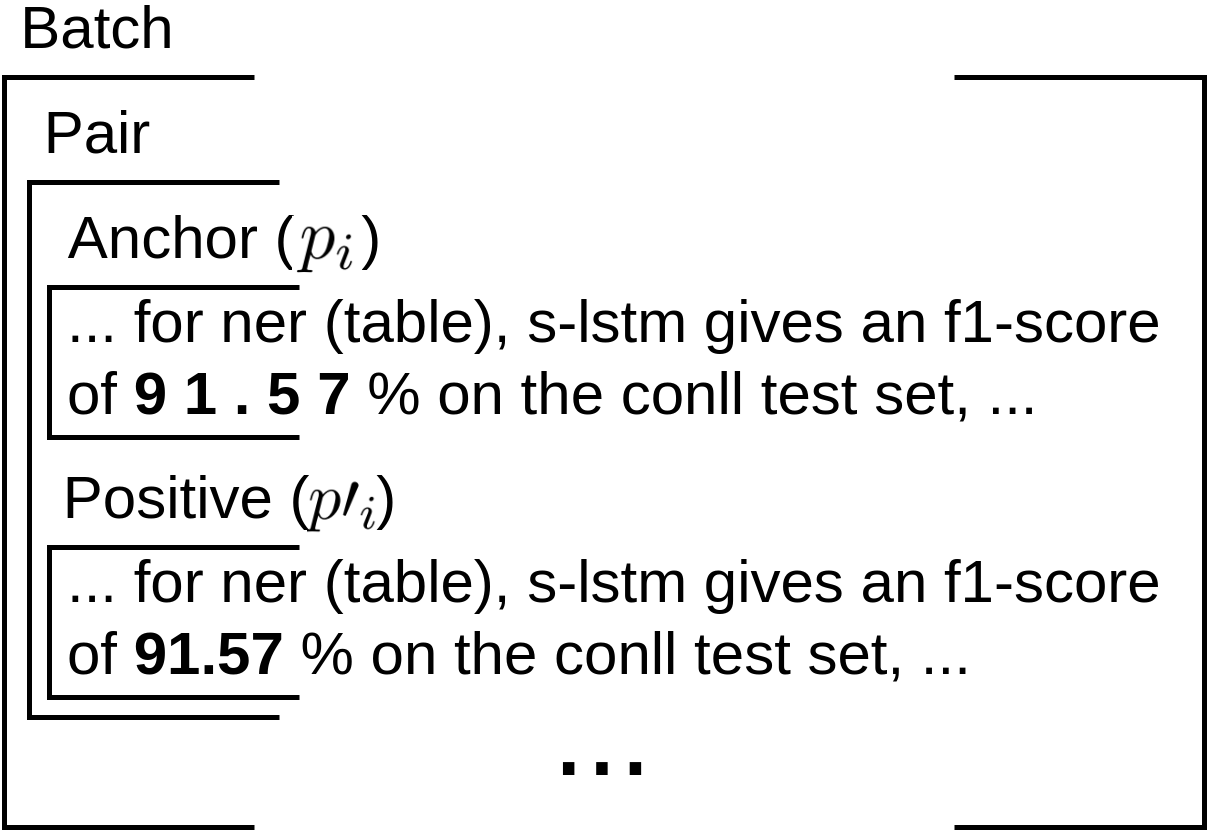

State-of-the-art pretrained language models tend to perform below their capabilities when applied out-of-the-box on tasks that require reasoning over numbers. Recent work sees two main reasons for this: (1) popular tokenisation algorithms are optimized for common words, and therefore have limited expressiveness for numbers, and (2) common pretraining objectives do not target numerical reasoning or understanding numbers at all. Recent approaches usually address them separately and mostly by proposing architectural changes or pretraining models from scratch. In this paper, we propose a new extended pretraining approach called reasoning-aware pretraining to jointly address both shortcomings without requiring architectural changes or pretraining from scratch. Using contrastive learning, our approach incorporates an alternative number representation into an already pretrained model, while improving its numerical reasoning skills by training on a novel pretraining objective called inferable number prediction task. We evaluate our approach on three different tasks that require numerical reasoning, including (a) reading comprehension in the DROP dataset, (b) inference-on-tables in the InfoTabs dataset, and (c) table-to-text generation in WikiBio and SciGen datasets. Our results on DROP and InfoTabs show that our approach improves the accuracy by 9.6 and 33.9 points on these datasets, respectively. Our human evaluation on SciGen and WikiBio shows that our approach improves the factual correctness on all datasets.

翻译:最先进的预先培训语言模式往往在应用需要推理数字的任务时表现低于其能力。最近的工作发现两个主要原因:(1) 大众化象征性算法为通用词优化,因此对数字的表达性有限,而共同的预培训目标并不针对数字推理或理解数字。最近的方法通常单独解决它们,而且大多从零开始提出建筑改变或培训前的模式。在本文中,我们提议了一个新的扩大的培训前方法,称为推理意识预培训,以联合解决这两个缺陷,而无需从零开始对建筑进行修改或预培训。我们的方法采用对比学习,将替代数字代表纳入已经预先培训的模式,同时通过培训新颖的预培训目标(即可推断数字预测任务)提高数字推理技能。我们评估了三种不同任务的方法,需要从数字推理,包括:(a) 阅读DROP数据集的理解,(b) InfoTabs数据集中的推论,以及(c) WikiB 和 SciG.9信息技术中的表格生成,分别显示我们的数据和SDIG的准确性。