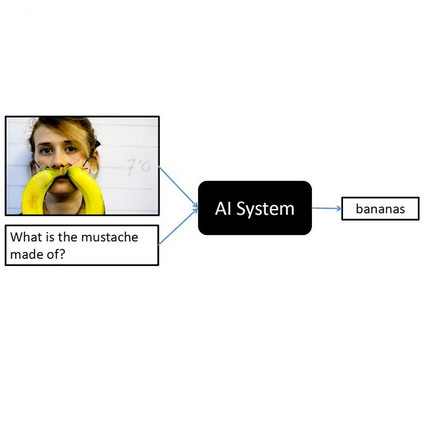

We present VISPROG, a neuro-symbolic approach to solving complex and compositional visual tasks given natural language instructions. VISPROG avoids the need for any task-specific training. Instead, it uses the in-context learning ability of large language models to generate Python-like modular programs, which are then executed to obtain both the solution and a comprehensive and interpretable rationale. Each line of the generated program may invoke one of several off-the-shelf computer vision models, image processing routines, or Python functions to produce intermediate outputs that may be consumed by subsequent parts of the program. We demonstrate the flexibility of VISPROG on four diverse tasks: compositional visual question answering, zero-shot reasoning on image pairs, factual knowledge object tagging, and language-guided image editing. We believe neuro-symbolic approaches like VISPROG are an exciting avenue to easily and effectively expand the scope of AI systems to serve the long tail of complex tasks that people may wish to perform.
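The execution model described above, where each program line calls a module and stores an intermediate output for later lines, can be illustrated with a small interpreter. This is a minimal sketch under stated assumptions, not VISPROG's implementation: the `NAME=MODULE(arg=value,...)` line syntax, the hypothetical module names `LOC`, `COUNT`, `ADD`, and `RESULT`, and the toy "image" (a list of object labels) are illustrative stand-ins for real vision models and image processing routines. The per-step trace plays the role of the interpretable rationale.

```python
import ast
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical toy "image": a list of object labels stands in for pixels.
Image = List[str]


# Hypothetical stand-ins for off-the-shelf vision models and Python helpers.
def loc(image: Image, category: str) -> List[str]:
    """Pretend object detector: return every label that matches `category`."""
    return [label for label in image if label == category]


def count(box: List[str]) -> int:
    """Count the detections produced by an earlier LOC step."""
    return len(box)


def add(a: int, b: int) -> int:
    """Plain Python function used as a module."""
    return a + b


def result(var: Any) -> Any:
    """Mark a value as the program's final answer."""
    return var


# Registry mapping module names (as they appear in programs) to callables.
MODULES: Dict[str, Callable[..., Any]] = {
    "LOC": loc,
    "COUNT": count,
    "ADD": add,
    "RESULT": result,
}


def execute(program: str, image: Image) -> Tuple[Any, List[str]]:
    """Run `NAME=MODULE(arg=value, ...)` lines in order.

    Each output is stored under NAME so later lines can consume it, and
    every step is logged so the trace can serve as a readable rationale.
    """
    state: Dict[str, Any] = {"IMAGE": image}
    trace: List[str] = []
    for line in program.strip().splitlines():
        line = line.strip()
        stmt = ast.parse(line).body[0]
        if not (
            isinstance(stmt, ast.Assign)
            and isinstance(stmt.targets[0], ast.Name)
            and isinstance(stmt.value, ast.Call)
            and isinstance(stmt.value.func, ast.Name)
        ):
            raise ValueError(f"unsupported program line: {line!r}")
        module = MODULES[stmt.value.func.id]
        kwargs: Dict[str, Any] = {}
        for kw in stmt.value.keywords:
            if isinstance(kw.value, ast.Name):
                # A bare name refers to an intermediate output from an earlier line.
                kwargs[kw.arg] = state[kw.value.id]
            else:
                # Anything else must be a literal such as 'dog' or 3.
                kwargs[kw.arg] = ast.literal_eval(kw.value)
        value = module(**kwargs)
        state[stmt.targets[0].id] = value
        trace.append(f"{line} -> {value!r}")
    return state["FINAL_RESULT"], trace


# Usage: "How many dogs and cats are there in total?"
PROGRAM = """
BOX0=LOC(image=IMAGE,category='dog')
COUNT0=COUNT(box=BOX0)
BOX1=LOC(image=IMAGE,category='cat')
COUNT1=COUNT(box=BOX1)
ANSWER0=ADD(a=COUNT0,b=COUNT1)
FINAL_RESULT=RESULT(var=ANSWER0)
"""

answer, rationale = execute(PROGRAM, ["dog", "cat", "dog", "tree"])
for step in rationale:
    print(step)
print(answer)  # 3
```

Parsing each line with Python's `ast` module instead of passing it to `eval` keeps execution limited to the registered modules, earlier outputs, and literal arguments.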

翻译:我们展示了VISPROG, 这是一种解决复杂和成文的视觉任务的神经同步方法,根据自然语言的指示, VISPROG避免了任何特定任务培训的需要, 相反,它使用大型语言模型的内流学习能力来生成像Python一样的模块程序,然后执行这些模块程序以获得解决方案和全面、可解释的理由。 生成的每个程序的每一行都可能引用几种现成的计算机视觉模型、图像处理程序或双眼功能中的一个来产生中间输出,而中间输出可能由程序随后部分消耗。 我们展示了VISPROG在四种不同任务上的灵活性,即成像对、事实知识对象标记和语言制导图像编辑方面的零光推论。 我们相信,像VISPROG这样的神经同步方法是一个令人兴奋的渠道,可以方便和有效地扩大AI系统的范围,为人们可能希望完成的复杂任务的长尾部服务。