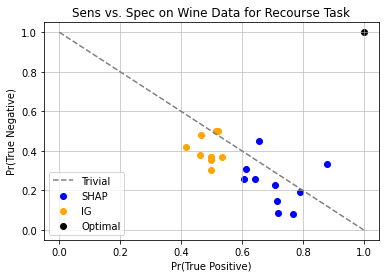

Despite a sea of interpretability methods that can produce plausible explanations, the field has also seen many empirical failure cases of such methods. In light of these results, it remains unclear to practitioners how to use these methods and how to choose between them in a principled way. In this paper, we show that for even moderately rich model classes (a condition easily satisfied by neural networks), any feature attribution method that is complete and linear (for example, Integrated Gradients and SHAP) can provably fail to improve on random guessing for inferring model behaviour. Our results apply to common end-tasks such as identifying local model behaviour, spurious feature identification, and algorithmic recourse. One takeaway from our work is the importance of concretely defining end-tasks. In particular, we show that once such an end-task is defined, a simple and direct approach of repeated model evaluations can outperform many other complex feature attribution methods.
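To make the "repeated model evaluations" idea concrete, here is a minimal sketch in Python. It is not the paper's procedure. It assumes one possible end-task (does nudging a single feature upward, by at most some `delta`, raise the model output?) and answers it by sampling perturbations and re-evaluating the model. The names `toy_model` and `fraction_increasing` are hypothetical, and the toy function merely stands in for a trained network.

```python
import math
import random


def toy_model(x):
    """Hypothetical stand-in for a trained model: nonlinear in x[0], linear in x[1]."""
    return math.sin(3.0 * x[0]) + 0.5 * x[1]


def fraction_increasing(model, x, feature, delta, n_samples=1000, seed=0):
    """Estimate how often nudging one feature up by at most `delta` raises the output.

    Draws `n_samples` step sizes uniformly from (0, delta], re-evaluates the
    model at each perturbed point, and returns the fraction of evaluations
    whose output exceeds model(x).
    """
    rng = random.Random(seed)
    base = model(x)
    hits = 0
    for _ in range(n_samples):
        step = delta * (1.0 - rng.random())  # uniform in (0, delta]
        perturbed = list(x)
        perturbed[feature] += step
        if model(perturbed) > base:
            hits += 1
    return hits / n_samples


if __name__ == "__main__":
    x0 = [0.0, 0.0]
    # Small neighbourhood: the output rises for every sampled step.
    print(fraction_increasing(toy_model, x0, feature=0, delta=0.1))
    # Larger neighbourhood: the sine term turns over, so only part of the
    # sampled steps raise the output.
    print(fraction_increasing(toy_model, x0, feature=0, delta=2.0))
```

In this toy setting the answer depends on how the end-task is specified: within `delta=0.1` every sampled step on feature 0 raises the output, while within `delta=2.0` only roughly half do. The neighbourhood size has to be fixed as part of the end-task before the question has a definite answer.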
