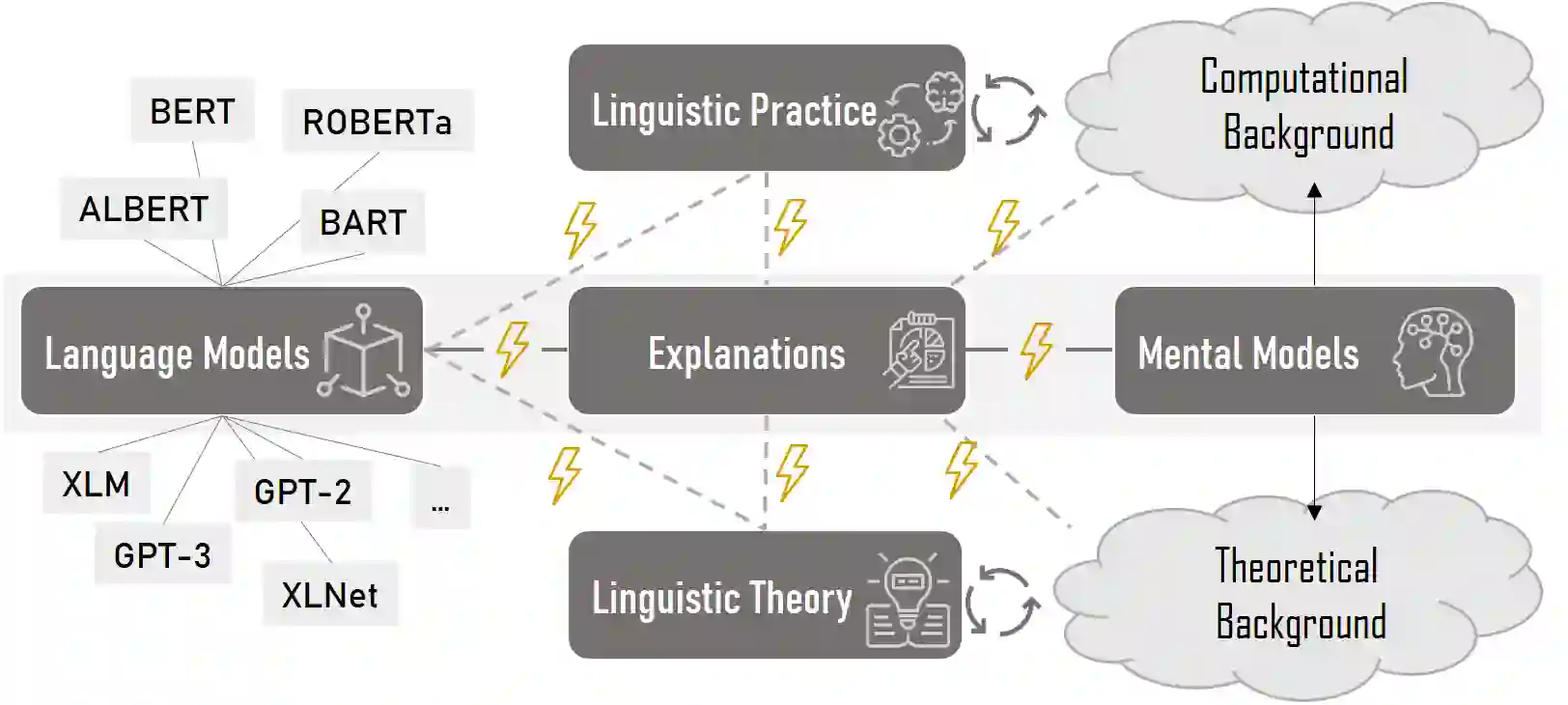

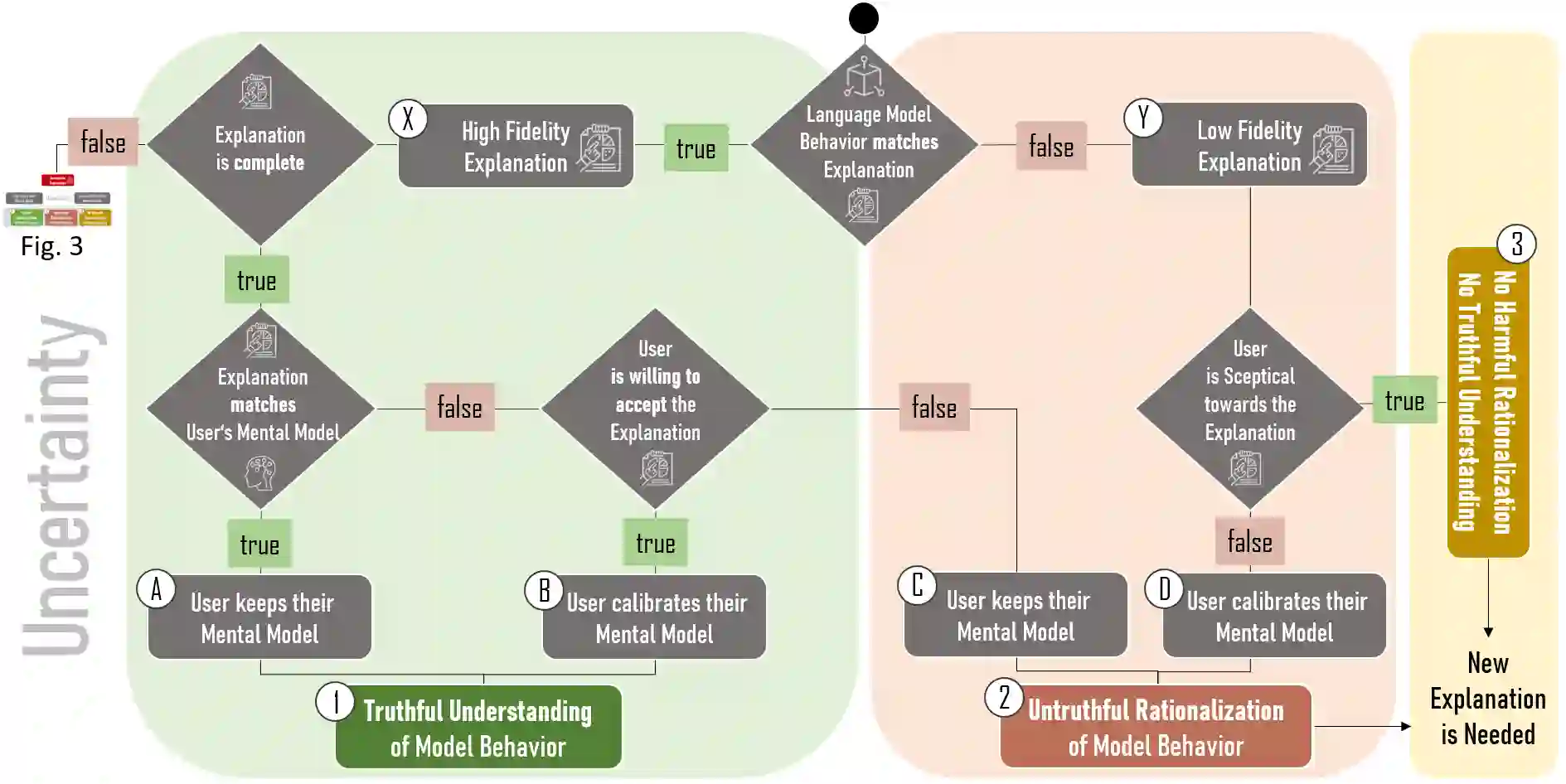

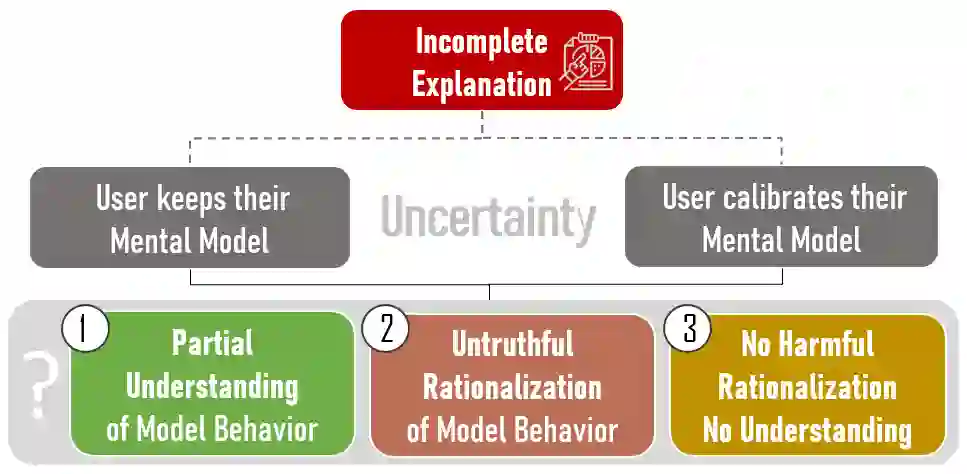

Language models learn and represent language differently from humans; they learn the form and not the meaning. Thus, to assess the success of language model explainability, we need to consider the impact of its divergence from a user's mental model of language. In this position paper, we argue that in order to avoid harmful rationalization and achieve truthful understanding of language models, explanation processes must satisfy three main conditions: (1) explanations have to truthfully represent the model behavior, i.e., have high fidelity; (2) explanations must be complete, as missing information distorts the truth; and (3) explanations have to take the user's mental model into account, progressively verifying a person's knowledge and adapting their understanding. We introduce a decision tree model to showcase potential reasons why current explanations fail to reach their objectives. We further emphasize the need for human-centered design to explain the model from multiple perspectives, progressively adapting explanations to changing user expectations.
