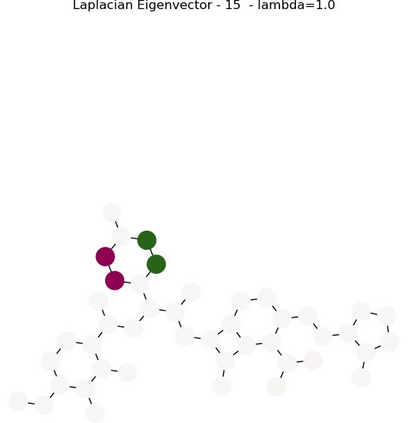

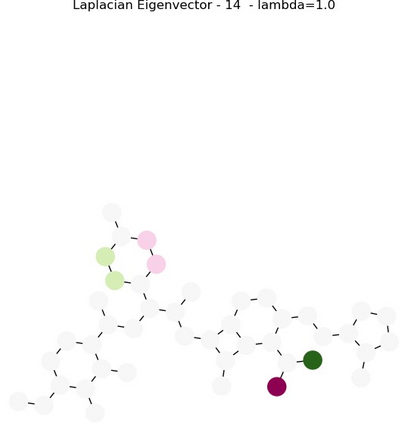

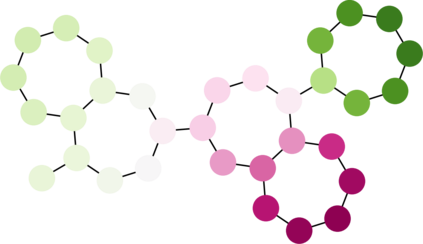

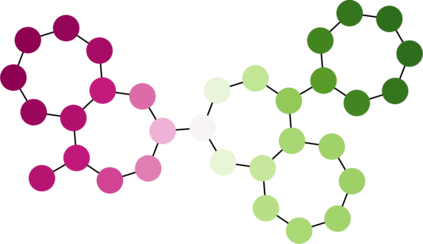

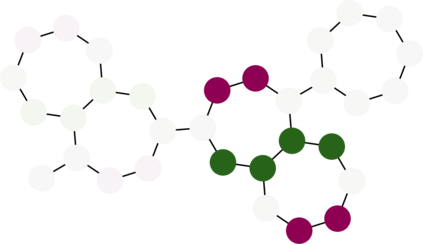

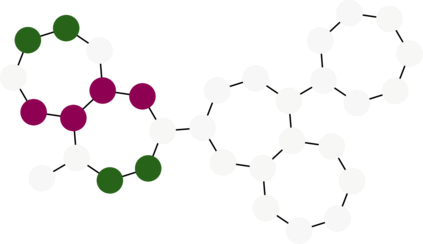

In recent years, the Transformer architecture has proven very successful in sequence processing, but its application to other data structures, such as graphs, has remained limited because positions are difficult to define properly. Here, we present the $\textit{Spectral Attention Network}$ (SAN), which uses a learned positional encoding (LPE) that can take advantage of the full Laplacian spectrum to learn the position of each node in a given graph. This LPE is then added to the node features of the graph and passed to a fully-connected Transformer. By leveraging the full spectrum of the Laplacian, our model is theoretically powerful in distinguishing graphs, and can better detect similar sub-structures from their resonance. Further, by fully connecting the graph, the Transformer does not suffer from over-squashing, an information bottleneck of most GNNs, and enables better modeling of physical phenomena such as heat transfer and electric interaction. When tested empirically on a set of four standard datasets, our model performs on par with or better than state-of-the-art GNNs, and outperforms any attention-based model by a wide margin, becoming the first fully-connected architecture to perform well on graph benchmarks.
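To make the notion of a "Laplacian spectrum" concrete, the following minimal sketch computes the eigenvalues and eigenvectors of the combinatorial Laplacian $L = D - A$ for a small path graph and pairs each node with its entry in every eigenvector. It is not the learned positional encoding itself: the abstract does not specify how the LPE is learned, so the sketch only shows the kind of raw spectral input such an encoder could consume. The function names, the choice of the unnormalized Laplacian, and the `(n, n, 2)` layout are assumptions made for illustration. Note that eigenvectors are only defined up to sign, which any downstream encoder has to tolerate.

```python
import numpy as np


def laplacian_spectrum(adjacency):
    """Eigen-decompose the combinatorial Laplacian L = D - A of an undirected graph.

    Returns eigenvalues in ascending order and the matching eigenvectors
    as columns (eigenvectors[j, i] is node j's entry in eigenvector i).
    """
    A = np.asarray(adjacency, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    # eigh is the solver for symmetric matrices; eigenvalues come back sorted.
    eigenvalues, eigenvectors = np.linalg.eigh(L)
    return eigenvalues, eigenvectors


def raw_positional_inputs(adjacency):
    """Hypothetical helper: pair every node with the full spectrum.

    Output shape is (n, n, 2): entry [j, i] holds
    (eigenvalue i, node j's component of eigenvector i).
    """
    eigenvalues, eigenvectors = laplacian_spectrum(adjacency)
    n = eigenvalues.shape[0]
    lam = np.broadcast_to(eigenvalues, (n, n))  # every row repeats the eigenvalues
    return np.stack([lam, eigenvectors], axis=-1)


# Path graph on four nodes: 0 - 1 - 2 - 3
path = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]

eigenvalues, eigenvectors = laplacian_spectrum(path)
pe_inputs = raw_positional_inputs(path)
```

For a connected graph the smallest eigenvalue is zero and its eigenvector is constant, so the positional signal comes from the remaining eigenpairs. A learned encoder would map each node's row of `pe_inputs` to a fixed-size vector before it is combined with the node features.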
