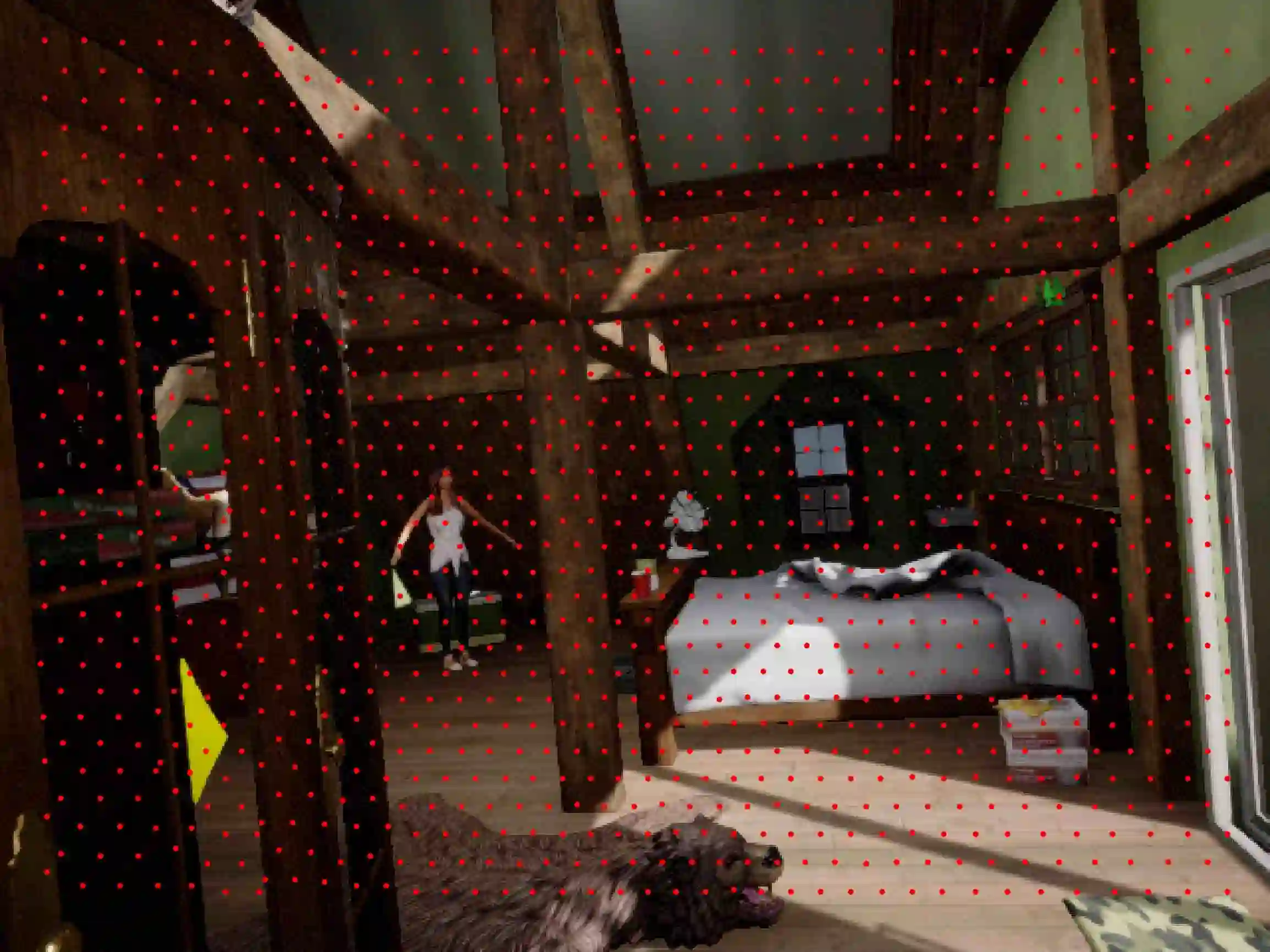

Sparse active illumination enables precise time-of-flight (ToF) depth sensing because it maximizes the signal-to-noise ratio under low power budgets. However, depth completion is required to produce the dense depth maps needed for 3D perception. We address this task under realistic illumination and sensor-resolution constraints by simulating ToF datasets for indoor 3D perception with challenging sparsity levels. We propose a quantized convolutional encoder-decoder network for this task. Our model achieves optimal depth map quality by means of input pre-processing and carefully tuned training with a geometry-preserving loss function. We also achieve a low memory footprint for weights and activations by means of mixed-precision quantization-at-training techniques. The resulting quantized models are comparable to the state of the art in terms of quality, but they require very little GPU time and achieve up to a 14-fold reduction in weight memory size with respect to their floating-point counterparts, with minimal impact on quality metrics.
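The abstract does not specify the illumination pattern, the quantizer, or the bit widths, so the following Python/NumPy sketch only illustrates the three ingredients it names, under stated assumptions. Sparse ToF input is simulated by keeping a dense depth map only on a regular grid of dots (the `stride` parameter and grid layout are hypothetical). Quantization at training time is represented by the forward quantize-dequantize ("fake quantization") step of a symmetric uniform quantizer; real quantization-aware training also passes gradients through the rounding, typically with a straight-through estimator, which this forward-only sketch omits. Weight memory reduction is computed from hypothetical per-layer parameter counts and bit widths. None of the function names come from the paper; this is not the authors' pipeline.

```python
import numpy as np


def simulate_sparse_tof(dense_depth: np.ndarray, stride: int = 8) -> tuple[np.ndarray, np.ndarray]:
    """Keep depth only at a regular grid of illuminated dots (hypothetical pattern).

    Returns the sparse depth map (0 where no measurement exists) and a boolean
    validity mask marking the sampled pixels.
    """
    if stride < 1:
        raise ValueError("stride must be >= 1")
    mask = np.zeros_like(dense_depth, dtype=bool)
    # One sample per stride x stride cell, placed at the cell centre.
    mask[stride // 2::stride, stride // 2::stride] = True
    sparse = np.where(mask, dense_depth, 0.0)
    return sparse, mask


def fake_quantize(w: np.ndarray, bits: int) -> np.ndarray:
    """Symmetric uniform quantize-then-dequantize (forward pass of QAT only)."""
    if bits < 2:
        raise ValueError("symmetric quantizer needs at least 2 bits")
    qmax = 2 ** (bits - 1) - 1          # e.g. 7 for 4-bit weights
    max_abs = np.max(np.abs(w))
    if max_abs == 0:
        return w.copy()                 # all-zero tensor: nothing to quantize
    scale = max_abs / qmax              # per-tensor scale from the largest magnitude
    q = np.clip(np.round(w / scale), -qmax, qmax)  # integer levels
    return q * scale                    # back to float for the next layer


def weight_memory_ratio(param_counts, bits_per_layer, float_bits: int = 32) -> float:
    """Floating-point weight storage divided by mixed-precision weight storage."""
    if len(param_counts) != len(bits_per_layer):
        raise ValueError("one bit width per layer is required")
    fp_bits = float_bits * sum(param_counts)
    q_bits = sum(n * b for n, b in zip(param_counts, bits_per_layer))
    return fp_bits / q_bits


if __name__ == "__main__":
    depth = np.full((64, 64), 2.5)      # synthetic flat scene at 2.5 m
    sparse, mask = simulate_sparse_tof(depth, stride=8)
    print(int(mask.sum()), float(mask.mean()))  # 64 0.015625

    w = np.array([-1.0, -0.3, 0.0, 0.25, 1.0])
    print(fake_quantize(w, bits=4))     # values snapped to multiples of 1/7

    # Two hypothetical layers: 1000 weights at 2 bits, 1000 weights at 4 bits.
    print(round(weight_memory_ratio([1000, 1000], [2, 4]), 2))  # 10.67
```

As a rough reading of the reported figure, if the floating-point baseline is 32-bit, a 14-fold weight memory reduction corresponds to an average of about 32 / 14 ≈ 2.3 bits per weight; the per-layer bit widths actually used are not stated in this abstract.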
