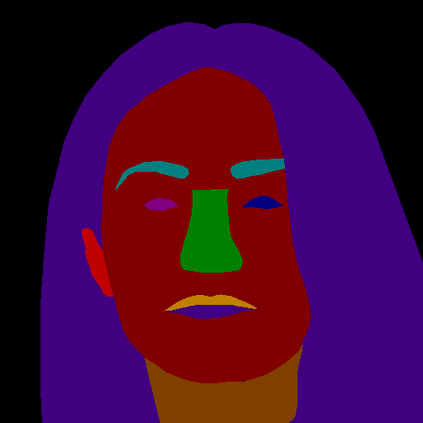

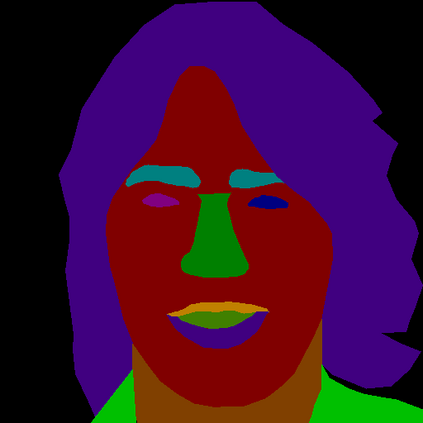

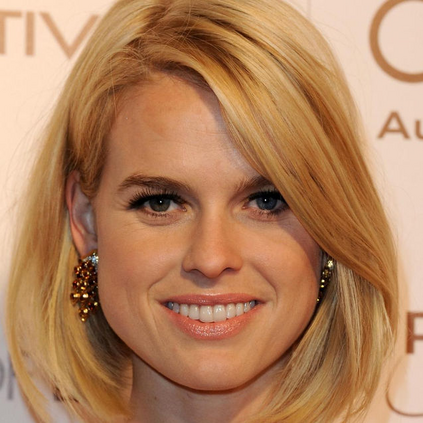

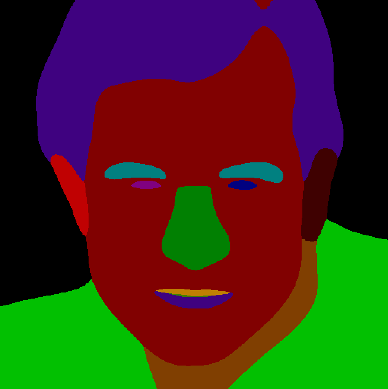

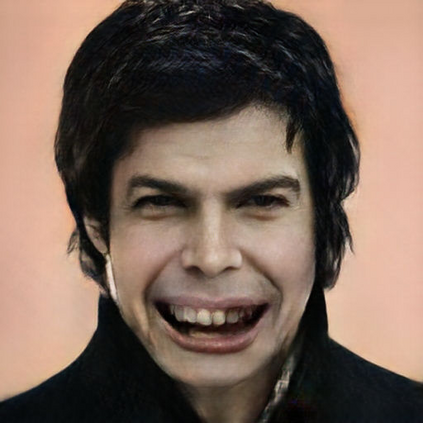

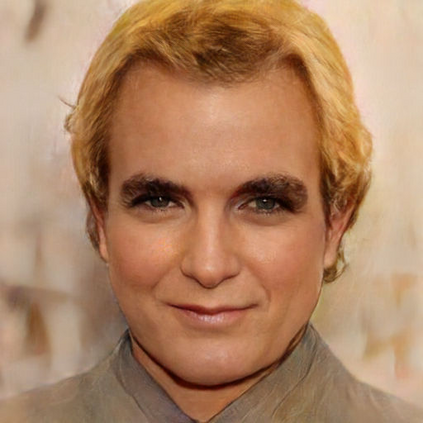

Facial image manipulation has made great progress in recent years. However, previous methods either operate on a predefined set of face attributes or leave users little freedom to manipulate images interactively. To overcome these drawbacks, we propose a novel framework termed MaskGAN, enabling diverse and interactive face manipulation. Our key insight is that semantic masks serve as a suitable intermediate representation for flexible face manipulation with fidelity preservation. MaskGAN has two main components: 1) Dense Mapping Network, and 2) Editing Behavior Simulated Training. Specifically, Dense Mapping Network learns a style mapping between a free-form, user-modified mask and a target image, enabling diverse generation results. Editing Behavior Simulated Training models the user's editing behavior on the source mask, making the overall framework more robust to various manipulated inputs. To facilitate extensive studies, we construct a large-scale, high-resolution face dataset with fine-grained mask annotations, named CelebAMask-HQ. MaskGAN is comprehensively evaluated on two challenging tasks, attribute transfer and style copy, and demonstrates superior performance over other state-of-the-art methods. The code, models, and dataset are available at <https://github.com/switchablenorms/CelebAMask-HQ>.
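The abstract does not say how user edits on the source mask are simulated. As a rough illustration of the general idea of perturbing a semantic mask the way a user might, the sketch below enlarges one labelled region of a toy mask by a random number of pixels. This is an assumption made for illustration, not MaskGAN's actual Editing Behavior Simulated Training procedure. The names `dilate_label` and `simulate_edit` and the label ids are hypothetical.

```python
import random
from typing import List, Sequence

Mask = List[List[int]]  # semantic mask: one integer class id per pixel


def dilate_label(mask: Mask, label: int, iterations: int = 1) -> Mask:
    """Grow the region(s) with class id `label` by `iterations` pixels.

    Uses a 4-neighbourhood: any pixel directly above, below, left or right
    of the region is relabelled as `label`. The input mask is not modified.
    """
    height, width = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    for _ in range(iterations):
        # Read from a snapshot so each pass grows the region by exactly one pixel.
        snapshot = [row[:] for row in out]
        for y in range(height):
            for x in range(width):
                if snapshot[y][x] == label:
                    continue
                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width and snapshot[ny][nx] == label:
                        out[y][x] = label
                        break
    return out


def simulate_edit(mask: Mask, labels: Sequence[int], rng: random.Random,
                  max_iterations: int = 2) -> Mask:
    """Hypothetical 'user edit': enlarge one randomly chosen facial component."""
    label = rng.choice(labels)
    return dilate_label(mask, label, rng.randint(1, max_iterations))


# Toy 5x5 mask with hypothetical ids: 0 = background, 1 = skin, 2 = mouth.
source = [
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 1],
    [1, 1, 2, 1, 1],
    [1, 1, 1, 1, 1],
    [0, 1, 1, 1, 0],
]
edited = simulate_edit(source, labels=[2], rng=random.Random(0))
```

Training on pairs such as (`edited` mask, target image) rather than only on clean source masks is one way to expose a generator to imperfect, user-style inputs; the paper's own simulation scheme may work quite differently.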
