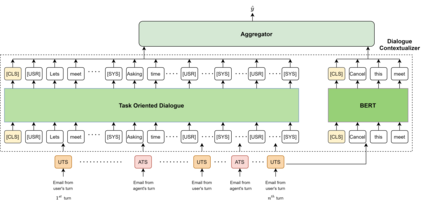

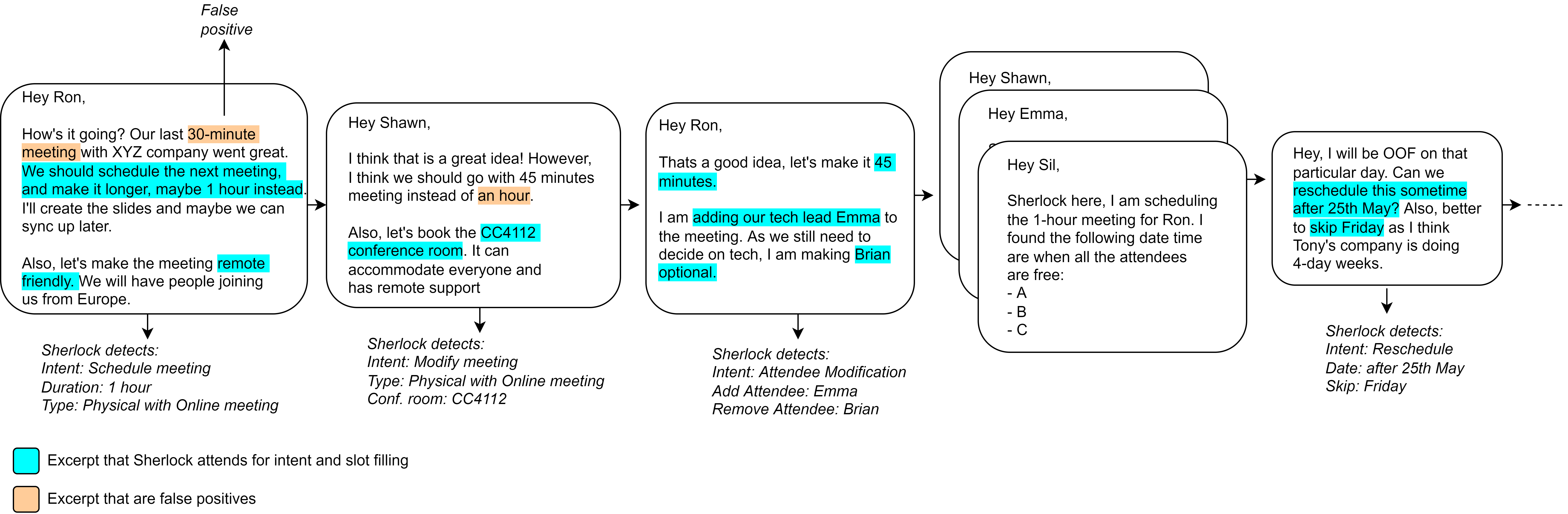

Intent detection is a key part of the Natural Language Understanding (NLU) system of any conversational assistant. Detecting the correct intent is essential yet difficult in email conversations, where multiple directives and intents are present. In such settings, conversation context can become a key factor in disambiguating the user's request to the assistant. One prominent way of incorporating context is to model the past conversation history, as task-oriented dialogue models do. However, the long-form nature of email conversations prevents the latest advances in task-oriented dialogue models from being applied directly. In this paper, we therefore propose EMToD, an effective transfer learning framework that allows the latest developments in dialogue models to be adapted to long-form conversations. We show that EMToD improves intent detection performance on task-oriented email conversations by 45% over pre-trained language models and by 30% over pre-trained dialogue models. Additionally, the modular nature of the framework allows future developments in both pre-trained language models and task-oriented dialogue models to be plugged in directly.
