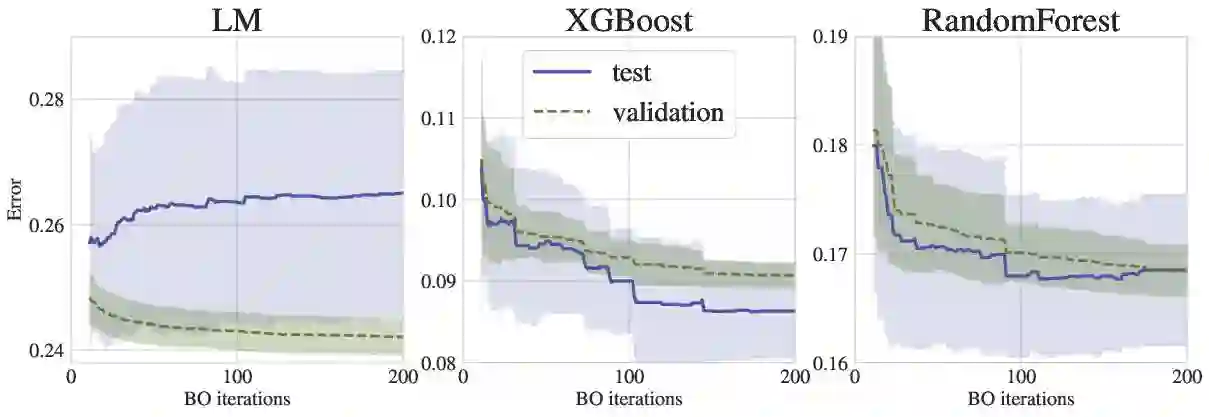

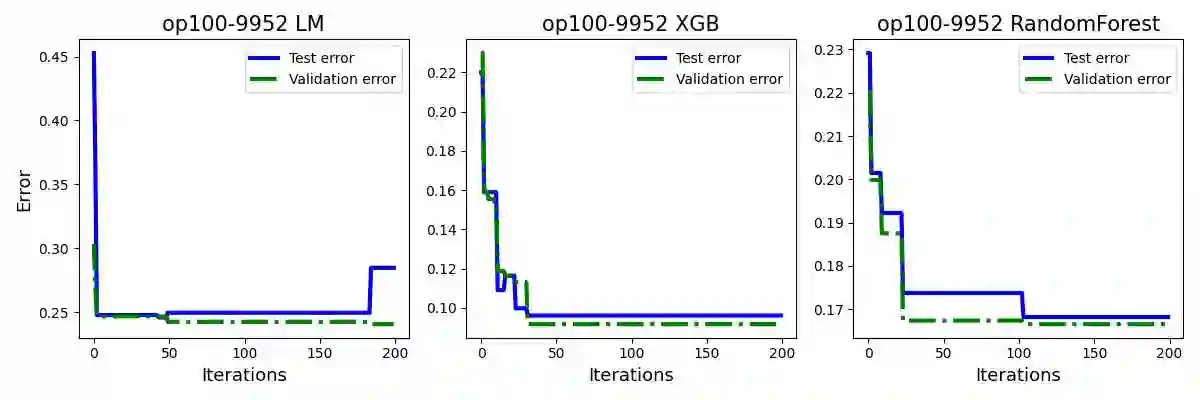

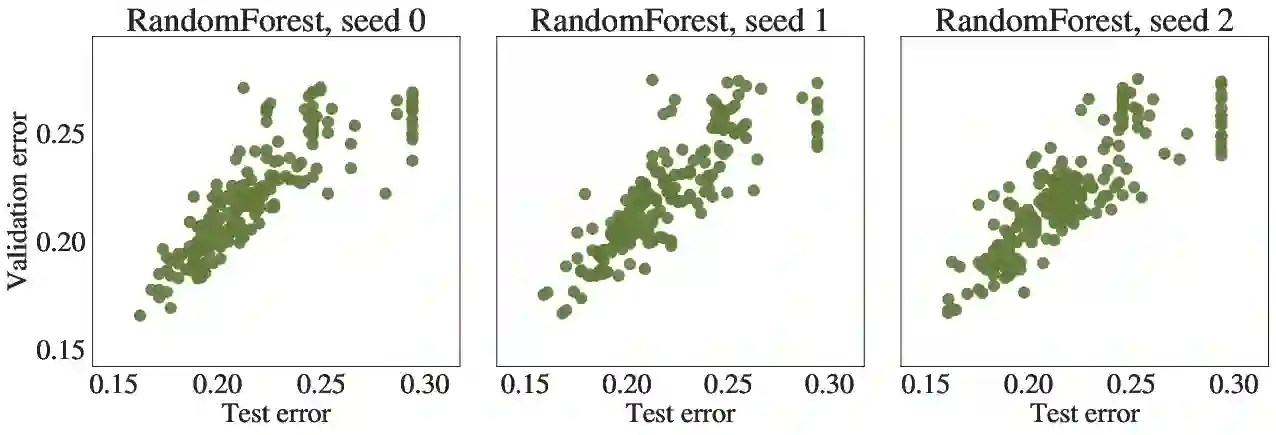

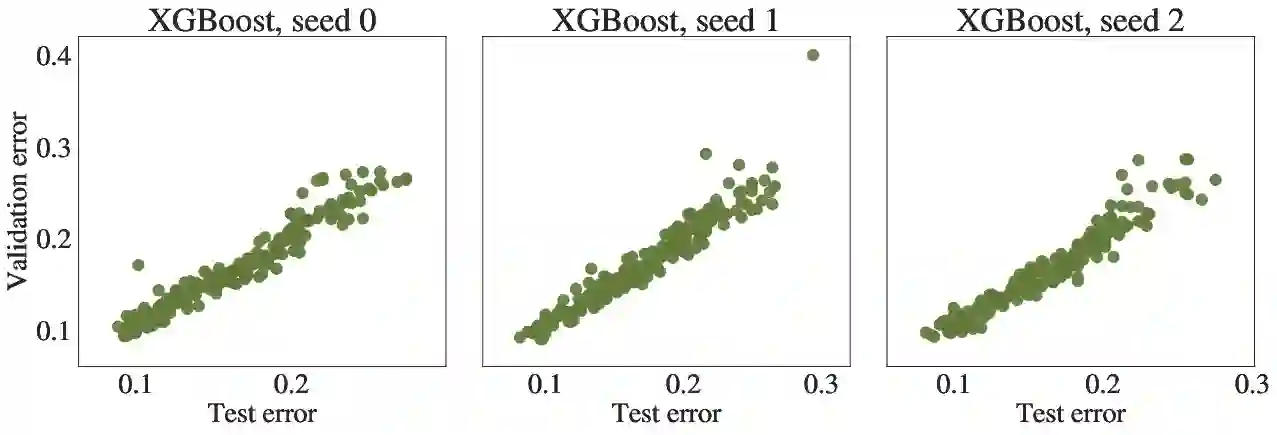

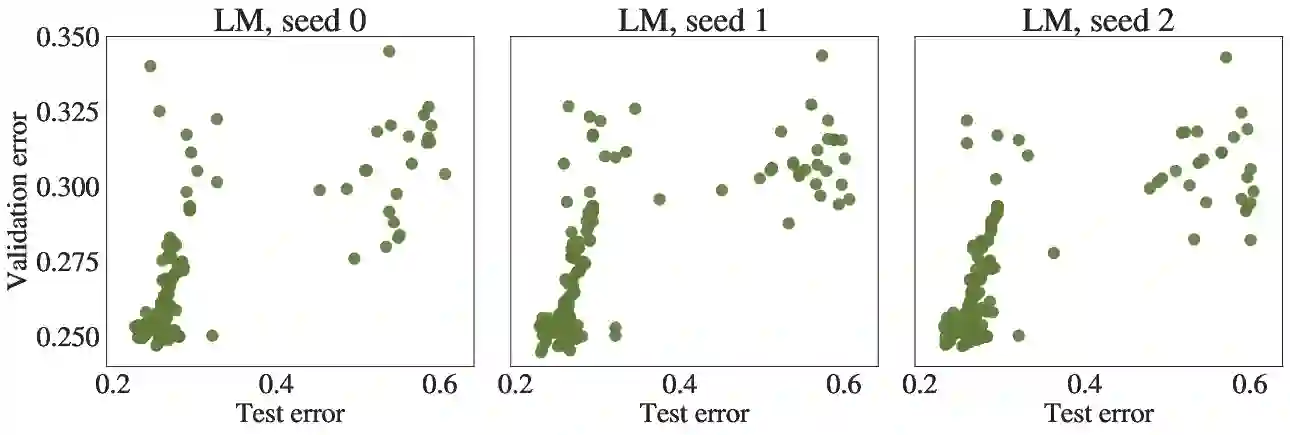

Tuning machine learning models with Bayesian optimization (BO) is a successful strategy for finding good hyperparameters. BO defines an iterative procedure in which a cross-validated metric is evaluated at promising hyperparameter configurations. In practice, however, an improvement in the validation metric may not translate into better predictive performance on a test set, especially when tuning models trained on small datasets. In other words, contrary to conventional wisdom, BO can overfit. In this paper, we carry out the first systematic investigation of overfitting in BO and demonstrate that this issue is serious, yet often overlooked in practice. We propose a novel criterion for early stopping BO, which aims to maintain solution quality while avoiding the unnecessary iterations that can lead to overfitting. Experiments on real-world hyperparameter optimization problems show that our approach effectively meets these goals and is more adaptive than the baselines.
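The iterative BO loop with an early-stopping check can be sketched as below. This is a minimal, self-contained illustration under stated assumptions, not the paper's method. The "cross-validated metric" is a hypothetical noisy function `cv_score` of a single hyperparameter in [0, 1], and `true_score` is a hypothetical stand-in for test performance. The surrogate is a Gaussian process with a fixed RBF kernel (length scale 0.2, diagonal noise 1e-3) and expected improvement (EI) as the acquisition function, maximized over a 201-point grid. The stopping rule (stop once the largest EI falls below `ei_tol`) is a simple stand-in and is **not** the criterion proposed in the paper. All names here (`bayes_opt`, `fit_gp`, `ei_tol`, and so on) are illustrative.

```python
import math
import random

def rbf(a, b, length=0.2):
    """Squared-exponential kernel, unit amplitude; the length scale is an assumption."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def cholesky(K):
    """Lower-triangular L with K = L L^T (K symmetric positive definite)."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(K[i][i] - s) if i == j else (K[i][j] - s) / L[j][j]
    return L

def forward(L, b):
    """Solve L x = b for lower-triangular L."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def backward(L, b):
    """Solve L^T x = b for lower-triangular L."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def fit_gp(X, y, noise=1e-3):
    """GP regression on centred scores; `noise` is added to the kernel diagonal."""
    y_mean = sum(y) / len(y)
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = backward(L, forward(L, [v - y_mean for v in y]))  # K^{-1} (y - mean)
    return X, L, alpha, y_mean

def predict(model, x):
    """Posterior mean and standard deviation of the GP at x."""
    X, L, alpha, y_mean = model
    k = [rbf(x, a) for a in X]
    mean = y_mean + sum(ki * ai for ki, ai in zip(k, alpha))
    v = forward(L, k)
    var = max(1.0 - sum(vi * vi for vi in v), 1e-12)  # rbf(x, x) == 1
    return mean, math.sqrt(var)

def expected_improvement(mu, sigma, best):
    """Expected improvement over the best observed score (maximisation)."""
    z = (mu - best) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mu - best) * cdf + sigma * pdf

def bayes_opt(objective, n_init=3, max_iters=30, ei_tol=1e-3, seed=0):
    """Maximise `objective` over [0, 1]; stop early once the best EI < ei_tol.

    The EI threshold is a simple stand-in rule, NOT the paper's criterion.
    Returns (configs, scores, number of BO iterations actually run).
    """
    rng = random.Random(seed)
    grid = [i / 200 for i in range(201)]  # candidate hyperparameter values
    X = [rng.random() for _ in range(n_init)]
    y = [objective(x) for x in X]
    for it in range(max_iters):
        model = fit_gp(X, y)
        best = max(y)
        ei, x_next = max(
            (expected_improvement(*predict(model, x), best), x) for x in grid
        )
        if ei < ei_tol:
            return X, y, it  # early stop: little expected gain left
        X.append(x_next)
        y.append(objective(x_next))
    return X, y, max_iters

# Hypothetical problem: true_score plays the role of test performance,
# cv_score is a noisy cross-validated estimate of it.
def true_score(x):
    return 1.0 - 4.0 * (x - 0.3) ** 2

noise_rng = random.Random(1)

def cv_score(x):
    return true_score(x) + noise_rng.gauss(0.0, 0.02)

X, y, iters = bayes_opt(cv_score)
i_best = max(range(len(y)), key=y.__getitem__)
print(f"BO iterations: {iters}, best CV score: {y[i_best]:.3f}, "
      f"true score of that config: {true_score(X[i_best]):.3f}")
```

Because `cv_score` is noisy, the largest observed score tends to be an optimistic estimate of the selected configuration's `true_score`. This selection effect is one simple mechanism behind the validation/test gap described above. The candidate grid keeps acquisition maximization trivial in one dimension. Practical BO tools typically optimize the acquisition function numerically and also fit the kernel hyperparameters to the data.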

翻译:以巴耶斯优化(BO) 测试机器学习模型是寻找高超参数的成功战略。 BO 定义了一种迭代程序,在这种程序下,对有希望的超光度计进行交叉有效的衡量标准评估。然而,在实践中,改进验证指标可能不会在测试集上产生更好的预测性能,特别是当对小数据集培训模型进行调整时。换句话说,与传统智慧不同,BO可以过度使用。在本文中,我们首次系统调查了在BO的过度装配问题,并表明这一问题是严重的,但在实践中常常被忽视。我们提出了尽早停止BO的新标准,目的是保持解决方案的质量,同时避免可能导致过度装配的不必要的迭代。在现实世界超光谱优化问题上的实验表明,我们的方法有效地达到了这些目标,并且比基线更具适应性。