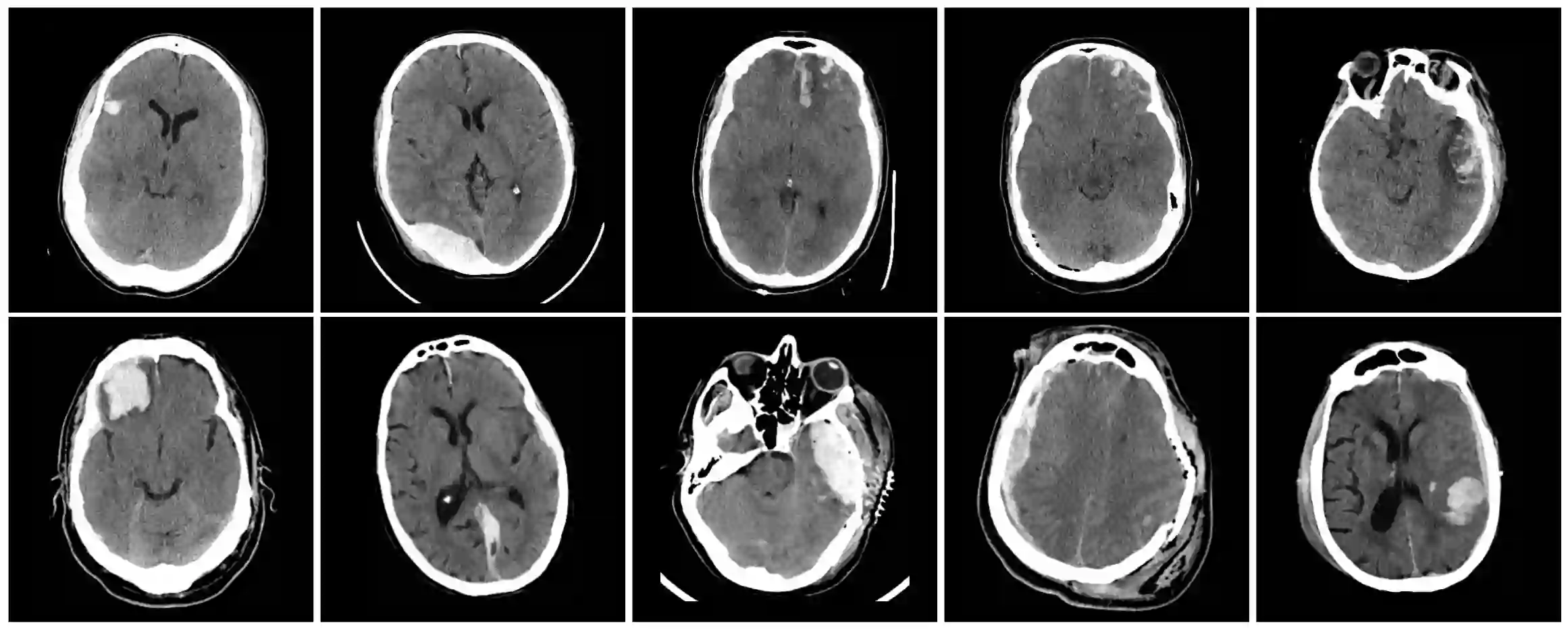

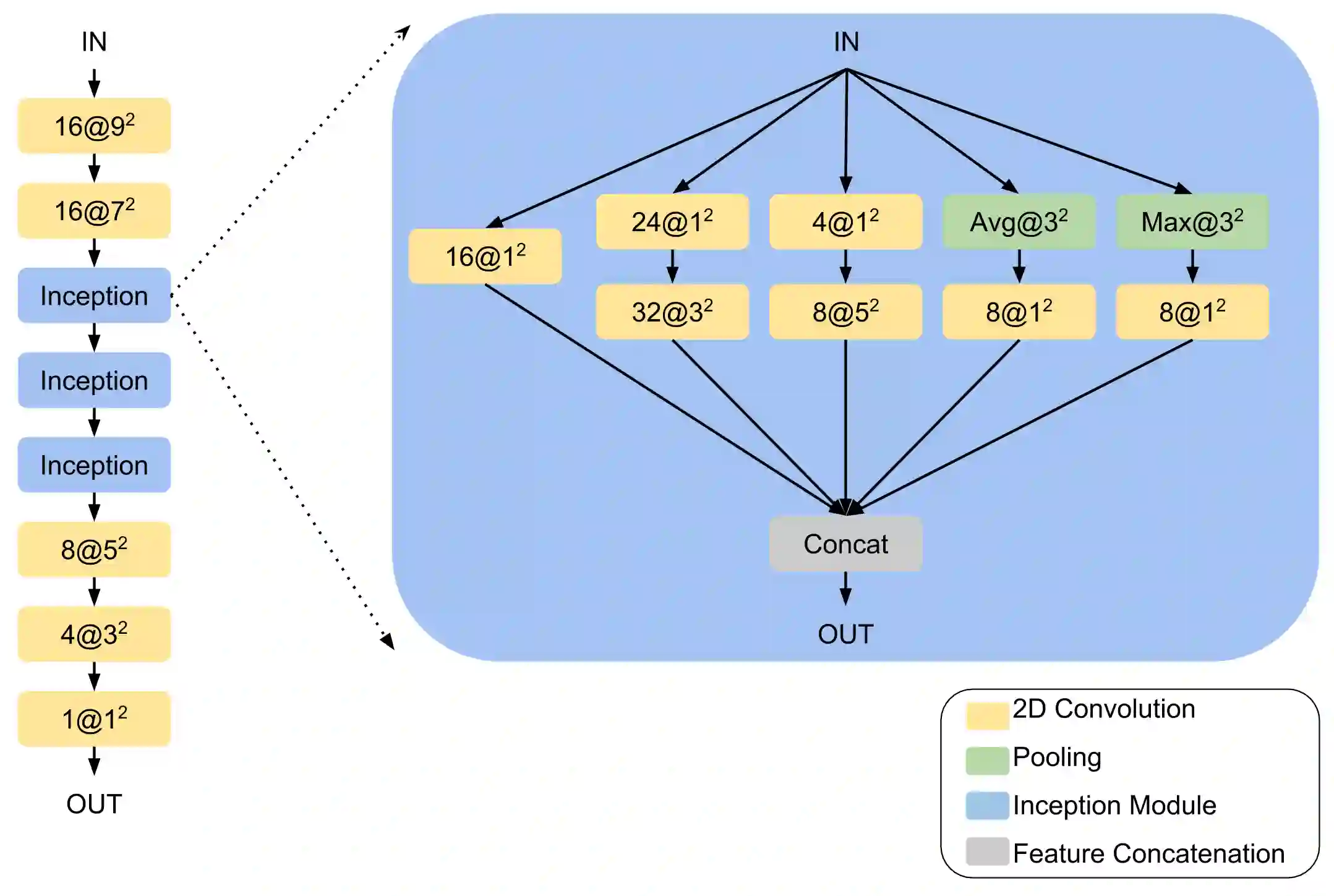

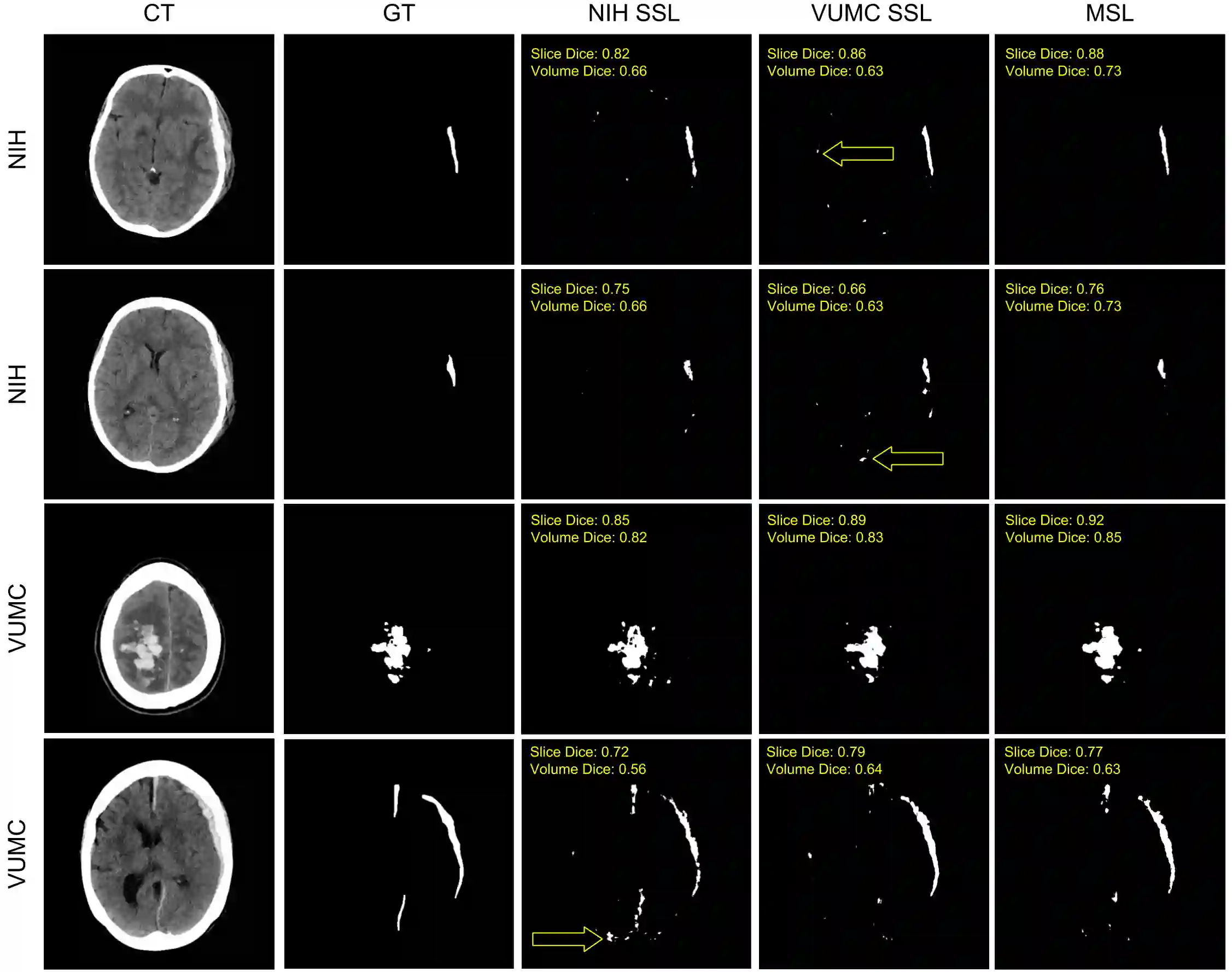

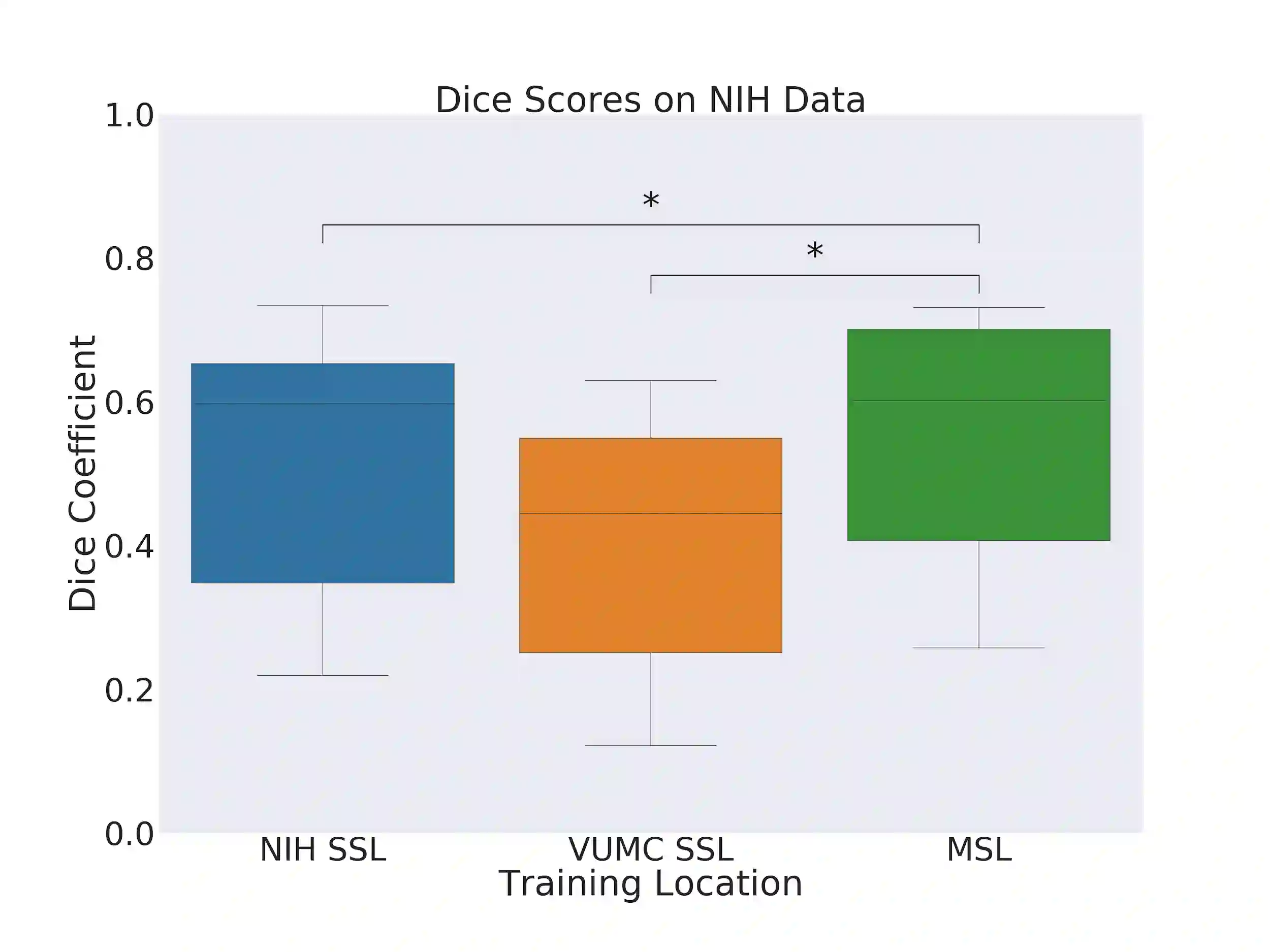

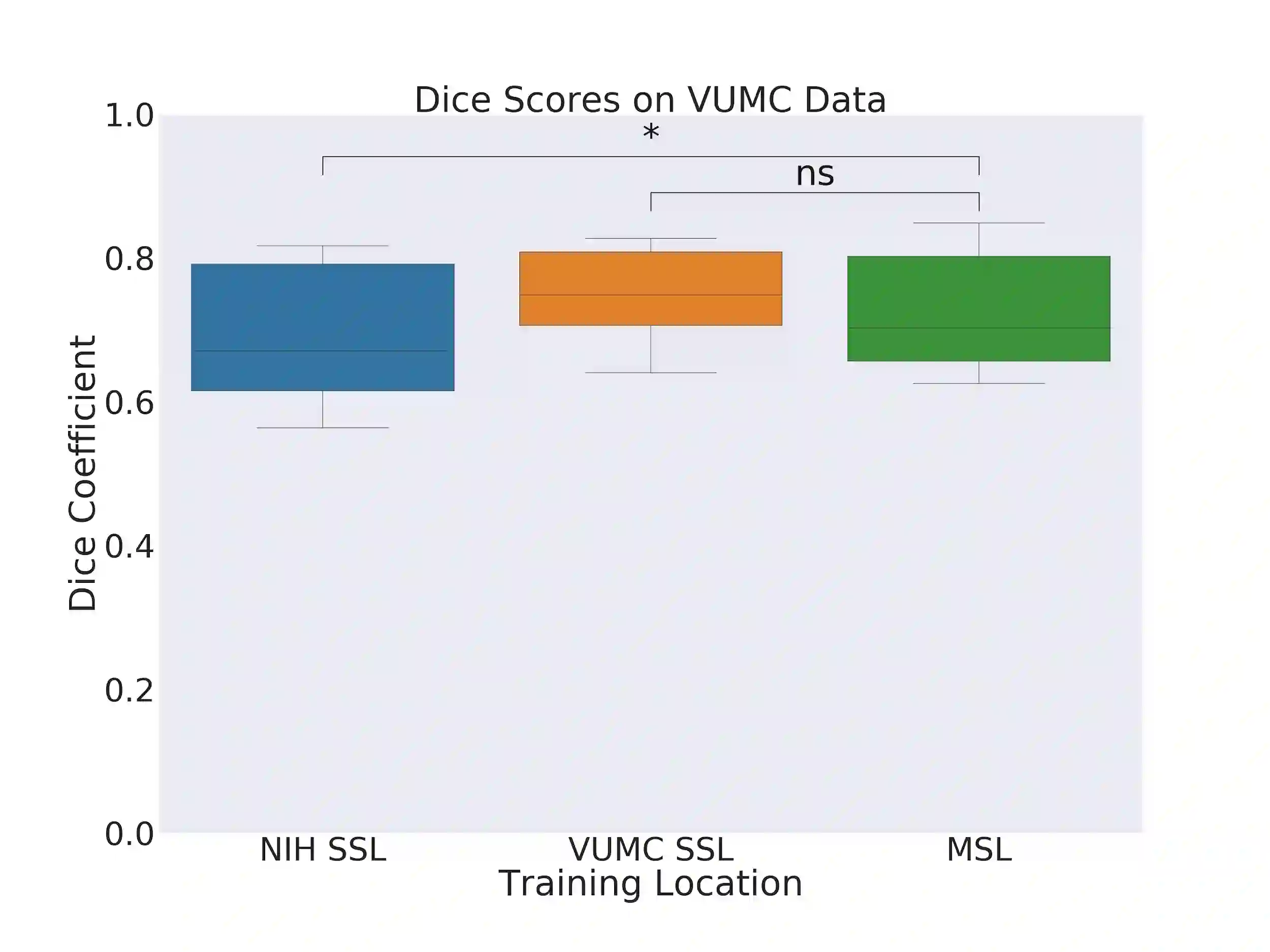

Machine learning models are becoming commonplace in medical imaging, and with these methods comes an ever-increasing need for more data. However, to preserve patient anonymity, it is frequently impractical or prohibited to transfer protected health information (PHI) between institutions. Additionally, due to the nature of some studies, there may be no large public dataset available on which to train models. To address this conundrum, we analyze the efficacy of transferring the model itself, in lieu of data, between different sites. By doing so we accomplish two goals: 1) the model gains access to a larger training dataset than it could otherwise obtain, and 2) the model generalizes better, having trained on data from separate locations. In this paper, we implement multi-site learning with disparate datasets from the National Institutes of Health (NIH) and Vanderbilt University Medical Center (VUMC) without compromising PHI. Three neural networks are trained to convergence on a computed tomography (CT) brain hematoma segmentation task: one only with NIH data, one only with VUMC data, and one multi-site model alternating between NIH and VUMC data. Lesion masks produced by the multi-site model attain an average Dice similarity coefficient of 0.64, and the automatically segmented hematoma volumes correlate with the manually segmented volumes with a Pearson correlation coefficient of 0.87, corresponding to 8% and 5% improvements, respectively, over the single-site model counterparts.
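The two evaluation metrics reported above, together with the idea of alternating between sites, can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names (`dice`, `volume_ml`, `pearson`, `alternate_sites`), the representation of each mask as a set of voxel coordinates, the voxel-volume argument, the empty-mask convention, and the per-batch alternation are all hypothetical choices for illustration, since the abstract does not say how or how often the model switches between NIH and VUMC data.

```python
from math import sqrt
from typing import Iterable, Iterator, Sequence, Set, Tuple

# Hypothetical mask representation: the set of (z, y, x) voxels labeled as hematoma.
Voxel = Tuple[int, int, int]


def dice(pred: Set[Voxel], truth: Set[Voxel]) -> float:
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    denom = len(pred) + len(truth)
    if denom == 0:
        # Both masks empty: treated as perfect agreement (an assumed convention).
        return 1.0
    return 2.0 * len(pred & truth) / denom


def volume_ml(mask: Set[Voxel], voxel_mm3: float) -> float:
    """Lesion volume in millilitres: voxel count times voxel volume (1 mL = 1000 mm^3)."""
    return len(mask) * voxel_mm3 / 1000.0


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between paired automatic and manual lesion volumes."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(sxx * syy)


def alternate_sites(site_a: Iterable, site_b: Iterable) -> Iterator:
    """Yield batches in the order A, B, A, B, ... and stop when either site runs out.

    Hypothetical per-batch schedule. In a real multi-site setup each site's batches
    stay local and only the model weights are passed between institutions; this
    generator just models the order in which the shared model sees the data.
    """
    for batch_a, batch_b in zip(site_a, site_b):
        yield batch_a
        yield batch_b
```

For example, `dice({(0, 0, 0), (0, 0, 1), (0, 1, 0)}, {(0, 0, 1), (0, 1, 0), (1, 1, 1)})` evaluates 2·2 / (3 + 3) ≈ 0.667, and `pearson` would be applied to the per-scan outputs of `volume_ml` for the automatic and manual masks. Using sets keeps the sketch dependency-free; a practical pipeline would more likely operate on NumPy arrays.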

翻译:在医学成像领域,机器学习模型正在成为常见的医学成像领域,随着这些方法,越来越需要更多的数据。然而,为了保持病人匿名,在各机构之间转移受保护的健康信息(PHI)往往不切实际或被禁止。此外,由于一些研究的性质,可能没有庞大的公共数据集可供培训模型。为了解决这一难题,我们分析以模型本身代替不同地点之间数据转移模型本身的功效。通过这样做,我们实现了两个目标:(1) 模型增加了获得关于它通常无法获得的较大数据集的培训的机会;(2) 模型更加笼统,从不同地点对数据进行了培训。在本文件中,我们与国家卫生研究所(NIH)和Vanderbilt大学医疗中心(VUMC)的不同数据集进行多处学习,而不会损害PHI。 三个神经网络经过培训,可以与计算成的地形模型模型模型(CT)脑血红分解任务(NIH数据,仅与VUMC数据合并,一个多处模型模型,分别与NIH和VUMC平均数段进行。