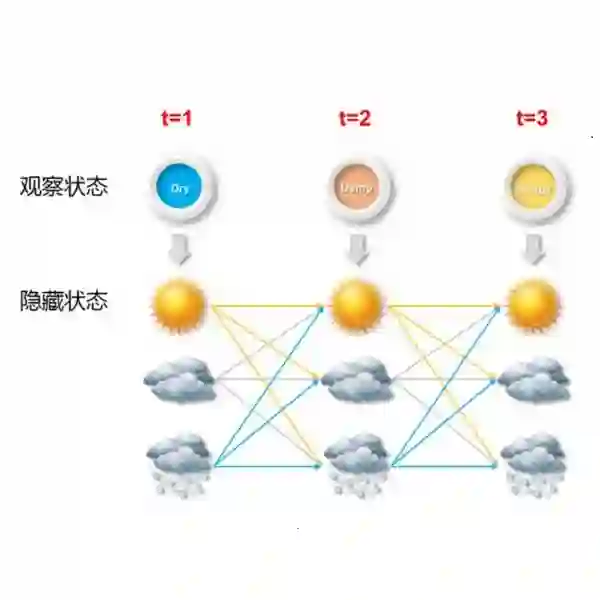

We study the problem of learning a named entity recognition (NER) tagger using noisy labels from multiple weak supervision sources. Though cheap to obtain, the labels from weak supervision sources are often incomplete, inaccurate, and contradictory, making it difficult to learn an accurate NER model. To address this challenge, we propose a conditional hidden Markov model (CHMM), which can effectively infer true labels from multi-source noisy labels in an unsupervised way. CHMM enhances the classic hidden Markov model with the contextual representation power of pre-trained language models. Specifically, CHMM learns token-wise transition and emission probabilities from the BERT embeddings of the input tokens to infer the latent true labels from noisy observations. We further refine CHMM with an alternate-training approach (CHMM-ALT). CHMM-ALT fine-tunes a BERT-NER model with the labels inferred by CHMM, and the BERT-NER model's output is then treated as an additional weak source for training the CHMM in turn. Experiments on four NER benchmarks from various domains show that our method outperforms state-of-the-art weakly supervised NER models by wide margins.
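To make the inference step concrete, the following is a minimal sketch of forward-backward inference in an HMM whose transition and emission matrices differ at every token. It is an illustration under stated assumptions, not the authors' implementation: the function name `posterior_labels` and its argument layout are hypothetical, the per-token matrices are passed in as plain lists (in CHMM they would be predicted from BERT embeddings), and the weak sources are assumed to be conditionally independent given the true label.

```python
def posterior_labels(init, transitions, emissions, observations):
    """Forward-backward for an HMM with token-wise matrices (illustrative).

    init[k]               : P(z_0 = k)
    transitions[t][i][j]  : P(z_{t+1} = j | z_t = i) at token t (length T-1)
    emissions[t][s][k][l] : P(source s outputs label l | z_t = k) at token t
    observations[t][s]    : label index emitted by source s at token t
    Returns gamma[t][k] = P(z_t = k | all observations).
    """
    T, K = len(observations), len(init)

    def normalize(v):
        total = sum(v)
        return [x / total for x in v]

    # Likelihood of all sources' labels at token t under each hidden label.
    # Assumption: sources are conditionally independent given the true label.
    lik = []
    for t in range(T):
        row = []
        for k in range(K):
            p = 1.0
            for s, label in enumerate(observations[t]):
                p *= emissions[t][s][k][label]
            row.append(p)
        lik.append(row)

    # Forward pass, rescaled at each step for numerical stability.
    alpha = [normalize([init[k] * lik[0][k] for k in range(K)])]
    for t in range(1, T):
        A = transitions[t - 1]
        alpha.append(normalize([
            lik[t][j] * sum(alpha[t - 1][i] * A[i][j] for i in range(K))
            for j in range(K)
        ]))

    # Backward pass, also rescaled at each step.
    beta = [[1.0] * K for _ in range(T)]
    for t in range(T - 2, -1, -1):
        A = transitions[t]
        beta[t] = normalize([
            sum(A[i][j] * lik[t + 1][j] * beta[t + 1][j] for j in range(K))
            for i in range(K)
        ])

    # Posterior over the latent true label at each token.
    return [normalize([alpha[t][k] * beta[t][k] for k in range(K)])
            for t in range(T)]
```

Taking the highest-probability label at each token from the returned posteriors gives one way to read off denoised labels. In an alternate-training loop like the one described above, labels of this kind would serve as targets for fine-tuning the BERT-NER model.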
