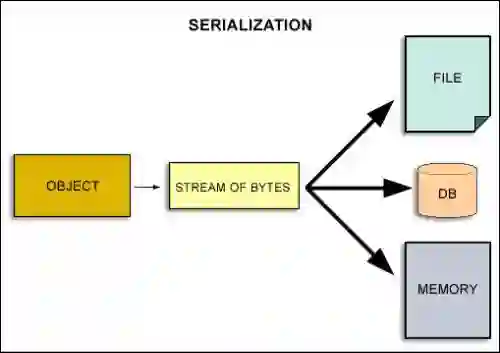

Traditional data storage formats and databases often introduce complexities and inefficiencies that hinder rapid iteration and adaptability. To address these challenges, we introduce ParquetDB, a Python-based database framework that leverages the Parquet file format's optimized columnar storage. ParquetDB offers efficient serialization and deserialization, native support for complex and nested data types, reduced dependency on indexing through predicate pushdown filtering, and enhanced portability due to its file-based storage system. Benchmarks show that ParquetDB outperforms traditional databases like SQLite and MongoDB in managing large volumes of data, especially when using data formats compatible with PyArrow. We validate ParquetDB's practical utility by applying it to the Alexandria 3D Materials Database, efficiently handling approximately 4.8 million complex and nested records. By addressing the inherent limitations of existing data storage systems and continuously evolving to meet future demands, ParquetDB has the potential to significantly streamline data management processes and accelerate research and development in data-driven fields.
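To make the idea of reducing index dependency through predicate pushdown more concrete, the following is a minimal, pure-Python toy model of the general technique. It is not ParquetDB's or Parquet's actual implementation: the names `RowGroup`, `make_row_groups`, and `scan_greater_than` are hypothetical, and the sketch assumes only that data is stored column-wise in row groups that each carry min/max statistics, so that a filter can skip whole groups without any index.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class RowGroup:
    """Toy stand-in for a columnar row group (hypothetical, for illustration only)."""
    columns: Dict[str, list]             # column name -> values for this group
    stats: Dict[str, Tuple[float, float]]  # column name -> (min, max) of numeric columns


def make_row_groups(records: List[dict], group_size: int) -> List[RowGroup]:
    """Split row-oriented records into column-oriented groups with min/max statistics."""
    groups = []
    for start in range(0, len(records), group_size):
        chunk = records[start:start + group_size]
        columns = {key: [row[key] for row in chunk] for key in chunk[0]}
        # Record statistics once at write time, for numeric columns only.
        stats = {
            name: (min(values), max(values))
            for name, values in columns.items()
            if all(isinstance(v, (int, float)) for v in values)
        }
        groups.append(RowGroup(columns, stats))
    return groups


def scan_greater_than(groups, column, threshold, select):
    """Return the selected columns of rows where `column` > `threshold`.

    Row groups whose stored maximum cannot satisfy the predicate are skipped
    without touching their values, and only the requested columns are read
    from the groups that remain.
    """
    results = []
    groups_read = 0
    for group in groups:
        _, col_max = group.stats[column]
        if col_max <= threshold:
            continue  # pruned using statistics alone
        groups_read += 1
        for i, value in enumerate(group.columns[column]):
            if value > threshold:
                results.append({name: group.columns[name][i] for name in select})
    return results, groups_read


# Example data: 1000 records written as 10 row groups of 100 rows each.
records = [{"id": i, "energy": float(i), "formula": f"X{i}"} for i in range(1000)]
groups = make_row_groups(records, group_size=100)
rows, groups_read = scan_greater_than(groups, "energy", 949.5, select=["id", "formula"])
```

In this toy run, only the last of the ten row groups can contain matching values, so the other nine are skipped on their statistics alone. Real Parquet readers apply the same principle using statistics stored in the file metadata.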
