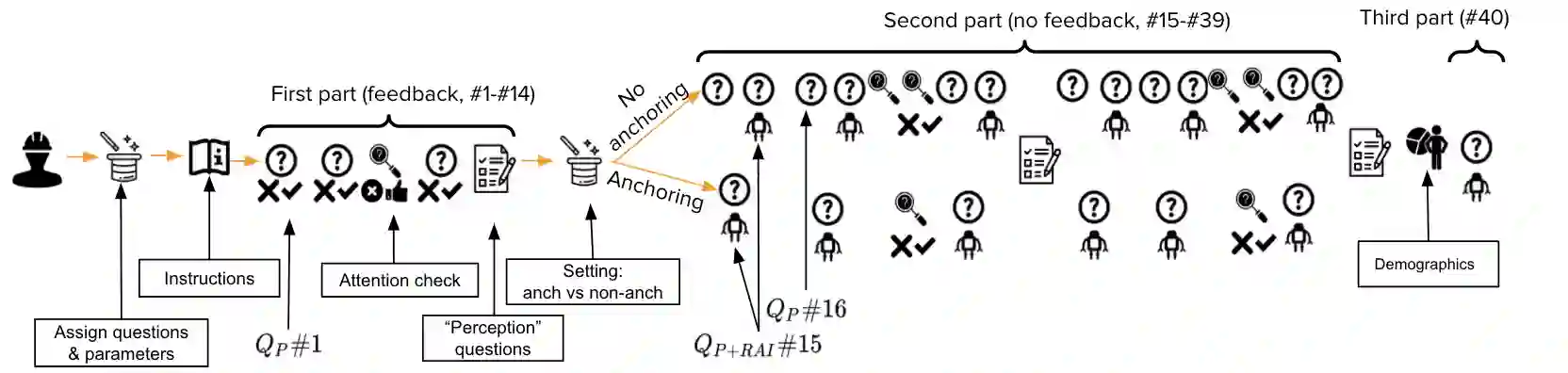

As algorithmic risk assessment instruments (RAIs) are increasingly adopted to assist decision makers, their predictive performance and potential to promote inequity have come under scrutiny. However, while most studies examine these tools in isolation, researchers have come to recognize that assessing their impact requires understanding the behavior of their human interactants. In this paper, building on several recent crowdsourcing studies focused on criminal justice, we conduct a vignette study in which laypersons are tasked with predicting future re-arrests. Our key findings are as follows: (1) participants often predict that an offender will be re-arrested even when they deem the likelihood of re-arrest to be well below 50%; (2) participants do not anchor on the RAI's predictions; (3) the time spent on the survey varies widely across participants, and most cases are assessed in less than 10 seconds; (4) judicial decisions, unlike participants' predictions, depend in part on factors that are orthogonal to the likelihood of re-arrest. These results highlight the influence of several crucial but often overlooked design decisions, and raise concerns about generalizability, when constructing crowdsourcing studies to analyze the impacts of RAIs.
