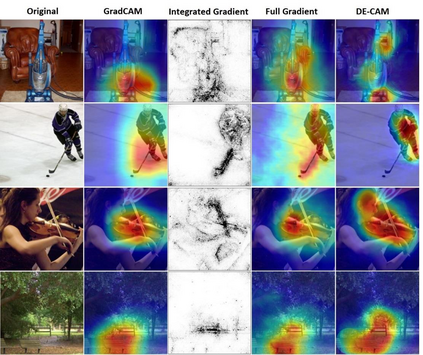

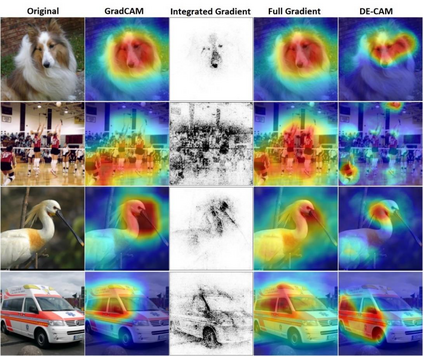

The widespread use of black-box AI models has raised the need for algorithms and methods that explain the decisions these models make. In recent years, the AI research community has become increasingly interested in model explainability as black-box models take over more complicated and challenging tasks. Explainability becomes critical given the dominance of deep learning techniques across a wide range of applications, including but not limited to computer vision. To understand the inference process of deep learning models, many methods that provide human-comprehensible evidence for the decisions of AI models have been developed, the vast majority of which rely on access to the internal architecture and parameters of these models (e.g., the weights of neural networks). We propose a model-agnostic method for generating saliency maps that has access only to the output of the model and does not require additional information such as gradients. We use Differential Evolution (DE) to identify which image pixels are the most influential in a model's decision-making process and produce class activation maps (CAMs) whose quality is comparable to that of CAMs created with model-specific algorithms. The proposed method, DE-CAM, achieves good performance without requiring access to the internal details of the model's architecture, at the cost of higher computational complexity.
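
To make the black-box idea concrete, the following is a minimal sketch of how Differential Evolution could search for a saliency mask using only a model's output score. It is not the DE-CAM algorithm itself: the mask parameterization (one value in [0, 1] per coarse image cell), the fitness function (class score minus a sparsity penalty), the DE/rand/1/bin variant, and all names (`de_saliency`, `score_fn`, `toy_score`, `OBJECT_CELLS`) are assumptions made for illustration. The toy "model" stands in for a real classifier queried on the masked image.

```python
import random


def de_saliency(score_fn, n_cells, pop_size=20, generations=100,
                f=0.5, cr=0.9, sparsity=0.5, seed=0):
    """Evolve a mask in [0, 1]^n_cells with DE/rand/1/bin.

    score_fn(mask) is treated as a black box: it returns the model's
    score for the target class when the input is weighted by the mask.
    The fitness below (score minus area penalty) is an assumed objective.
    """
    rng = random.Random(seed)

    def fitness(mask):
        # High class score is rewarded, large masks are penalised.
        return score_fn(mask) - sparsity * sum(mask) / n_cells

    # Random initial population of candidate masks.
    pop = [[rng.random() for _ in range(n_cells)] for _ in range(pop_size)]
    fit = [fitness(m) for m in pop]

    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals other than the target i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(n_cells)  # guarantees one mutated gene
            trial = []
            for k in range(n_cells):
                if rng.random() < cr or k == j_rand:
                    v = pop[a][k] + f * (pop[b][k] - pop[c][k])
                    trial.append(min(1.0, max(0.0, v)))  # clip to [0, 1]
                else:
                    trial.append(pop[i][k])
            trial_fit = fitness(trial)
            # Greedy selection: keep the trial if it is at least as good.
            if trial_fit >= fit[i]:
                pop[i], fit[i] = trial, trial_fit

    best = max(range(pop_size), key=fit.__getitem__)
    return pop[best]


# Toy stand-in for a classifier on a 4x4 grid (flattened to 16 cells):
# the score depends only on how much of the central 2x2 block is visible.
OBJECT_CELLS = (5, 6, 9, 10)


def toy_score(mask):
    return sum(mask[k] for k in OBJECT_CELLS) / len(OBJECT_CELLS)


mask = de_saliency(toy_score, n_cells=16)
for row in range(4):
    print(" ".join(f"{mask[4 * row + col]:.2f}" for col in range(4)))
```

With a real classifier, `score_fn` would upsample the mask to the image resolution, apply it to the image, and return the predicted probability of the target class. Every fitness evaluation then costs one forward pass, which is where the extra computational cost of a gradient-free approach comes from.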
