As Machine Learning (ML) becomes pervasive in real-world systems, the need for models to be understandable has increased. We focus on interpretability, noting that models often need to be constrained in size to be considered interpretable, e.g., a decision tree of depth 5 is easier to interpret than one of depth 50. But smaller models also tend to have high bias. This suggests a trade-off between interpretability and accuracy. We propose a model-agnostic technique to minimize this trade-off. Our strategy is to first learn a powerful, possibly black-box, probabilistic model, referred to as the oracle, on the training data. Uncertainty in the oracle's predictions is used to learn a sampling distribution for the training data. The interpretable model is then trained on a sample obtained using this distribution. We demonstrate that such a model is often significantly more accurate than one trained on the original data. Determining the sampling strategy is formulated as an optimization problem. Our solution to this problem possesses the following key favorable properties: (1) the number of optimization variables is independent of the dimensionality of the data: a fixed number of seven variables is used; (2) our technique is model-agnostic, in that both the interpretable model and the oracle may belong to arbitrary model families. We present results on multiple real-world datasets, using Linear Probability Models and Decision Trees as interpretable models, with Gradient Boosted Model and Random Forest as oracles. We observe significant relative improvements in the F1-score in most cases, occasionally seeing improvements greater than 100%. Additionally, we discuss an interesting application of our technique where a Gated Recurrent Unit network is used to improve the sequence classification accuracy of a Decision Tree that uses character n-grams as features.
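The sampling step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the margin-based uncertainty score, the single fixed Beta density used to weight points, and the names `margin_uncertainty`, `beta_pdf`, and `sample_indices` are all assumptions made for illustration. In particular, the abstract states that the sampling distribution is learned by optimizing seven variables, whereas this sketch simply fixes the shape parameters `a` and `b` by hand.

```python
import math
import random


def margin_uncertainty(probs):
    """Uncertainty of one oracle prediction: 1 - (top1 - top2) probability.

    Assumed scoring rule; 0 means fully confident, 1 means a tie.
    """
    top = sorted(probs, reverse=True)
    return 1.0 - (top[0] - top[1])


def beta_pdf(x, a, b):
    """Beta(a, b) density, with x clamped away from 0 and 1 for stability."""
    x = min(max(x, 1e-6), 1.0 - 1e-6)
    norm = math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
    return norm * x ** (a - 1) * (1.0 - x) ** (b - 1)


def sample_indices(oracle_probs, size, a=2.0, b=1.0, seed=0):
    """Draw training-row indices with probability driven by oracle uncertainty.

    oracle_probs: one list of class probabilities per training point.
    a, b: hand-picked Beta shape parameters (hypothetical stand-ins for
          the parameters the paper obtains through optimization).
    """
    weights = [beta_pdf(margin_uncertainty(p), a, b) for p in oracle_probs]
    rng = random.Random(seed)
    return rng.choices(range(len(oracle_probs)), weights=weights, k=size)
```

With `a=2, b=1` the sketch favors points on which the oracle is uncertain; the small interpretable model would then be fit on the rows at the returned indices instead of on the full training set.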

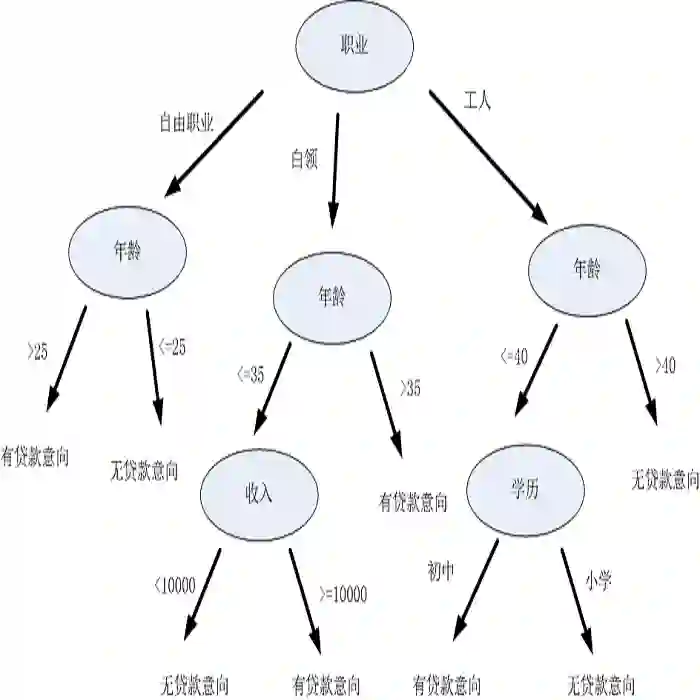
