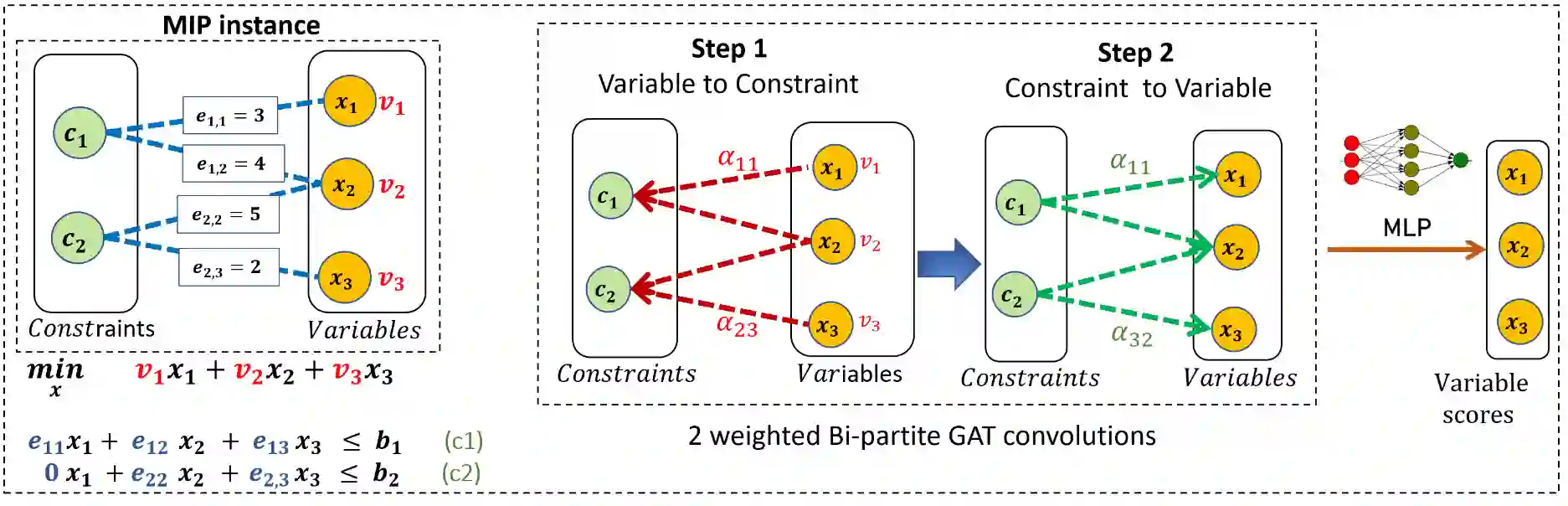

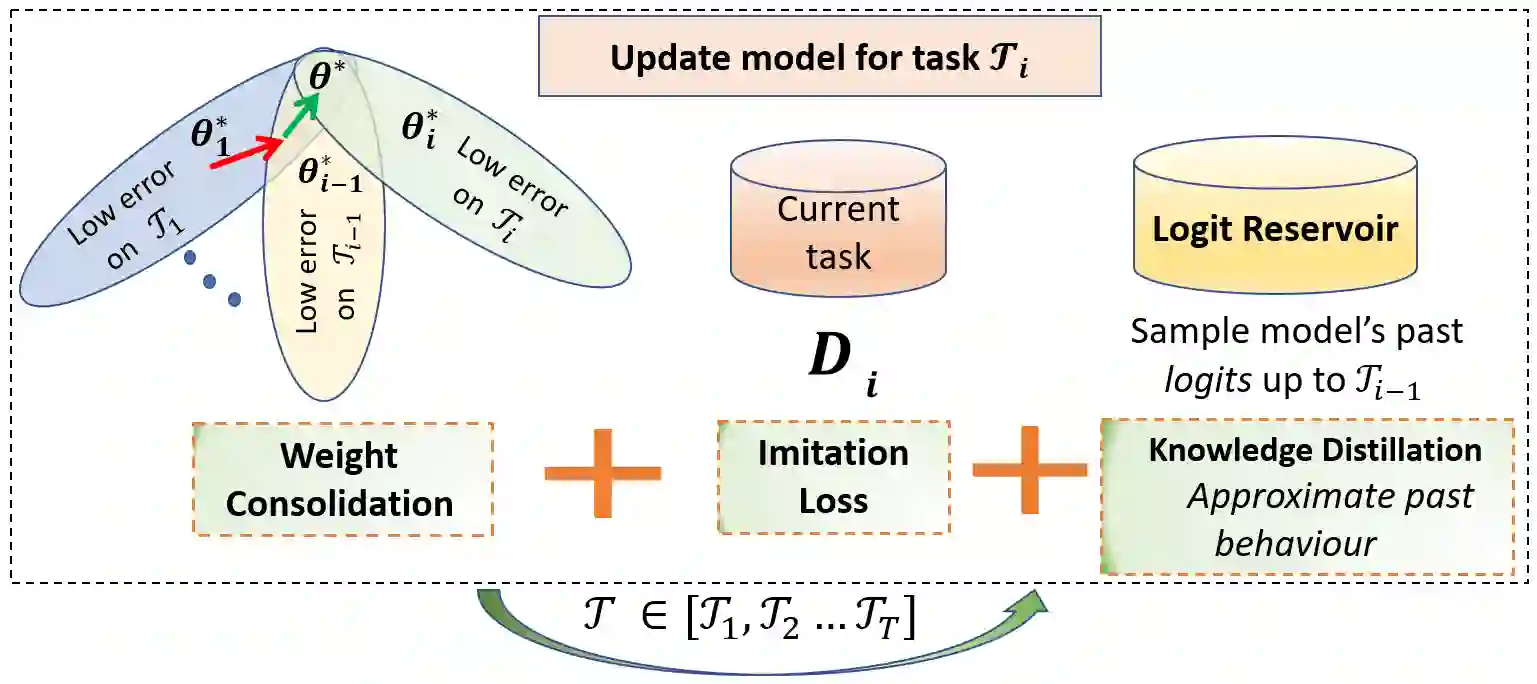

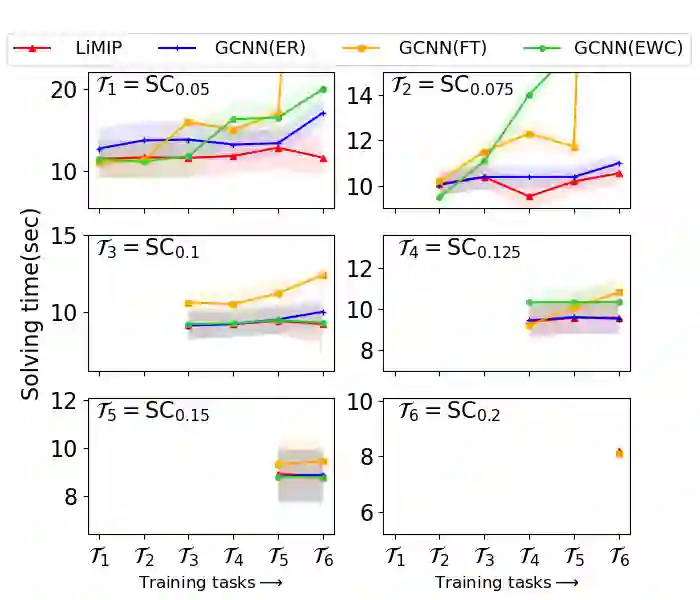

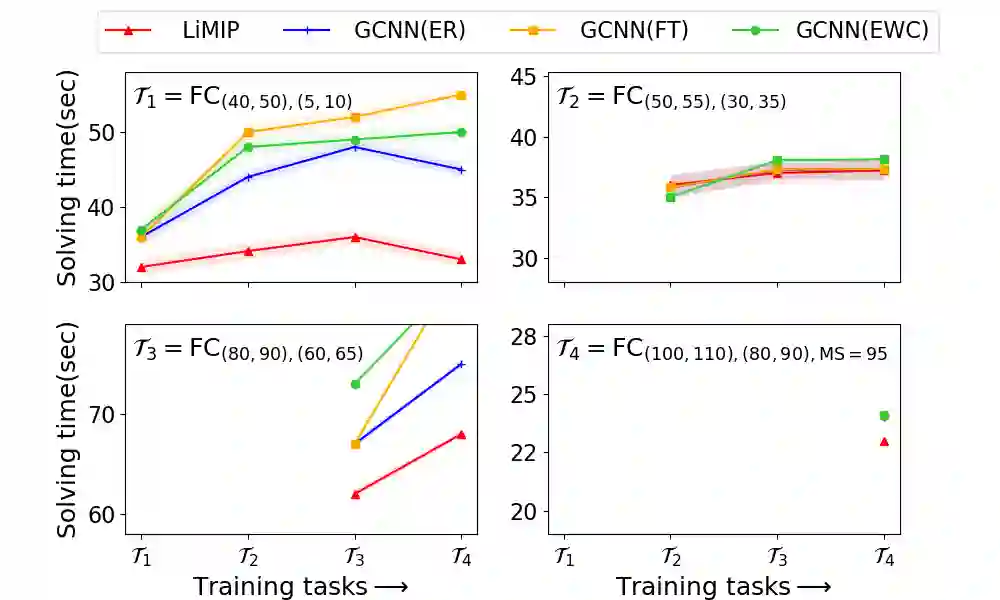

Mixed Integer programs (MIPs) are typically solved by the Branch-and-Bound algorithm. Recently, Learning to imitate fast approximations of the expert strong branching heuristic has gained attention due to its success in reducing the running time for solving MIPs. However, existing learning-to-branch methods assume that the entire training data is available in a single session of training. This assumption is often not true, and if the training data is supplied in continual fashion over time, existing techniques suffer from catastrophic forgetting. In this work, we study the hitherto unexplored paradigm of Lifelong Learning to Branch on Mixed Integer Programs. To mitigate catastrophic forgetting, we propose LIMIP, which is powered by the idea of modeling an MIP instance in the form of a bipartite graph, which we map to an embedding space using a bipartite Graph Attention Network. This rich embedding space avoids catastrophic forgetting through the application of knowledge distillation and elastic weight consolidation, wherein we learn the parameters key towards retaining efficacy and are therefore protected from significant drift. We evaluate LIMIP on a series of NP-hard problems and establish that in comparison to existing baselines, LIMIP is up to 50% better when confronted with lifelong learning.

翻译:混合整形程序(MIPs)通常由分支和组合组合算法解决。最近,由于成功地缩短了解决混合整形程序的运行时间,学习模仿专家强大整形结构的快速近似,最近由于成功地减少了解决混合整形程序的运行时间而引起人们的注意。然而,现有的从学习到分支的方法假定,整个培训数据都可在一次培训中找到。这一假设往往不真实,如果培训数据在一段时间里以连续的方式提供,现有技术就会受到灾难性的遗忘。在这项工作中,我们研究至今尚未探索的终身学习模式,到混合整形程序分支。为了减轻灾难性的遗忘,我们提议LIMIP,这是由模拟一个双面图式的模型所推动的,我们用双面图绘制一个嵌入空间。我们用双面图绘制一个双面图显示空间。这种丰富的嵌入空间通过应用知识蒸馏和弹性重量整合避免灾难性的遗忘,我们学习了保存功效的关键参数,因此受到保护,避免发生重大漂浮。我们评估LIMIP在一系列的NP-硬度上与现有问题相比,我们评估了50个基底比。