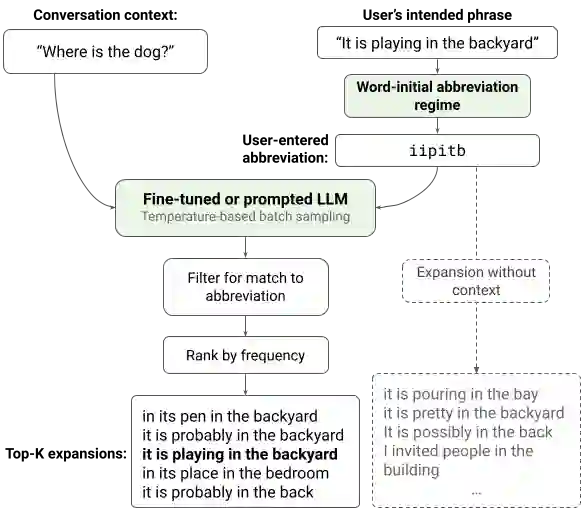

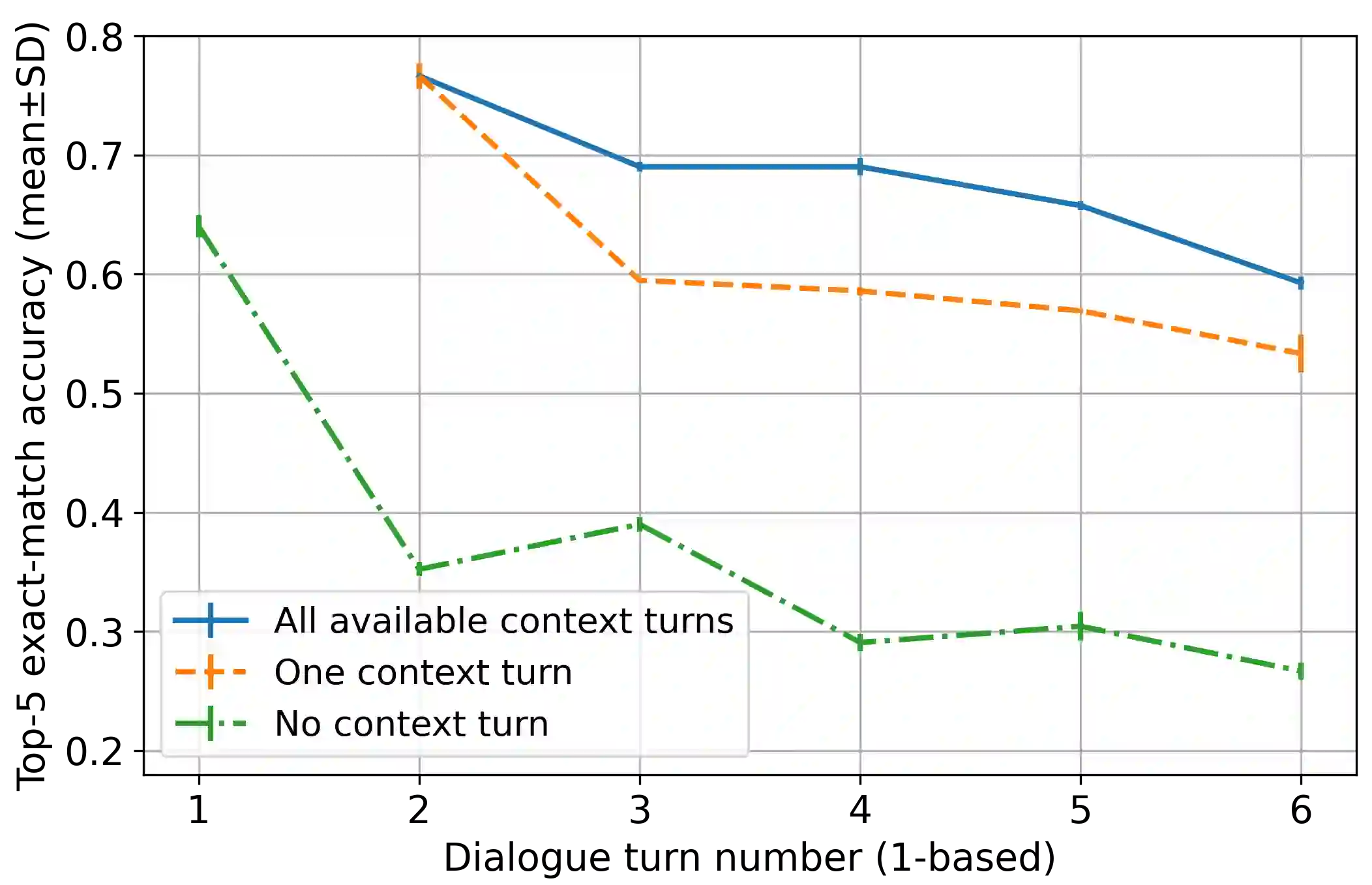

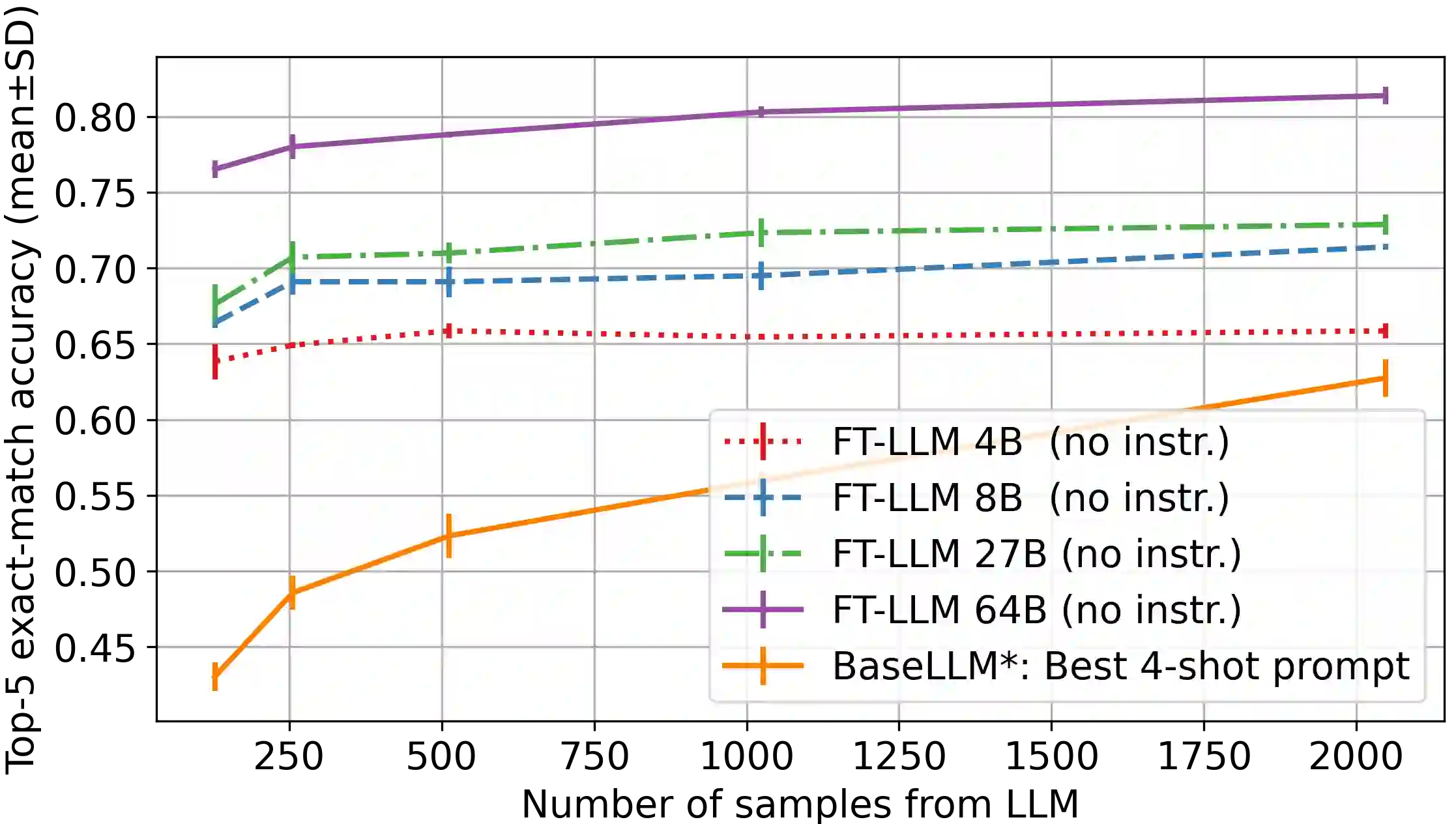

Motivated by the need for accelerating text entry in augmentative and alternative communication (AAC) for people with severe motor impairments, we propose a paradigm in which phrases are abbreviated aggressively as primarily word-initial letters. Our approach is to expand the abbreviations into full-phrase options by leveraging conversation context with the power of pretrained large language models (LLMs). Through zero-shot, few-shot, and fine-tuning experiments on four public conversation datasets, we show that for replies to the initial turn of a dialog, an LLM with 64B parameters is able to exactly expand over 70% of phrases with abbreviation length up to 10, leading to an effective keystroke saving rate of up to about 77% on these exact expansions. Including a small amount of context in the form of a single conversation turn more than doubles abbreviation expansion accuracies compared to having no context, an effect that is more pronounced for longer phrases. Additionally, the robustness of models against typo noise can be enhanced through fine-tuning on noisy data.

翻译:由于需要加快对有严重运动障碍的人的强化和替代交流(AAC)的文本输入,我们提出了一个模式,即以主要是字首字母的形式将短语缩写成主要为字首字母。我们的做法是利用预先培训的大型语言模型(LLMs)的力量来利用对话背景,将缩略语扩展为全句选项。通过零弹、微小和微调四个公开对话数据集的实验,我们显示,在对对话初始转弯的答复中,一个具有64B参数的LLM能够将缩写长度超过70%的短语完全扩展至10,从而在这些精确扩展中有效按键节约率达到大约77%。包括一个单一对话形式的小段次的缩略语扩展缩略语,而不是没有上下文的缩略语扩展。此外,通过对响声数据的微调微调整,可以提高模型的稳健性。