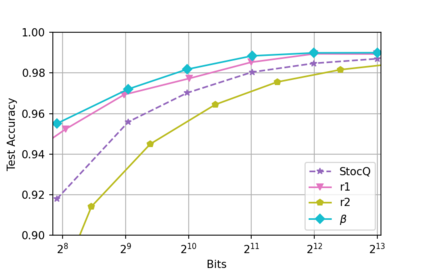

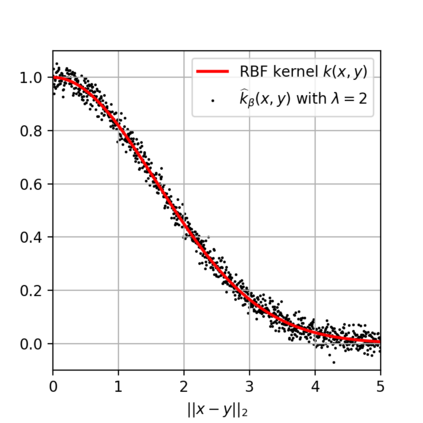

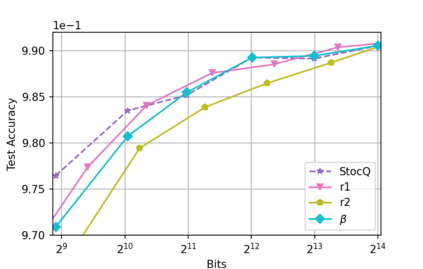

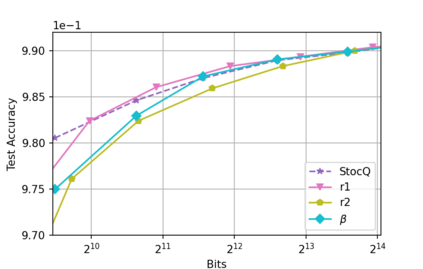

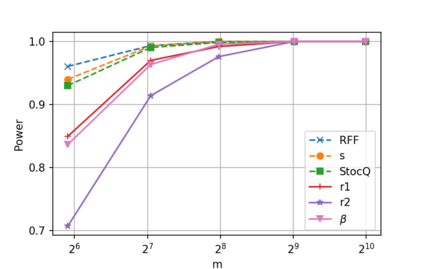

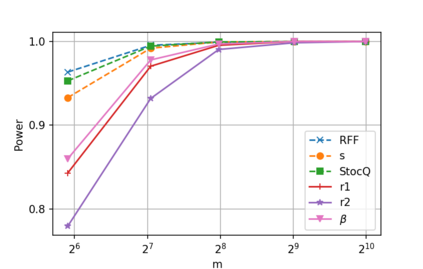

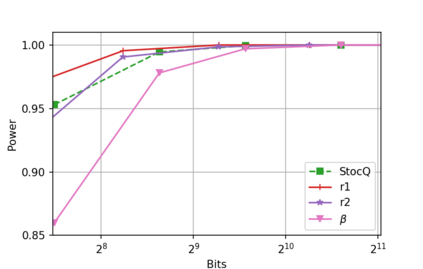

We propose using low bit-depth Sigma-Delta and distributed noise-shaping methods to quantize the random Fourier features (RFFs) associated with shift-invariant kernels. We prove that our quantized RFFs, even with $1$-bit quantization, approximate the underlying kernels with high accuracy, and that the approximation error decays at least polynomially fast as the dimension of the RFFs increases. We also show that the quantized RFFs can be further compressed, yielding an excellent trade-off between memory use and accuracy: the approximation error then decays exponentially as a function of the number of bits used. Finally, experiments on several machine learning tasks show that our methods compare favorably with other state-of-the-art quantization methods in this context.
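To make the idea concrete, here is a minimal sketch of 1-bit, first-order Sigma-Delta quantization applied to RFFs for a Gaussian kernel. It is an illustration under stated assumptions, not the construction analyzed in the paper: the function names, the Gaussian kernel choice, the block length `lam`, and the uniform block-sum estimator are all assumptions introduced here. The estimator relies on the noise-shaping property that, within any block, the sum of the quantized features differs from the sum of the unquantized features by at most 2.

```python
import numpy as np

def rff(X, W, b):
    """Unquantized random Fourier features cos(W x + b), one row per input."""
    return np.cos(X @ W.T + b)

def sigma_delta_1bit(Z):
    """First-order Sigma-Delta quantization of each row of Z to {-1, +1}.

    Assumes entries of Z lie in [-1, 1], which keeps the state u in [-1, 1].
    """
    Q = np.empty_like(Z)
    u = np.zeros(Z.shape[0])              # accumulated quantization error per row
    for i in range(Z.shape[1]):
        v = u + Z[:, i]
        Q[:, i] = np.where(v >= 0, 1.0, -1.0)
        u = v - Q[:, i]                   # error is carried to the next feature
    return Q

def block_sums(F, lam):
    """Sum consecutive blocks of length lam (feature count must be divisible by lam)."""
    n, m = F.shape
    return F.reshape(n, m // lam, lam).sum(axis=2)

def kernel_estimate(Fx, Fy, lam):
    """Row-wise kernel estimate from block sums (illustrative estimator, an assumption)."""
    m = Fx.shape[1]
    return 2.0 / m * np.sum(block_sums(Fx, lam) * block_sums(Fy, lam), axis=1)

# Hypothetical demo parameters, chosen only for illustration.
rng = np.random.default_rng(0)
d, m, lam, sigma = 5, 4096, 64, 1.0
W = rng.normal(scale=1.0 / sigma, size=(m, d))   # frequencies for a Gaussian kernel
b = rng.uniform(0.0, 2.0 * np.pi, size=m)        # random phases
x = rng.normal(size=(1, d)) * 0.3
y = rng.normal(size=(1, d)) * 0.3

Zx, Zy = rff(x, W, b), rff(y, W, b)
Qx, Qy = sigma_delta_1bit(Zx), sigma_delta_1bit(Zy)

exact = np.exp(-np.sum((x - y) ** 2) / (2.0 * sigma ** 2))
approx = kernel_estimate(Qx, Qy, lam)[0]
print(f"exact kernel: {exact:.3f}, 1-bit Sigma-Delta estimate: {approx:.3f}")
```

Each quantized feature costs one bit, yet the block sums retain most of the information in the unquantized features because the quantization error is pushed into differences between neighboring entries rather than into their sums. In this sketch, larger `m` and `lam` would be expected to tighten the estimate, at the cost of more features per input.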
