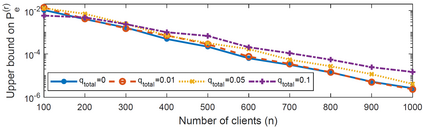

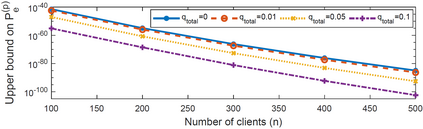

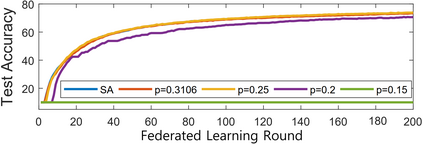

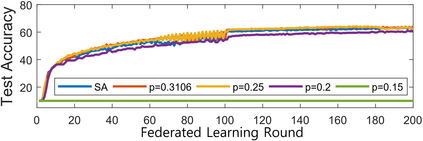

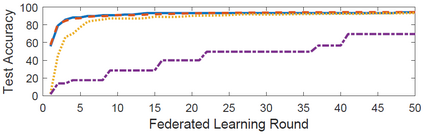

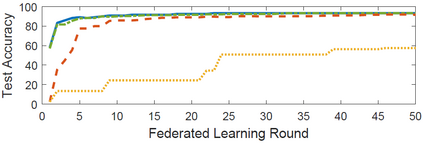

Federated learning has attracted attention as a way to train neural networks on distributed data without requiring individual nodes to share their data. Unfortunately, it has also been shown that adversaries may be able to extract local data from the model parameters transmitted during federated learning. A recent solution based on the secure aggregation primitive enabled privacy-preserving federated learning, but at the expense of significant extra communication and computational resources. In this paper, we propose a low-complexity scheme that provides data privacy using substantially fewer communication and computational resources than the existing secure solution. The key idea behind the proposed scheme is to design the topology of secret-sharing nodes as a sparse random graph instead of the complete graph used by the existing solution. We first derive the necessary and sufficient condition on the graph that guarantees both reliability and privacy. We then propose using the Erdős-Rényi graph in particular and provide theoretical guarantees on the reliability and privacy of the proposed scheme. Through extensive real-world experiments, we demonstrate that our scheme, using only $20 \sim 30\%$ of the resources required by the conventional scheme, maintains virtually the same levels of reliability and data privacy in practical federated learning systems.
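The key idea is to replace the complete graph of secret-sharing pairs with a sparse random graph. It can be illustrated with a toy version of pairwise additive masking, the mechanism that secure aggregation builds on. The sketch is not the authors' scheme. It assumes honest clients and no dropouts. It also hands out per-edge seeds directly instead of agreeing on them through key exchange. It omits the secret sharing of seeds that the real protocol uses to recover from dropped clients.

The names `erdos_renyi_edges`, `pairwise_mask`, `mask_updates`, `aggregate` and the modulus `Q` are hypothetical. For each edge (i, j) of a G(n, p) graph, client i adds a mask and client j subtracts the same mask. All masks therefore cancel in the server's sum, whichever graph is used. Meanwhile, the number of pairwise seeds falls from n(n-1)/2 for the complete graph to p·n(n-1)/2 in expectation.

```python
import random
from itertools import combinations

Q = 2**16  # hypothetical modulus for the masked integer arithmetic


def erdos_renyi_edges(n, p, rng):
    """Sample the edge set of an Erdos-Renyi graph G(n, p): each pair (i, j), i < j, is kept independently with probability p."""
    return [(i, j) for i, j in combinations(range(n), 2) if rng.random() < p]


def pairwise_mask(seed, dim):
    """Expand a per-edge seed into a mask vector.

    Uses Python's Mersenne Twister for illustration only; a real protocol would
    use a cryptographic PRG keyed by a secret agreed between the two clients.
    """
    prg = random.Random(seed)
    return [prg.randrange(Q) for _ in range(dim)]


def mask_updates(updates, edges, seeds):
    """Return each client's masked update (mod Q) given the graph's edges and per-edge seeds."""
    dim = len(updates[0])
    masked = [[x % Q for x in u] for u in updates]
    for (i, j) in edges:
        m = pairwise_mask(seeds[(i, j)], dim)
        for k in range(dim):
            masked[i][k] = (masked[i][k] + m[k]) % Q  # lower-indexed endpoint adds the mask
            masked[j][k] = (masked[j][k] - m[k]) % Q  # higher-indexed endpoint subtracts it
    return masked


def aggregate(masked):
    """Server-side sum of masked updates; the pairwise masks cancel edge by edge."""
    dim = len(masked[0])
    return [sum(vec[k] for vec in masked) % Q for k in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    n, dim, p = 50, 4, 0.2
    updates = [[rng.randrange(100) for _ in range(dim)] for _ in range(n)]
    edges = erdos_renyi_edges(n, p, rng)
    seeds = {e: rng.getrandbits(64) for e in edges}
    masked = mask_updates(updates, edges, seeds)
    assert aggregate(masked) == [sum(u[k] for u in updates) % Q for k in range(dim)]
    print(len(edges), "pairwise seeds vs", n * (n - 1) // 2, "for the complete graph")
```

In this toy version, a client with no incident edges would upload its update unmasked. That suggests why the graph cannot be made arbitrarily sparse. The exact reliability and privacy condition on the graph is the one derived in the paper and is not reproduced here.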

翻译:使用分散的数据来培训神经网络,无需个别节点来分享数据; 不幸的是,还表明对手可能能够从联邦学习期间传送的模型参数中提取本地数据内容; 最近基于安全聚合原始软件的解决方案,使隐私能够保护联合会学习,但以大量额外通信/计算资源为代价; 在本文件中,我们提出一个低兼容性计划,利用与现有安全解决方案相比大量减少的通信/计算资源来提供数据隐私,提供数据隐私; 所建议的计划的关键思想是将秘密共享节点的表层设计成一个稀散的随机图,而不是与现有解决方案相对应的完整图表; 我们首先在图表上获得必要和充分的条件,以保证可靠性和隐私; 我们然后特别建议使用Erd\H{o}s-R\'enyi 图表,为拟议计划的可靠性/隐私提供理论保障。 通过广泛的现实世界实验,我们证明我们的计划,仅使用20美元左右的保密性数据,实际上维持了常规系统所需的可靠程度。