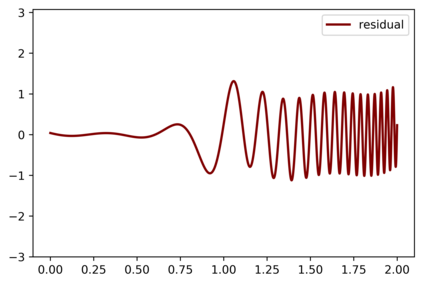

Hierarchical support vector regression (HSVR) models a function from data as a linear combination of SVR models at a range of scales, starting at a coarse scale and moving to finer scales as the hierarchy continues. The original formulation of HSVR provided no rule for choosing the depth of the model. In this paper, we observe a phase transition in the training error across a number of models: the error remains relatively constant as layers are added until a critical scale is passed, at which point it drops close to zero and stays nearly constant as further layers are added. We introduce a method to predict this critical scale a priori, based on the support of either the Fourier transform of the data or the Dynamic Mode Decomposition (DMD) spectrum. This allows us to determine the required number of layers before training any model.
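The abstract does not say how the spectral support is thresholded, how the critical scale is derived from it, or how the scales shrink from layer to layer. The following NumPy sketch therefore fills these in with hypothetical choices, and it is not the paper's method. All function names (`fourier_max_frequency`, `dmd_max_frequency`, `critical_scale`, `layers_needed`) and parameters (`rel_tol`, `delays`, `rank`, `ratio`) are illustrative.

The sketch makes these assumptions:

- A frequency belongs to the support if its magnitude exceeds a relative threshold.
- The DMD variant is exact DMD applied to a time-delay (Hankel) embedding of the scalar signal.
- The critical scale is half the shortest period present.
- Each HSVR layer halves the previous layer's scale.

No SVR is trained here. The point is only that a layer count can be computed from the data before any fitting.

```python
import numpy as np


def fourier_max_frequency(y, dx, rel_tol=1e-3):
    """Largest frequency in the thresholded support of the DFT of y.

    Assumption (hypothetical): a frequency is "in the support" if its magnitude
    is at least rel_tol times the largest Fourier magnitude.
    """
    mags = np.abs(np.fft.rfft(y))
    freqs = np.fft.rfftfreq(len(y), d=dx)
    support = freqs[mags >= rel_tol * mags.max()]
    return support.max()


def dmd_max_frequency(y, dt, delays, rank):
    """Largest |frequency| in the exact-DMD spectrum of a delay-embedded signal.

    Assumption (hypothetical): the scalar series is lifted into a Hankel matrix
    so that DMD can resolve oscillations; `delays` and `rank` are user choices.
    """
    n = len(y) - delays + 1
    hankel = np.array([y[i:i + n] for i in range(delays)])  # shape (delays, n)
    X, Y = hankel[:, :-1], hankel[:, 1:]                     # snapshot pairs
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, Vh = U[:, :rank], s[:rank], Vh[:rank]              # rank truncation
    # Reduced operator A_tilde = U^* Y V Sigma^{-1} (columns scaled by 1/s).
    A_tilde = U.conj().T @ Y @ Vh.conj().T / s
    eigvals = np.linalg.eigvals(A_tilde)
    # Discrete-time eigenvalue exp(i*2*pi*f*dt) -> frequency f.
    freqs = np.angle(eigvals) / (2 * np.pi * dt)
    return np.abs(freqs).max()


def critical_scale(f_max):
    """Hypothetical mapping: critical scale = half the shortest period present."""
    return 1.0 / (2.0 * f_max)


def layers_needed(coarse_scale, crit, ratio=0.5):
    """Count layers until the layer scale falls to or below the critical scale.

    Assumption (hypothetical): layer k (k = 1, 2, ...) uses the scale
    coarse_scale * ratio**(k - 1), i.e. each layer halves the scale by default.
    """
    layers, scale = 1, coarse_scale
    while scale > crit:
        scale *= ratio
        layers += 1
    return layers


# Usage: two sinusoids (3 and 10 cycles) sampled on [0, 1).
x = np.arange(256) / 256
y = np.sin(2 * np.pi * 3 * x) + 0.5 * np.sin(2 * np.pi * 10 * x)
f_fourier = fourier_max_frequency(y, dx=1 / 256)
f_dmd = dmd_max_frequency(y, dt=1 / 256, delays=32, rank=4)
n_layers = layers_needed(1.0, critical_scale(f_fourier))
print(f_fourier, f_dmd, n_layers)
```

Here both estimates recover the highest frequency present, 10 cycles per unit length. Under the halving schedule, that frequency fixes the depth. Changing `ratio`, `rel_tol`, or the `critical_scale` mapping changes the predicted layer count, and these are exactly the details the paper's own rule would have to pin down.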
